To be fair, the protein folding thing is legitimately impressive and an actual good use for the technology that isn't just harvesting people's creativity for profit.

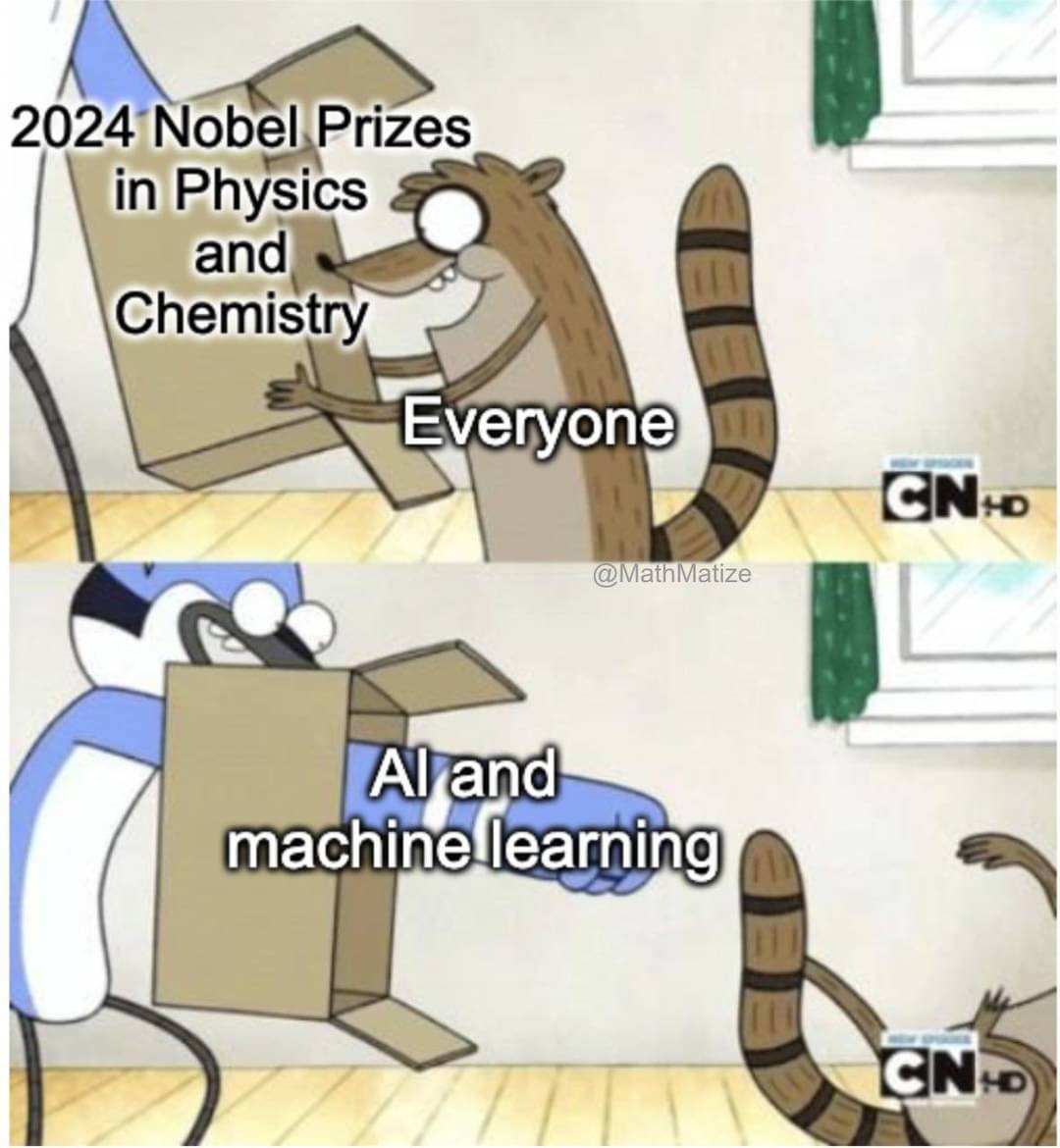

The way to tell so often seems to be if someone has called it AI or Machine Learning.

AI? "I put this through chatgpt" (or "The media department has us by the balls")

ML? "I crunched a huge amount of data in a huge amount of ways, and found something interesting"

Actually, I endorse the fact that we are less shy about calling algorithms "AI" when they do exhibit emergent intelligence and broad knowledge. AI used to be a legitimate name for the field that encompasses ML, and we have come to understand a lot of interesting things about intelligence thanks to LLMs: that training on next-word prediction is enough to create pretty complex world models, that transformer architectures are capable of abstraction, and that morality arises naturally when you try to acquire all the prerequisites for a normal discussion with a human.

Yeah my class in college was just called Artificial Intelligence

omg what is even the point of scientific progress and the advancement of human knowledge unless one specific person gets all the glory. What is science even for if not the validation of some human's individual ego.

The whole "all AI bad" stance is out of touch and primitivist.

John J. Hopfield's work is SCIENCE in caps. A decade of research during the 80s, when computational power couldn't really do much with these models. And now it has been shown that those models work really well given proper computational power.

Also, not all AI is generative AI that takes money out of fanfic artists' pockets, or a useless hallucinating chatbot. Neural networks are commonly used in science as a very useful tool for many tasks. Image recognition is nowadays practically a solved problem thanks to this research. Protein folding. Dataset reduction. Fluent text to speech. Speech recognition... AI may be getting more traction nowadays because of the generative AIs (which also have their own merit, like it or not), but there is much more to it.

As with any technological advance, there are shitty use cases and good use cases. You cannot condemn a whole tech just for the shitty uses of some greedy capitalists. Well... you can condemn it. But then I will classify you as a primitivist.

Scientific theory that resulted in practical applications useful to people is why the Nobel Prize was created to begin with. So it is a well-deserved prize. More so than many others.

Agreed. Which is why we should call it Machine Learning (or Data Science) and continue to torch OpenAI until it is no more.

'AI hate' is usually connected with insane claims like 'we have "reasoning" models'.

That shit needs to die in a fire.

I'm still waiting for the full-planet weather model. That will be something.

I'm still waiting for the full-planet weather model. That will be something.

That's going to be a hard one, given that past weather patterns are increasingly not predictive of future weather patterns, because something keeps dumping CO2 into the atmosphere and raising the global temperature

Ooooopsieeees it was me 🙈

Generative AI is really causing a negative association with AI in general to the point where a proper rebranding is probably in order.

Generative AI is part of AI. And it has its own merits. Very big merits. Like it or not, it is a milestone in the field. It is mostly hated not because it doesn't work but because it does.

If generative AI could not create images the way it does I assure you we wouldn't have the legion of etsy and patreon painters complaining about it.

The Nobel Prize is not for generative AI, of course; it's for the fathers of the field and their neural networks, which made most of the advances since then possible.

Let's start by not calling it AI anymore. Cause it isn't.

It has been called that since the 50s, when it could do literally nothing because computing power wasn't enough. It is the field that leads to an artificially created intelligence. We never had any issues with the name. No need for a rebrand.

You sir or madam give me hope that there are still reasonable people on the internet. Well written.

Wait, are you an AI bot defending itself...?

I don't get the ai hate sentiment. In fact I want ai to be so good that it steals all our jobs. Every single "worker" on the planet. The only job I don't think they can steal is that of middle management because I don't think we have digitized data on how to suck your own dick. After everybody is jobless, then we would be free. We won't need the rich. They can be made into a fine broth.

Sarcasm aside, I really believe we should automate all menial jobs, crunch more data and make this world a better place, not steal creative content made by humans and make second rate copies.

The problem with AI isn't the tech itself. It's what capitalism is doing with it. Alongside what you say, using AI to achieve fully automated luxury gay space communism would be wonderful.

I don't know if you've been paying attention to everything that's happened since the industrial revolution but that's not how it's going to work

The problem is that it will be the rich that are the owners of the AI that stole your job so suddenly we peasants are no longer needed. We won't be free, we will be broth.

Then you have a choice.

Option 1. Halt scientific and technological progress and be robbed anyway because if capitalists do not get more money out of tech they are getting it out of making you work more hours for less money.

Option 2. End capitalism.

I vote option 2

Well you see 100 people won't be able to make soup of trillions. But you know what we, a trillion people, can do? Run the guillotine 100 times.

Well you see 100 people won’t be able to make soup of trillions.

You should check out the climate catastrophe sometime.

Had me in the first half, not gonna lie

I don't get the ai hate sentiment.

I don't get what's not to get. AI is a heap of bullshit that's piled on top of a decade of cryptobros.

it's not even impressive enough to make a positive world impact in the 2-3 years it's been publicly available.

shit is going to crash and burn like web3.

I've seen people put full on contracts that are behind NDAs through a public content trained AI.

I've seen developers use cuck-pilot for a year and "never" code again... until the PR is sent back over and over and over again and they have to rewrite it.

I've seen the AI news about new chemicals, new science, new _fill-in-the-blank and it all be PR bullshit.

so yeah, I don't believe AI is our savior. can it make some convincing porn? sure. can it do my taxes? probably not.

You are ignoring ALL of the positive applications of AI from several decades of development, and only focusing on the negative aspects of generative AI.

Here is a non-exhaustive list of some applications:

- In healthcare as a tool for earlier detection and prevention of certain diseases

- For anomaly detection in intrusion detection systems, protecting web servers

- Disaster relief for identifying the affected areas and aiding in planning the rescue effort

- Fall detection in e.g. phones and smartwatches that can alert medical services, especially useful for the elderly.

- Various forecasting applications that can help plan e.g. production to reduce waste. Etc...

There have even been a lot of good applications of generative AI, e.g. in production, especially for construction, where a generative AI can design a functionally equivalent product that uses less material while still maintaining its strength. This reduces the cost of manufacturing, and also the environmental impact, thanks to the reduced material usage.

Does AI have its problems? Sure. Is generative AI being misused and abused? Definitely. But just because some applications are useless it doesn't mean that the whole field is.

A hammer can be used to murder someone, that does not mean that all hammers are murder weapons.

When I hear "AI", I think of that thing that proofreads my emails and writes boilerplate code. Just a useful tool among a long list of others. Why would I spend emotional effort hating it? I think people who "hate" AI are just as annoying as the people pushing it as the solution to all our problems.

it’s not even impressive enough to make a positive world impact in the 2-3 years it’s been publicly available.

It literally just won people two Nobel prizes

If I just hand wave all the good things and call them bullshit, AI is nothing more than bad things!

- Lemmy

Current "AI's" (LLMs) are only useful for art and non-fact based text. The two things people particularly do not need computers to do for them.

LLMs fucking suck. But that's the worst kind of AI. It's just autocorrect on steroids. But you know what a good AI is? The one that, given an amino acid sequence, predicts its 3D structure. It's mind-boggling. We can design personal protein robots with that kind of knowledge.

I would love AI. Still waiting for it. Probably 50 years away (if human society lasts that long).

What I hate is the term being yet another scientific term to get stolen and watered down by brainless capitalists so they can scam money out of other brainless capitalists.

they will automate all menial jobs, fire 90% of the workers and ask the remaining 10% to oversee the AI-automated tasks while also doing all the other tasks which cannot be automated. all so that shareholders can add some more billions on top of their existing stack of billions.

Today I learned about AI agents in the news and I can only think: Jesus. The example shown was of an AI agent using voice synthesis to bargain with a human agent over the fee for a night in some random hotel. The news commentator talked about how people could use these agents to get rid of annoying, repetitive, unwanted phone calls. Then I remembered the night my in-laws were tricked into giving their car away to robbers because they ~~thought~~ were told my sister-in-law had been kidnapped, all through a phone call.

Yeah, AI agents will free us all from invasive megacorporations. /s

Why isn't anyone saying that AI and machine learning are (currently) the same thing? There's no such thing as "Artificial Intelligence" (yet)

It's more that intelligence is very poorly defined, so a less controversial statement is that Artificial General Intelligence doesn't exist.

Also, generative AI such as LLMs is very, very far from it, and machine learning in general hasn't yielded much in the pursuit of sophonce and sapience.

Although they technically can pass a Turing test, as long as the Turing test has a very short time limit and the testers are chosen at random.

I work in an ML-adjacent field (CV) and I thought I'd add that AI and ML aren't quite the same thing. You can have non-learning based methods that fall under the field of AI - for instance, tree search methods can be pretty effective algorithms to define an agent for relatively simple games like checkers, and they don't require any learning whatsoever.
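To make the "no learning whatsoever" point concrete, here is a minimal sketch of a tree search agent. It assumes a toy stand-in for checkers, a hypothetical subtraction game where players alternately take 1, 2 or 3 stones and whoever takes the last stone wins; the names `MOVES`, `minimax` and `best_move` are made up for illustration. The agent plays perfectly by exhaustively searching the game tree, with no training data and no learned parameters.

```python
from functools import lru_cache

# Hypothetical rule for this toy game: a player may take 1, 2 or 3 stones.
MOVES = (1, 2, 3)


@lru_cache(maxsize=None)
def minimax(stones: int) -> int:
    """Return +1 if the player to move can force a win, otherwise -1."""
    if stones == 0:
        # The previous player took the last stone, so the player to move lost.
        return -1
    # Negamax form: our value is the negation of the opponent's best value.
    return max(-minimax(stones - m) for m in MOVES if m <= stones)


def best_move(stones: int) -> int:
    """Pick the legal move that leaves the opponent in the worst position."""
    legal = [m for m in MOVES if m <= stones]
    return max(legal, key=lambda m: -minimax(stones - m))


if __name__ == "__main__":
    for n in range(1, 9):
        outcome = "win" if minimax(n) == 1 else "loss"
        print(f"{n} stones: forced {outcome}, suggested move: take {best_move(n)}")
```

Real game-playing programs add depth limits, pruning and a hand-written evaluation function because the full tree is far too large, but the principle is the same: search, not learning.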

Normally, we say Deep Learning (the subfield of ML that relates to deep neural networks, including LLMs) is a subset of Machine Learning, which in turn is a subset of AI.

Like others have mentioned, AI is just a poorly defined term unfortunately, largely because intelligence isn't a well defined term either. In my undergrad we defined an AI system as a programmed system that has the capacity to do tasks that are considered to require intelligence. Obviously, this definition gets flaky since not everyone agrees on what tasks would be considered to require intelligence. This also has the problem where when the field solves a problem, people (including those in the field) tend to think "well, if we could solve it, surely it couldn't have really required intelligence" and then move the goal posts. We've seen that already with games like Chess and Go, as well as CV tasks like image recognition and object detection at super-human accuracy.

that heavily depends on how you define "intelligence". if you insist on "think, reason and behave like a human", then no, we don't have "Artificial Intelligence" yet (although there are plenty of people that would argue that we do). on the other hand if you consider the ability to play chess or go intelligence, the answer is different.