the_dunk_tank

It's the dunk tank.

This is where you come to post big-brained hot takes by chuds, libs, or even fellow leftists, and tear them to itty-bitty pieces with precision dunkstrikes.

Rule 1: All posts must include links to the subject matter, and no identifying information should be redacted.

Rule 2: If your source is a reactionary website, please use archive.is instead of linking directly.

Rule 3: No sectarianism.

Rule 4: TERF/SWERFs Not Welcome

Rule 5: No ableism of any kind (that includes stuff like libt*rd)

Rule 6: Do not post fellow hexbears.

Rule 7: Do not individually target other instances' admins or moderators.

Rule 8: The subject of a post cannot be low hanging fruit, that is, comments/posts made by a private person that have a low number of upvotes/likes/views. Comments/posts made on other instances that are accessible from hexbear are an exception to this.

Rule 9: if you post ironic rage bait im going to make a personal visit to your house to make sure you never make this mistake again

I couldn't say, I don't really watch tv and have no basis of comparison. But I do want to take this opportunity to slag TBP - the "Dark Forest" is lazy thinking that reflects contemporary geopolitics, and not even that very well. It's tech-bro silliness. The problems of trying to blow up another planet across interstellar distances are so vast the author doesn't even try to make it seem feasible, he just made up a bunch of ridiculous magic excuse "technology" to make the plot work. And the plot is just "wot if nature was red in tooth and claw? Makes you think! Doesn't it?" Just reductionist social darwinist bullshit. "The optimal and indeed only survival action is to kill literally everyone you encounter the moment you encounter them!" is silly bullshit. It doesn't work in nature, it doesn't work on Earth, and it is not meaningfully possible across interstellar distances with any technology that doesn't rely on magical thinking to function.

It's relatively easy to blow up another planet across interstellar distances, as long as you accept that 1) it will take thousands of years to accomplish, and 2) you will never possibly see any benefit from it.

I'm much more in line with Posadas on the motivations of aliens capable of interstellar travel; there really is no reason to take the Dark Forest seriously as a solution to the Fermi Paradox. The thing that annoys me the most about The Three Body Problem is that the aliens have "a wizard did it" levels of technology, but can't solve their survival problem in their own star system or find uninhabited star systems to terraform. It's all just "how do we justify interstellar invasion in a novel now that we know interstellar invasion is impossible and/or not worthwhile". Like you say, it's contrived.

I think the author would agree with this sentence lol, I've read nearly everything Liu Cixin has written that's been translated into English, he did say somewhere that sci-fi wouldn't be interesting if it was about what was the likely thing to happen. He said he doesn't believe the stories he writes are possible or likely, but just a way to explore different concepts.

In the books, the Trisolarans only funneled all their resources into developing those specific technologies after becoming aware of a habitable planet as close as 4 light years away, at the expense of many other developments that would have been beneficial for their ongoing society. It was a huge gamble for their civilization too.

What really maddens me about Dark Forest is that it absolutely is not easy. You're trying to build a machine with enough energy to glass a planet that you're somehow going to launch across interstellar distances, with enough delta-v to catch another planet, and enough delta-v for course correction and terminal maneuvering, and some kind of hardened control systems that can survive decades, centuries, or millennia in the deep black, and some kind of power system that can run all of this presumably using magic bc idk what other power system could do all that.

Stembros talk about how they expect to find megastructures like Dyson swarms lying around all over interstellar space, totally ignoring basic materials science problems like "the materials to do that don't exist" and basic logistics problems like "why would you ever need or want that much energy?". They assume everyone is going to decide to strip-mine their solar system to build power generation facilities on a titanic scale, but they never ask what you would do with those facilities or why you would want them. It's a totally unconsidered faith that people will just do it bc it's what they think is cool. And they don't think about what they'd do with it, either. Like great, bro, now you've got a Kardashev Type II economy. What are you going to do with the entire energy output of a star? And then it's just star trek babble bc for the most part there isn't anything to do with it. Maybe if you were trying to run some inconceivably large number of microwaves, but with simple interventions like birth control you won't arrive at a situation where you have inconceivable numbers of people who need inconceivable numbers of microwaves to heat up inconceivable numbers of burritos.

Sorry, went a bit off course. But there seems to be this assumption with techbros that somehow these enormous challenges - how do you keep a space ship functional and intact over the course of years, decades, centuries, or millennia as you fling it at another planet? - will turn out to be trivial. It'll turn out that balling up some enormous amount of mass and energy and sticking a guidance system on it that will either function without repair or flawlessly self-repair for an indefinite period of time isn't a big deal.

And by the same notion, the "problem" of the Fermi Paradox is that obviously everyone else would want to do what I, a 20th century Euro, want to do and conquer the entire universe by sending small robots to build small robots to build small robots forever, for no real reason, in a way that would never benefit me or anyone else.

It's like "well, these things are technically feasible (debatable) so obviously people will do them!" Never stopping to consider if the things are practical or desirable or what they would accomplish.

Even asteroid mining and planetary colonisation right now are like that. Why? What are you even going to do with a billion extra tons of iron and platinum? We don't need it for anything, and anything you did build with it would probably be a complete ecological disaster. No one actually wants to live in space. It sucks up there, and it's all dead rock with nothing to see or do.

I sincerely believe that the way modern westerners look at space - "The Last Frontier", a literal frontier for colonial and imperial expansion - is silly cultural bs that obscures not only how difficult space exploitation is, but how utterly useless it is.

People just grossly underestimate how much energy it would take to legitimately blow up a planet. Like, launching a moon-sized asteroid straight to the Earth isn't enough to blow up the Earth. And there's a flip side to this where people also underestimate how much aliens would have to do to end (vertebrate) life on Earth. All they have to do is nuke a critical mass of metropolitan areas for a massive firestorm that blankets the Earth with smoke to form. They don't really need sci-fantasy tech to end (vertebrate) life.
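To put rough numbers on that, here is a back-of-the-envelope sketch comparing Earth's gravitational binding energy with the kinetic energy of a Moon-mass impactor. It assumes a uniform-density Earth (which underestimates the real binding energy somewhat) and a 20 km/s impact speed; both are illustrative assumptions, not figures from the thread.

```python
# Back-of-the-envelope comparison: energy needed to unbind the Earth
# versus the kinetic energy of a Moon-mass impactor.
# Constants are standard reference values, rounded.
G = 6.674e-11           # gravitational constant, m^3 kg^-1 s^-2
EARTH_MASS = 5.972e24   # kg
EARTH_RADIUS = 6.371e6  # m
MOON_MASS = 7.342e22    # kg

# Assumption for illustration only: a typical-ish solar system impact speed.
ASSUMED_IMPACT_SPEED = 20_000.0  # m/s


def binding_energy(mass, radius):
    """Binding energy of a uniform-density sphere: 3 * G * M^2 / (5 * R)."""
    return 3 * G * mass**2 / (5 * radius)


def kinetic_energy(mass, speed):
    """Classical kinetic energy: 0.5 * m * v^2."""
    return 0.5 * mass * speed**2


earth_binding = binding_energy(EARTH_MASS, EARTH_RADIUS)
impact = kinetic_energy(MOON_MASS, ASSUMED_IMPACT_SPEED)
ratio = impact / earth_binding

print(f"Earth binding energy:   {earth_binding:.3e} J")
print(f"Moon-mass impact energy: {impact:.3e} J")
print(f"Impact / binding ratio:  {ratio:.3f}")
```

Under these assumptions the impact delivers well under a tenth of the energy needed to actually disperse the planet, which is consistent with the point above: catastrophic for the surface, nowhere near "blowing up" the Earth.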

I feel like people assuage their very rational fears of nuclear holocaust by coming up with fanciful how-Earth-can-be-destroyed scenarios (what if a giant space station shoots a giant laser, what if a mass of self-replicating nanobots reaches Earth, what if a parasitic species that can mind-control humans invades Earth, what if the Earth gets sucked into an artificial black hole) and assuring themselves that since these are all obviously fantasy, there's no real way for humans to be wiped out.

But even with nukes - You can't just shoot a bunch of hot off the shelf nukes hundreds of years through space. By the time the nuclear material arrived it'd be massively degraded. You'd have to shoot your entire nuclear weapon production infrastructure to the Sol system, then process the nuclear material and assemble the nukes when you were only a dozenish years away. So now you've got not just a couple of hundred or thousand missiles, but the facilities to manufacture and install the cores for all of those weapons, the materials necessary to manufacture all the unstable fuel for those weapons, all kinds of things. So many of these ideas are premised entirely on fanciful hyper-tech or just completely forgetting that stuff falls apart over long periods of time being bombarded by gamma rays.

tbh Liu Cixin is basically a rationalist liberal. The whole series is based on pseudoscience like "game theory" and realist geopolitics

Game theorists when people keep choosing cooperate

Game theorists really sound like some pseudoscience shit. Kind of like how Rousseau made up the "social contract" or Hobbes the "Leviathan" when social collapse requires an explanation for authoritarianism or a state of exception.

spoiler

but tbh I can forgive him at the end of the series when it comes to a conclusion that game theory is stupid

With the spoiler... I was about to say lol, the conclusion shows how bullshit and pointless the dark forest is. I think it's the whole point of the series.

Yeah that’s what I got from that. It’s basically that article about how there was a US think tank that gave the task of solving the nuclear tension problem during the Cold War to a bunch of STEMlords and humanities students. The former managed to nuke the earth 9 times out of 10; the latter managed to defuse world tension.

But somehow the book made it about gender determinism. The whole series has some weird obsession with the gender binary which is problematic. I guess if you feel REALLY generous you can interpret it as being about how toxic masculine logic provokes the end of the universe. Imho it’s just because Liu Cixin can’t write characters well

Yeah there are some weird hangups about women in the book, probably has to do with the fact the writer is a STEMlord lol

The dude is basically an effective altruist, he said in an interview some years ago that Elon Musk gives him hope for humanity because tech will make everyone better (he also said that humans dying is trivial because millions of people die anyway as history marches on).

The EA mindset and rich people being the helmsmen of progress is nothing new. Kim Stanley Robinson does that in his work too. Maybe that's just sci-fi writer brainrot

Dude, I love those books for what they are, but the writing about women is so weird. Literally hard times make strong men, strong men make good times, good times make soft twinks.

Supernova Era also has some boomer stuff about how the youth are too dumb to face real life problems.

He also has a short story about aliens telling humans to respect their elders.

So yeah the dude is just a boomer

See, I don't know that, because the first book (in translation to English at any rate) is so mid I only finished it to see what people were on about. I have the same issue with Mass Effect. "Actually the third game addressed all the racism that was uncritically presented as good and normal with no qualifiers in the first game!" Like great, I'm really happy for them, but I didn't bother with those installments of the story because the first installment was enough to put me off.

When it's taken out of its original context, understanding the behavior of perfectly rational risk-minimizers in systems of rules, it usually is pseudo science shit.

Which doesn't actually exist anyway

Exactly! It's a mathematical fiction!

Are you telling me that happiness, democracy, freedom index is all fake?

The username fits

Is there a context in which the concept of a "perfectly rational risk minimizer" is a useful construct to explore?

Absolutely! It's very useful in communications theory. I'm thinking specifically of MIMO networks, in which game theory can be useful to find resource (ie power) allocations that allow the best power/channel capacity tradeoff. Here, the agents are nodes in a communication network, and the risk is how much power they spend to put signals into the network. Game theory is important in this kind of comm. problem because the optimal policy for any individual node's power allocation depends on the strategies of the nodes they're communicating with.
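As a toy illustration of that last point (each node's best power level depends on what the others do), here is a minimal sketch of a power-control game on a scalar interference channel. It is deliberately simpler than a real MIMO setup: the utility function (rate minus a linear price on power), the gain matrix, and every parameter value are assumptions made up for the example, not something from a specific paper or library.

```python
import math

# Toy power-control game. Each node i picks a transmit power p_i to maximise
#   u_i = log2(1 + g_ii * p_i / (noise + sum_j g_ij * p_j)) - price * p_i
# where gains[i][j] is the (hypothetical) gain from transmitter j to receiver i.


def best_response(interference, direct_gain, price, p_max):
    """Power that maximises u_i given the interference the node currently sees.

    Setting du_i/dp_i = 0 gives p = 1 / (price * ln 2) - interference / gain,
    which is then clipped to the feasible range [0, p_max].
    """
    p = 1.0 / (price * math.log(2)) - interference / direct_gain
    return min(max(p, 0.0), p_max)


def interference_at(i, powers, gains, noise):
    """Noise plus the power arriving at receiver i from all other transmitters."""
    return noise + sum(gains[i][j] * powers[j]
                       for j in range(len(powers)) if j != i)


def solve_power_game(gains, noise, price, p_max, iters=500, tol=1e-10):
    """Iterate simultaneous best responses until the powers stop changing."""
    powers = [0.0] * len(gains)
    for _ in range(iters):
        updated = [
            best_response(interference_at(i, powers, gains, noise),
                          gains[i][i], price, p_max)
            for i in range(len(gains))
        ]
        if max(abs(a - b) for a, b in zip(updated, powers)) < tol:
            return updated
        powers = updated
    return powers


# Illustrative two-node network with weak cross-interference.
example_gains = [[1.0, 0.2],
                 [0.3, 1.0]]
equilibrium = solve_power_game(example_gains, noise=0.1, price=0.5, p_max=5.0)
print(equilibrium)
```

The returned powers form a Nash equilibrium of this toy game in the sense that neither node can improve its own utility by changing its power alone. The iteration is only expected to settle when cross gains are small relative to direct gains, as in the example; with strong interference it may oscillate.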

That makes sense, thank you.

That's my assessment of game theory. There are probably hidden depths I don't understand but from the outside it looks like "I am going to write the rules of a "game" that proves things I already believe are natural laws and I will ignore or dismiss any external complications that make my game bogus and make me look like a silly ass"

Even at our worst, humanity never tried to kill all of a new group that they encountered. Trade and diplomacy (admittedly with a wide range of violence underpinning it) has always been the human M.O. with only a handful of very rare exceptions.

Even European genocide of Native Americans started off with intentions of trade. In fact, it was really only British colonization, carried on by their American and Canadian descendants, that openly tried to exterminate the natives. French colonization (outside of Caribbean slave plantations) was mainly focused on trade networks centered around frontier outposts. Spain came in and toppled the existing power structures in Meso- and South America and ruled over the deposed empires.

Encounters with advanced alien races wouldn't result in the intentional destruction of humanity, but instead would likely end up with total upheaval of our society, and the powers that be are terrified of that.

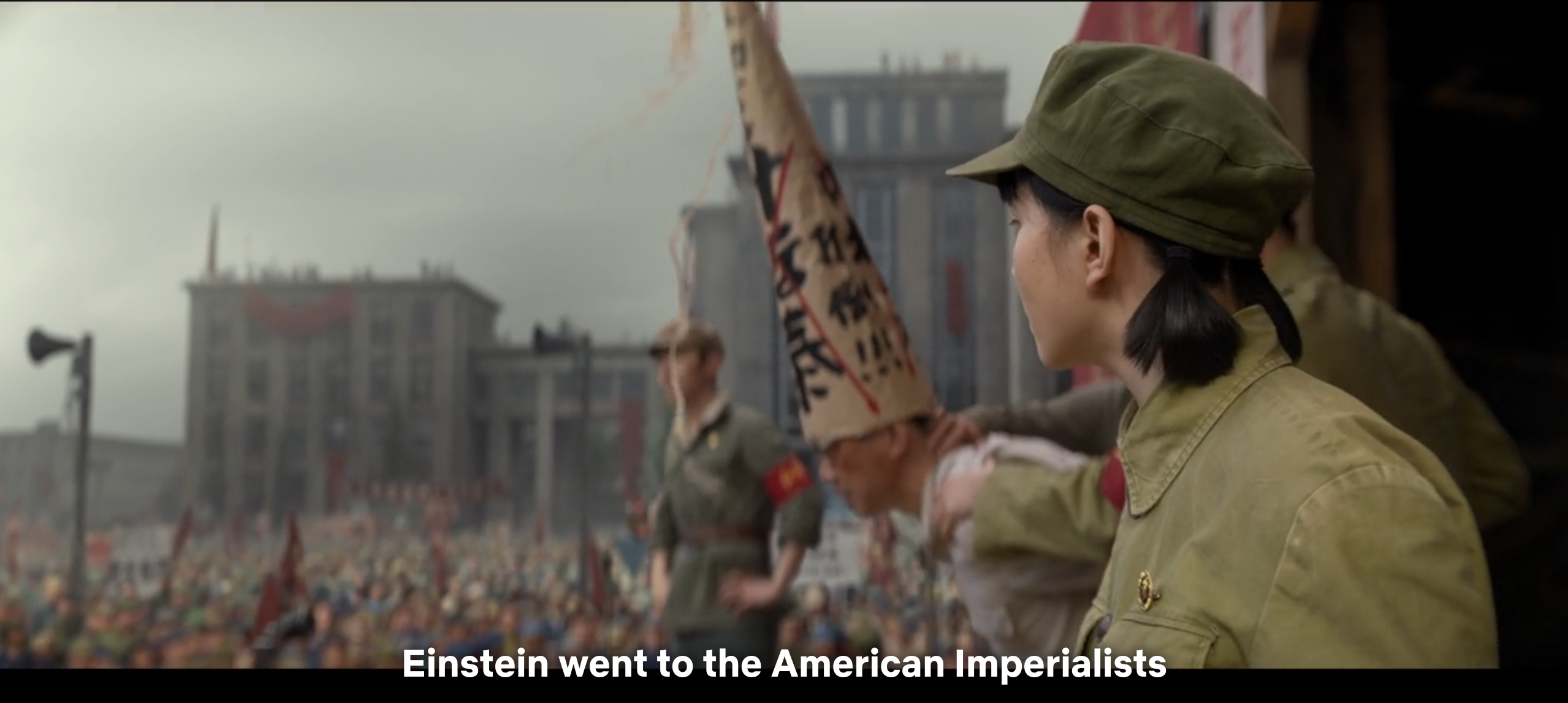

Like Yun Tianming’s fairy tales, Liu Cixin’s story is coded with multiple layers of meaning.

Most Westerners will probably only grasp the most primitive layer of the story - a sci-fi fairy tale.

But there is certainly a deeper layer of meaning that only Chinese readers with the collective memory of Chinese history could understand. The whole Three-Body Problem series including the Dark Forest is a critique of the naivety of the Chinese civilization and its oscillating attitudes toward its former oppressors (Imperial Japan, and now the US) over time.

Remember, the book was written in the early 2000s, in the wake of the period when US-China relations were at their worst ever, from 1999 to 2001.

I mean yeah I got that, but no one collapsed the Pacific into a one-dimensional line. "The dark forest", as a concept, is silly whether you try to apply it to interstellar contact or to 20th century geopolitics.

It’s not something that can be proven or disproved, since we cannot feasibly observe the effects of the Dark Forest at the scale of the universe anyway. Nonetheless, it is a compelling theory.

The theory as put forth in the book series is predicated upon the assumptions that “communication between civilizations light years apart necessarily leads to a chain of suspicion” and “technological explosion can lead to exponential advancements”.

This is fundamentally different from the geopolitical tensions that take place on Earth. Even with shared physiology, near-instant communication, translatable languages and cultures that are shared across civilizations, humanity still went through the horrors of wars that could have led to the extinction of our species.

Now, imagine two entirely different civilizations that are light years apart, where communications could take years to transpire, where the fundamental biology of the species of both civilizations could be so far apart that attempts to understand one another are rendered extremely difficult, and where, given enough time, the lesser civilizations could even overtake the better ones in a few short generations in terms of technological advancements.

Think, for example, of two human civilizations that are a number of light years apart. How can we even predict what kind of human society the inhabitants of the other planet would develop into in, say, 200 years? Can we even predict what kind of political system it would be? Even if we had made contact with a benign civilization, in 200 years, due to various external factors such as climate change, that benign civilization could easily have been replaced by a fascist one with malign intentions. And that’s considering that they are humans like us and think similarly in many ways.

Now, consider two completely alien species with far fewer biological and cultural similarities, where we cannot even begin to comprehend the kind of thoughts the other species could have. How do we know if they tell the truth or if they are being deceptive? We can do this with other humans to a certain extent, because of our shared biological and cultural contexts, but with a completely alien species? Even disregarding technological advancements, how do we even know what kind of societal and cultural changes their species could undergo in a number of generations, considering we have so few reference points (perhaps even none) to work with?

The question, then, is what would be the better strategy for survival? One that exposes your own position, or one that hides from the others about your own existence?

Note that the Dark Forest (at least in the book series) does not say that every civilization is out there to kill one another. It simply says that even if only 1% of the civilizations think like this, and because of technological explosiveness, they could obtain weaponry that can end solar systems and galaxies with relatively low cost, then they would not be averse to use it.

Here on Earth, we know that ants could never overtake us on a technological level. But what if those ants (across the grand scale of the universe) could become even more advanced than us in just a few hundred years? Some civilizations would prefer to stamp out those ants before they even made it to the next stage of development.

Even in our own history, as soon as the atomic bomb had been developed in the US, there were people in the upper ranks crazy enough to want to use it to nuke other countries. MacArthur famously wanted to nuke China during the Korean War. What stopped them was the USSR testing its own atomic bomb in 1949, merely 4 years after the first atomic bombs were used to devastate Japanese cities. But between species with much larger technological disparity, we cannot easily say that it would be the same for them. After all, how many plants and animals and various organisms have humans forced into extinction without even sparing a moment of thought for them?

So, even if 99% of the civilizations in the universe are rational, they still have to fear the last 1%, and along the grand scale of the universe, exposing your own position bears a much larger risk of getting yourself wiped out by external forces than hiding your presence from the rest of the universe.
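The arithmetic behind the "last 1%" worry can be sketched in a few lines. This assumes each civilization is independently hostile with the same probability, and the civilization counts are made-up illustrative numbers; whether any such civilization could actually find or reach you is a separate question this does not address.

```python
def prob_at_least_one_hostile(hostile_fraction, num_civilizations):
    """Chance that at least one of N independent civilizations is hostile.

    P(at least one) = 1 - P(none) = 1 - (1 - f) ** N
    """
    return 1.0 - (1.0 - hostile_fraction) ** num_civilizations


# Hypothetical counts of civilizations that could notice a broadcast.
for n in (10, 100, 1000):
    p = prob_at_least_one_hostile(0.01, n)
    print(f"{n:>5} civilizations -> {p:.4f}")
```

With a 1% hostile fraction this works out to roughly a 10% chance at 10 civilizations, about 63% at 100, and near certainty at 1000, which is the sense in which a small hostile minority dominates the risk once the number of listeners gets large.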

As such, civilizations that have developed the “hiding genes” would simply fare better over time than the civilizations that haven’t. This doesn’t mean that the latter cannot exist - after all, what do 1000 years of advanced civilization mean on the grand scale of the universe? You could flourish for several thousands of years, establish contact and trade with other space civilizations and still get wiped out eventually (this was actually described in the books). None of this disproves the Dark Forest, it simply means we cannot observe them and as such it will remain a conjecture.

But then the question becomes: do all the other civilizations also think the same? What if even just 1% of space-faring civilizations spread across the entire universe believe in the Dark Forest? Since we cannot possibly know, we still have to defend ourselves against that, and so we are forced to act as if it were real to begin with.

At the end of the day all it amounts to is "what if there was an evil wizard who could wave his wand and blow us up?!"

It's silly nonsense. How are you going to "hide" from the imaginary wizards who can blow up galaxies? Whatever silly star trek space magic they have is operating on levels of energy manipulation that are impossible per physics as we understand them. You're basically picking a fight against an atheist thought experiment used to convey how silly theism is. "What if Russell's Teapot and the Invisible Pink Unicorn got together and decided to beat us up? What would we do?" You wouldn't do anything, because there's no serious reason to believe those entities do or could exist, and if they did there still wouldn't be anything to be done about them.

Even the assumption that you could take any deliberate action to hide from them. If we're the ant hive risking destruction in this grand cosmic play you're saying we should try to avoid detection from imaginary enemies armed with things as incomprehensible to us as satellites and electron microscopes and gravity wave detectors are to ants. Since we're trying to protect ourselves from imaginary space wizards with impossible powers one can play along and suggest that the imaginary space wizards have equally potent methods of detection - crystal balls, scrying pools, magic mirrors, and so forth. And these methods of detection would render our attempts to hide from them just as pointless as our attempts to combat them in open battle.

It's all silly. Maybe there's a very large man in the sky who will be cross with us and punish us for being naughty. Maybe there is! So what? We can't see him, interact with him, communicate with him, or kill him, so why worry about him? We can't even guess what method he might use to perceive us.

Have you read Alastair Reynolds' Revelation Space? It tackles Dark Forest, and all of these questions you're raising, from what I found to be a much more creative, imaginative, and thought-provoking perspective. It's just as full of silly space magic as TBP, but the space magic is at least somewhat grounded with some rules that keep things modestly comprehensible, the characters are much better written, and the premise is more interesting than just "what if game theorists weren't silly asses and were right?"

I hadn't realized how much Dark Forest is the same kind of silly bs as "Roko's Basilisk" until you laid it out here. It's just another tech-bro formulation of Pascal's Wager. It's not even a thought experiment because the answer is always "there's no useful action we could take so we shouldn't waste time worrying about it." That's the whole thing with true unknown unknowns and outside context problems - you cannot, by definition, prepare for them.

We could at least conceivably work on systems to defend against rocks flying around in space. Rocks flying around in space actually exist, they're a known problem, they're not magic, we can see them (or we could) and we could build machines to nudge them away from the planet if we really wanted to.

But the Dark Forest concept isn't meaningfully distinct from sitting around saying "what if God was real and he was really, really pissed at us?"