Welcome to the FOSAI Nexus!

(v0.0.1 - Summer 2023 Edition)

The goal of this knowledge nexus is to act as a link hub for software, applications, tools, and projects that are free open-source software (FOSS) designed for AI, or FOSAI for short.

If you haven't already, I recommend bookmarking this page. I plan to update it periodically with new versions throughout the year, because this field is advancing rapidly and breakthroughs are happening weekly. I will try to keep up through the seasons and include links to each subsequent nexus post, but it's best to bookmark this one: it is the start of the content series and will give you access to all future nexus posts as I release them.

If you see something missing here that should be added, let me know. I don't have visibility over everything, and I would love your help making this nexus better. Like I said in my welcome message, I am no expert in this field, but I teach myself what I can and distill it in ways I find interesting to share with others.

I hope this helps you unblock your workflow or project and empowers you to explore the wonders of emerging artificial intelligence.

Consider subscribing to /c/FOSAI if you found any of this interesting. I do my best to make sure you stay in the know with the most important updates to all things free open-source AI.

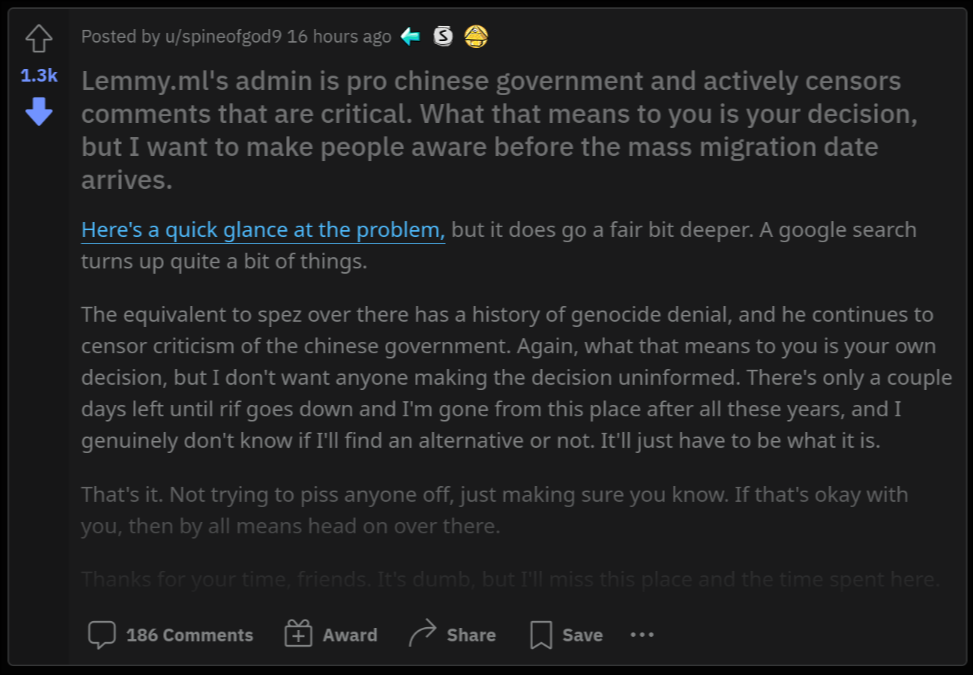

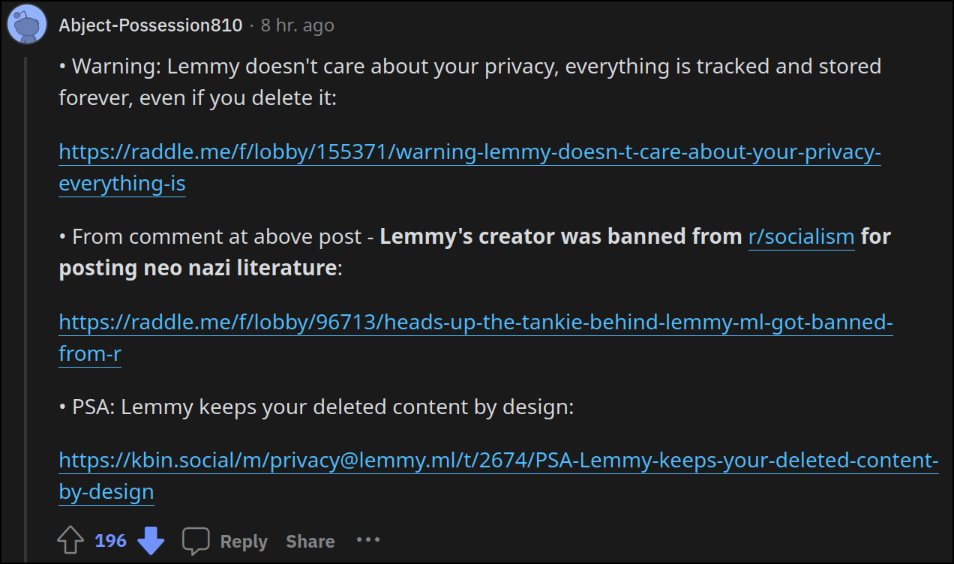

Find Us On Lemmy!

/c/FOSAI

Fediverse Resources

Lemmy

Large Language Model Hub

Download Models

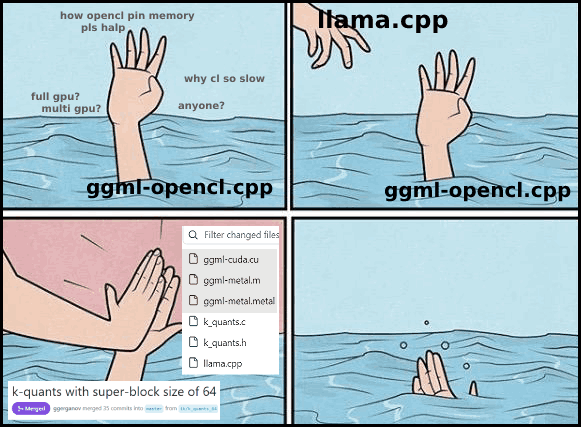

text-generation-webui - a community-favorite Gradio web UI by oobabooga, designed for running almost any free open-source large language model downloaded from HuggingFace, including (but not limited to) LLaMA, llama.cpp, GPT-J, Pythia, and OPT. Its goal is to become the AUTOMATIC1111/stable-diffusion-webui of text generation. It is compatible with many model formats.

A standalone Python/C++/CUDA implementation of Llama for use with 4-bit GPTQ weights, designed to be fast and memory-efficient on modern GPUs.
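To get a feel for why 4-bit weights matter on consumer GPUs, here is a rough back-of-the-envelope sketch. It only counts the weights themselves: it ignores the extra scale/zero-point data that quantization formats store, as well as activations and the context cache, so real usage will be higher. The function name and the example parameter counts are my own illustration, not anything taken from the project.

```python
def weight_memory_gib(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory needed for the model weights alone, in GiB.

    Assumption: every parameter is stored at the same bit width and
    no quantization metadata, activations, or cache are counted.
    """
    total_bytes = n_params * bits_per_weight / 8
    return total_bytes / (1024 ** 3)


# Compare 16-bit and 4-bit storage for two illustrative model sizes.
for name, n_params in [("7B", 7e9), ("13B", 13e9)]:
    fp16 = weight_memory_gib(n_params, 16)
    q4 = weight_memory_gib(n_params, 4)
    print(f"{name}: 16-bit ~{fp16:.1f} GiB, 4-bit ~{q4:.1f} GiB")
```

Going from 16-bit to 4-bit cuts the weight footprint to a quarter, which is often the difference between a model fitting on a single consumer card or not.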

Open-source assistant-style large language models that run locally on your CPU. GPT4All is an ecosystem to train and deploy powerful and customized large language models that run locally on consumer-grade processors.

The original software that SillyTavern was forked from. This chat interface offers very similar functionality but is less compatible with other chat clients and API interfaces than SillyTavern.

Developer-friendly, Multi-API (KoboldAI/CPP, Horde, NovelAI, Ooba, OpenAI+proxies, Poe, WindowAI(Claude!)), Horde SD, System TTS, WorldInfo (lorebooks), customizable UI, auto-translate, and more prompt options than you'd ever want or need. Optional Extras server for more SD/TTS options + ChromaDB/Summarize. Based on a fork of TavernAI 1.2.8

A self-contained distributable from Concedo that exposes llama.cpp function bindings, allowing it to be used via a simulated Kobold API endpoint. What does that mean? You get llama.cpp with a fancy UI, persistent stories, editing tools, save formats, memory, world info, author's note, characters, scenarios, and everything Kobold and Kobold Lite have to offer, all in a tiny package around 20 MB in size (excluding model weights).
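Because the server speaks a Kobold-style HTTP API, other programs can talk to it with plain JSON requests. Below is a minimal sketch of what that might look like from Python using only the standard library. Treat the specifics as assumptions to check against your own install: the base URL `http://localhost:5001`, the `/api/v1/generate` path, and the `results[0].text` response shape are based on my understanding of the KoboldAI API and may differ between versions. The helper names are hypothetical.

```python
import json
import urllib.request


def build_generate_request(prompt, max_length=80, base_url="http://localhost:5001"):
    """Build a POST request for a Kobold-style generate endpoint.

    Assumptions: base_url, the /api/v1/generate path, and the payload
    keys follow the KoboldAI API; verify them for your own server.
    """
    payload = json.dumps({"prompt": prompt, "max_length": max_length}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/v1/generate",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_text(response_body: bytes) -> str:
    """Pull the generated text out of the assumed {"results": [{"text": ...}]} shape."""
    data = json.loads(response_body)
    return data["results"][0]["text"]


def generate(prompt, **kwargs):
    """Send the prompt to a running server and return the generated text."""
    request = build_generate_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=120) as response:
        return extract_text(response.read())
```

With a server running locally, `print(generate("Once upon a time"))` would send the prompt and print the continuation. Nothing here contacts the network until `generate` is called.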

This is a browser-based front-end for AI-assisted writing with multiple local & remote AI models. It offers the standard array of tools, including Memory, Author's Note, World Info, Save & Load, adjustable AI settings, formatting options, and the ability to import existing AI Dungeon adventures. You can also turn on Adventure mode and play the game like AI Dungeon Unleashed.

h2oGPT is a large language model (LLM) fine-tuning framework and chatbot UI with document question-answer capabilities. Documents help to ground LLMs against hallucinations by providing them with context relevant to the instruction. h2oGPT is a fully permissive Apache V2 open-source project for 100% private and secure use of LLMs and document embeddings for document question-answer.
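The idea of grounding a model with documents can be sketched in a few lines. This is a toy illustration, not how h2oGPT is implemented: real systems rank passages with embeddings, while this sketch swaps in simple word overlap so it runs with no dependencies. All function names and the sample passages are made up for the example.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_chunks(question, chunks, k=2):
    """Rank passages by how many words they share with the question.

    Assumption: word overlap stands in for the embedding similarity
    that a real document question-answer system would use.
    """
    question_words = tokenize(question)
    ranked = sorted(chunks, key=lambda c: len(question_words & tokenize(c)), reverse=True)
    return ranked[:k]


def build_prompt(question, chunks, k=2):
    """Put the most relevant passages in front of the question."""
    context = "\n".join(f"- {chunk}" for chunk in top_chunks(question, chunks, k))
    return (
        "Answer using only the context below. "
        "If the answer is not there, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


docs = [
    "The warranty lasts two years.",
    "Shipping takes five days.",
    "Returns are accepted within 30 days.",
]
print(build_prompt("How long does the warranty last?", docs, k=1))
```

The resulting prompt is what gets sent to the LLM, so the model answers from the supplied passage instead of from memory alone.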

Image Diffusion Hub

Download Models

Stable Diffusion is a text-to-image diffusion model capable of generating photo-realistic and stylized images. This is the free alternative to MidJourney. It is rumored that MidJourney originates from a version of Stable Diffusion that is highly modified, tuned, then made proprietary.

With Stable Diffusion XL, you can create descriptive images with shorter prompts and generate words within images. The model is a significant advancement in image generation capabilities, offering enhanced image composition and face generation that results in stunning visuals and realistic aesthetics.

A powerful and modular stable diffusion GUI and backend. This new and powerful UI will let you design and execute advanced stable diffusion pipelines using a graph/nodes/flowchart-based interface.

ControlNet is a neural network structure to control diffusion models by adding extra conditions. This is a very popular and powerful extension to add to AUTOMATIC1111's stable-diffusion-webui.

An all-in-one solution for adding temporal stability to a Stable Diffusion render via an AUTOMATIC1111 extension. You must install FFmpeg and add it to your PATH before running this.
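If you are not sure whether FFmpeg is actually on your PATH, a quick check like the one below can save some debugging. It is a small sketch using Python's standard library; the helper name is my own, and it assumes the executable is called `ffmpeg` on your system.

```python
import shutil


def find_on_path(executable="ffmpeg"):
    """Return the full path to the executable, or None if PATH lookup fails."""
    return shutil.which(executable)


location = find_on_path()
if location:
    print(f"ffmpeg found at {location}")
else:
    print("ffmpeg not found on PATH; install it and add its folder to PATH")
```

Run it from the same terminal or environment you use to launch the web UI, since PATH can differ between shells.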

Bring your paintings to animated life. This software can be used in conjunction with StableDiffusion + ControlNet + TemporalKit workflows.

A TemporalKit alternative to produce video effects and animation styling.

Training & Education

LLMs

Diffusers

Bonus Recommendations

AI Business Startup Kit

LLM Learning Material from the Developer of SuperHOT (kaiokendev):

Here are some resources to help with learning LLMs:

Andrej Karpathy’s GPT from scratch

Huggingface’s NLP Course

And for training specifically:

Alpaca LoRA

Vicuna

Community training guide

For papers, of course, I recommend reading anything on arXiv’s CS - Computation & Language that looks interesting to you: https://arxiv.org/list/cs.CL/recent.

Support Developers!

Please consider donating, subscribing to, or buying a coffee for any of the major community developers advancing Free Open-Source Artificial Intelligence.

If you're a developer in this space and would like to have your information added here (or changed), please don't hesitate to message me!

TheBloke

Oobabooga

Eric Hartford

kaiokendev

Major FOSAI News & Breakthroughs

Looking for other open-source projects based on these technologies? Consider checking out this GitHub Repo List I made based on stars I have collected throughout the last year or so.