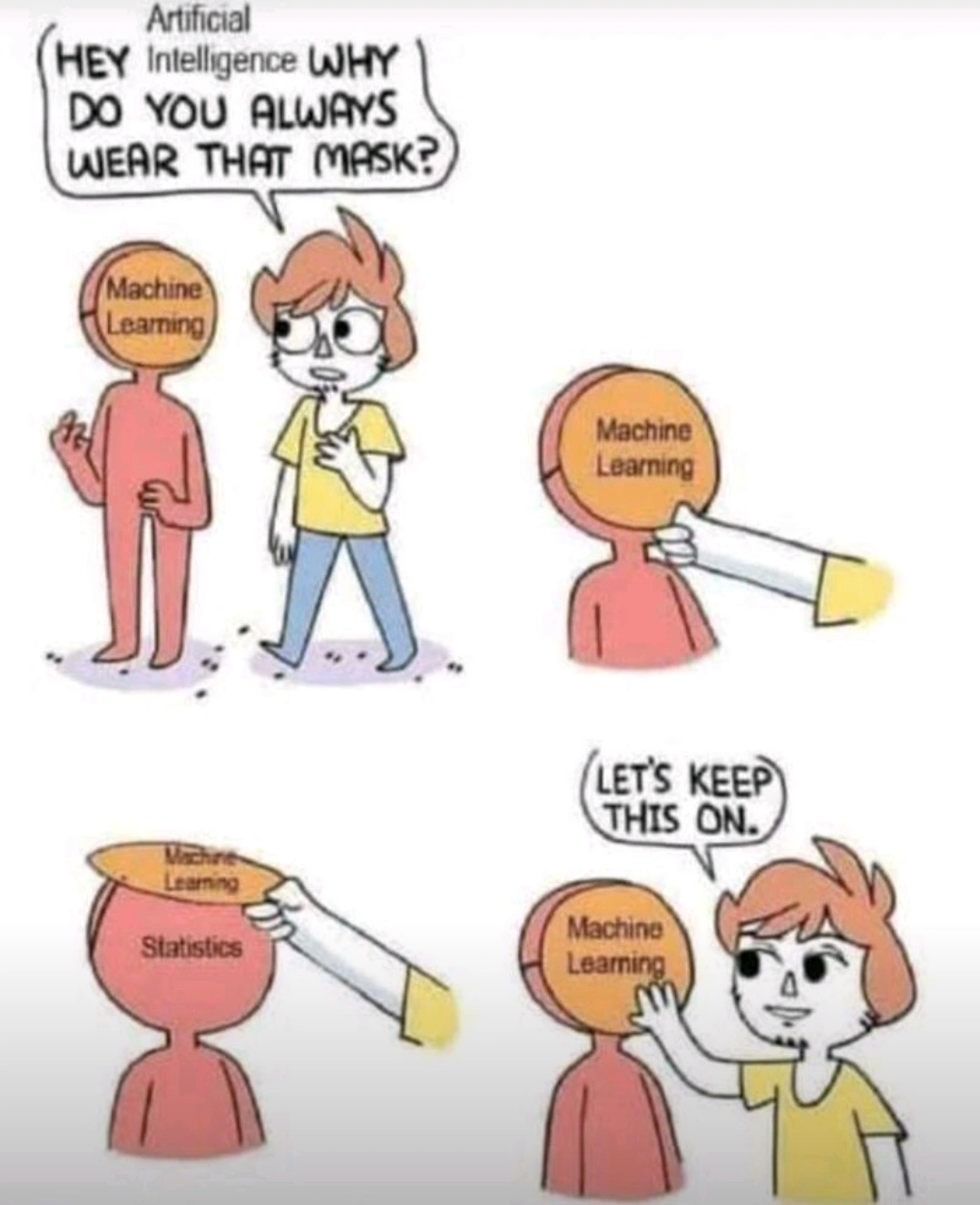

The meme would work just the same with the "machine learning" label replaced with "human cognition."

Science Memes

Welcome to c/science_memes @ Mander.xyz!

A place for majestic STEMLORD peacocking, as well as memes about the realities of working in a lab.

Rules

- Don't throw mud. Behave like an intellectual and remember the human.

- Keep it rooted (on topic).

- No spam.

- Infographics welcome, get schooled.

This is a science community. We use the Dawkins definition of meme.


Have to say that I love how this idea congealed into "popular fact" as soon as people's paychecks started relying on massive investor buy-in to LLMs.

I have a hard time believing that anyone truly convinced that humans operate as stochastic parrots or statistical analysis engines has any significant experience interacting with other human beings.

Less dismissively, are there any studies that actually support this concept?

Speaking as someone whose professional life depends on an understanding of human thoughts, feelings and sensations, I can't help but have an opinion on this.

To offer an illustrative example:

When I'm writing feedback for my students, which is a repetitive task with individual elements, it's original and different every time.

And yet, anyone reading it would soon learn to recognise my style, just as they could learn to recognise anyone else's, or as many people have already learned to spot text written by AI.

I think it's fair to say that this is because we do have a similar system for creating text, especially in response to a given prompt, just like these things called AI. This is why people who read a lot develop their writing skills and style.

But, and this is really significant, that's not all I have. There's so much more than that going on in a person.

So you're both right, in a way, I'd say. This is how humans develop their individual style of expression: through data collection and stochastic methods, happening outside of awareness. But, as you suggest, just because humans can do this doesn't mean the two structures are the same.

Idk. There’s something going on in how humans learn which is probably fundamentally different from current ML models.

Sure, humans learn from observing their environments, but they generally don’t need millions of examples to figure something out. They’ve got some kind of heuristics or other ways of learning things that lets them understand many things after seeing them just a few times or even once.

Most of the progress in ML models in recent years has been the discovery that you can get massive improvements with current models by just feeding them more and more data. Essentially brute force. But there’s a limit to that, either because there might be a theoretical point where the gains stop, or because of the more practical issue of only having so much data and compute.

There’s almost certainly going to need to be some kind of breakthrough before we’re able to get meaningfully further than we are now, let alone match human cognition.

At least, that’s how I understand it from the classes I took in grad school. I’m not an expert by any means.

I would say that what humans do to learn has some elements of some machine learning approaches (a Naive Bayes classifier comes to mind) on an unconscious level. But humans have a wild mix of different approaches to learning, and even a single human employs many ways of capturing knowledge. Also, the imperfect and messy ways that humans capture and store knowledge are a critical feature of humanness.

I think we have to at least add the capacity to create links that were not learned through reasoning.

The big difference between people and LLMs is that an LLM is static. It goes through a learning (training) phase as a singular event. Then going forward it's locked into that state with no additional learning.

A person is constantly learning. Every moment of every second we have a ton of input feeding into our brains as well as a feedback loop within the mind itself. This creates an incredibly unique system that has never yet been replicated by computers. It makes our brains a dynamic engine as opposed to the static and locked state of an LLM.

I'd love to hear about any studies explaining the mechanism of human cognition.

Right now it's looking pretty neural-net-like to me. That's kind of where we got the idea for neural nets from in the first place.

It's not specifically related, but biological neurons and artificial neurons are quite different in how they function. Neural nets are a crude approximation of the biological version. That doesn't mean they can't solve similar problems or achieve similar levels of cognition, just that about the only similarity they have is "network of input/output things".

At every step of modern computing people have thought that the human brain looks like the latest new thing. This is no different.

If by "human cognition" you mean "tens of millions of impoverished people manually checking and labeling images and text so that the AI can pretend to exist," then yes.

If you mean "it's a living, thinking being," then no.

My dude it's math all the way down. Brains are not magic.

There's a lot we understand about the brain, but there is so much more we don't understand about the brain and "awareness" in general. It may not be magic, but it certainly isn't 100% understood.

Eh. Even heat is a statistical phenomenon, at some reference frame or another. I've developed model-dependent apathy.

Bayesian purist cope and seethe.

Most machine learning is closer to universal function approximation via autodifferentiation. Backpropagation just lets you create numerical models with insane parameter dimensionality.

I like your funny words, magic man.

erm, in English, please!

Universal function approximation - the property that neural networks with enough neurons can approximate essentially any continuous function.

Auto-differentiation - algorithmic calculation of partial derivatives (aka gradients)

Backpropagation - when training a neural network (or most ML algorithms, actually), you compute the difference between the model's prediction and the original labels (the loss). That difference is then sent backward through the network as gradients of the loss function with respect to each weight.

Parameter dimensionality - the number of parameters in the network, i.e., the entries of the weight matrices that connect the "neurons".
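For anyone who wants the glossary above in code: below is a minimal sketch of reverse-mode auto-differentiation, the mechanism backpropagation relies on, in Python. The `Value` class, the `squared_error` helper, and all the numbers are hypothetical teaching choices, not any real framework's API. Each operation records how to pass its gradient back to its inputs, and `backward()` replays those steps in reverse order.

```python
class Value:
    """A scalar that remembers how it was computed, so gradients can flow backward."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward_fn = None  # pushes self.grad into the parents' .grad

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))
        def backward_fn():
            self.grad += out.grad   # d(a+b)/da = 1
            other.grad += out.grad  # d(a+b)/db = 1
        out._backward_fn = backward_fn
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))
        def backward_fn():
            self.grad += other.data * out.grad  # d(a*b)/da = b
            other.grad += self.data * out.grad  # d(a*b)/db = a
        out._backward_fn = backward_fn
        return out

    def __sub__(self, other):
        return self + other * -1

    def relu(self):
        out = Value(max(0.0, self.data), (self,))
        def backward_fn():
            self.grad += (1.0 if self.data > 0 else 0.0) * out.grad
        out._backward_fn = backward_fn
        return out

    def backward(self):
        # Topological order, so each node's gradient is complete before it is passed on.
        order, seen = [], set()
        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)
        visit(self)
        self.grad = 1.0  # d(loss)/d(loss)
        for node in reversed(order):
            if node._backward_fn is not None:
                node._backward_fn()


def squared_error(w, b, x, target):
    # One "neuron": prediction = relu(w*x + b), loss = (prediction - target)^2
    diff = (w * x + b).relu() - target
    return diff * diff


w, b = Value(0.5), Value(0.5)
loss = squared_error(w, b, x=2.0, target=3.0)
loss.backward()
print(loss.data, w.grad, b.grad)  # 2.25 -6.0 -3.0

# One gradient-descent step: nudge each weight against its gradient.
learning_rate = 0.1
w.data -= learning_rate * w.grad
b.data -= learning_rate * b.grad
print(squared_error(w, b, x=2.0, target=3.0).data)  # much smaller than 2.25
```

Frameworks do the same bookkeeping over whole weight matrices instead of single scalars, which is what makes millions of parameters tractable.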

If that's your argument, it's worse than statistics, imo. At least statistics has solid theorems and proofs (albeit under very controlled distributions). All DL has right now is a bunch of papers, most often published by large tech companies, which may or may not work for the problem you’re working on.

The universal approximation theorem is pretty dope, though. I'm not saying ML isn’t interesting; some of it is, but most of it is meh. It’s fine.

iT's JuSt StAtIsTiCs

But it is, and it always has been. Absurdly complexly layered statistics, calculated faster than a human could.

This whole "we can't explain how it works" is bullshit from software engineers too lazy to unwind the emergent behavior caused by their code.

I agree with your first paragraph, but unwinding that emergent behavior really can be impossible. It's not just a matter of taking spaghetti code and deciphering it, ML usually works by generating weights in something like a decision tree, neural network, or statistical model.

Assigning any sort of human logic to why particular weights ended up where they are is educated guesswork at best.


Well sure, but as someone else said, even heat is statistics. Saying "ML is just statistics" is so reductionist as to be meaningless. Heat is just statistics. Biology is just physics. Forests are just trees.

It’s like saying a jet engine is essentially just a wheel and axle rotating really fast. I mean, it is, but it’s shaped in such a way that it’s far more useful than just a wheel.

It's totally statistics, but that second paragraph really isn't how it works at all. You don't "code" neural networks the way you code up a website or a game. There's no "if (userAskedForThis) {DoThis()}". All the coding you do in neural networks is defining a model and a training process, and that's it; before training, the model's behavior is completely random.

The neural network engineer isn't directly coding up behavior. They're architecting the model (random weights by default), setting up an environment (training and evaluation datasets, tweaking some training parameters), and letting the model's weights be trained, or "fit," to the data. Its behavior isn't designed; the virtual environment it evolved in was. Bigger, cleaner datasets, model architectures suited to the data, and an appropriate number of training iterations (epochs) can improve results, but the results will never be perfect, just an approximation.
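A minimal Python sketch of that workflow, under illustrative assumptions: the "architecture" is a two-parameter linear model y = w*x + b with random starting weights, the "environment" is a synthetic dataset (a hidden rule y = 2x + 1 plus noise) split into training and evaluation sets, and plain gradient descent fits the weights over a fixed number of epochs. The names `make_dataset` and `train`, the learning rate, and the epoch count are all hypothetical.

```python
import random


def make_dataset(n, rng):
    # Synthetic data from a hidden rule y = 2x + 1 plus a little Gaussian noise.
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, 2.0 * x + 1.0 + rng.gauss(0.0, 0.05)) for x in xs]


def mse(w, b, data):
    # Mean squared error of the model y = w*x + b on a dataset.
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(epochs=200, learning_rate=0.1, seed=0):
    rng = random.Random(seed)
    data = make_dataset(100, rng)
    train_set, eval_set = data[:80], data[80:]

    # "Architecture": two parameters, randomly initialised. No behavior is written by hand.
    w, b = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)

    for _ in range(epochs):
        # Gradients of the training-set MSE with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in train_set) / len(train_set)
        grad_b = sum(2 * (w * x + b - y) for x, y in train_set) / len(train_set)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b

    # Report performance on data the model never trained on.
    return w, b, mse(w, b, eval_set)


w, b, eval_loss = train()
print(f"w={w:.2f}, b={b:.2f}, eval MSE={eval_loss:.4f}")
```

Nothing in the loop says what w and b should be; the values come out of the fit. They should land close to 2 and 1 but not exactly on them, because of the noise and the finite data.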

This whole "we can't explain how it works" is bullshit

Mostly it’s just millions of combinations of y = k*x + m with y = max(0, x) between. You don’t need more than high school algebra to understand the building blocks.

What we can’t explain is why it works so well. It’s difficult to understand how the information is propagated through all the different pathways. There are some ideas, but it’s not fully understood.
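To make the high-school-algebra point concrete, here is a hypothetical, hand-weighted network in Python with two hidden units. Each unit computes y = k*x + m followed by y = max(0, x), and the output layer is one more y = k*x + m over the hidden values. With these particular weights it computes |x| exactly, because |x| = max(0, x) + max(0, -x); a trained network would find its own weights instead of having them set by hand, and would use many more units.

```python
def relu(x):
    # The nonlinearity between layers: y = max(0, x)
    return max(0.0, x)


def tiny_network(x):
    # Hidden layer: two units, each an affine map y = k*x + m followed by ReLU.
    hidden = [relu(1.0 * x + 0.0), relu(-1.0 * x + 0.0)]
    # Output layer: another affine map, here a weighted sum of the hidden units.
    output_weights = [1.0, 1.0]
    output_bias = 0.0
    return sum(k * h for k, h in zip(output_weights, hidden)) + output_bias


for x in [-2.0, -0.5, 0.0, 1.5]:
    print(x, tiny_network(x))  # second column equals abs(x)
```

Stacking many such pieces gives piecewise-linear functions with a huge number of kinks, which is the building block behind the curve-fitting view of neural networks.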

It's curve fitting

But it's fitting to millions of sets in hundreds of dimensions.

or stolen data

**AND stolen data

Ftfy

Yes, this is much better because it reveals the LLMs are laundering bias from a corpus of dimwittery.

This is exactly how I explain AI (i.e., what the current AI buzzword refers to) to common folk.

And what that means in terms of use cases.

When you indiscriminately take human outputs (knowledge? opinions? excrements?) as an input, an average is just a shitty approximation of pleb opinion.

My biggest issue is that a lot of physical models for natural phenomena are being solved using deep learning, and I am not sure how that helps deepen our understanding of the natural world. I am for DL solutions, but maybe they would benefit from being explainable in some form. For example, it’s kinda old, but I really like all the work around Grad-CAM and its successors https://arxiv.org/abs/1610.02391

How is it different from using numerical methods to find solutions to problems for which analytic solutions are difficult, infeasible, or simply impossible to find?

Any tool that helps us understand our universe. All models suck. Some of them are useful nevertheless.

I admit my bias in this problem space, though: I’m an AI engineer, classically trained in physics and engineering.

In my experience, papers that propose numerical solutions cover the methodology (which relates to some underlying physical phenomenon) in great detail, and also explain the boundary conditions of their solutions. ML/DL papers tend to go over the network architecture in great detail, because the network construction is the methodology. But the problem, I think, is that there’s a disconnect going from raw data to features to outputs. I think physics-informed ML models are trying to close this gap somewhat.