this post was submitted on 08 Jul 2024

Science Memes

Welcome to c/science_memes @ Mander.xyz!

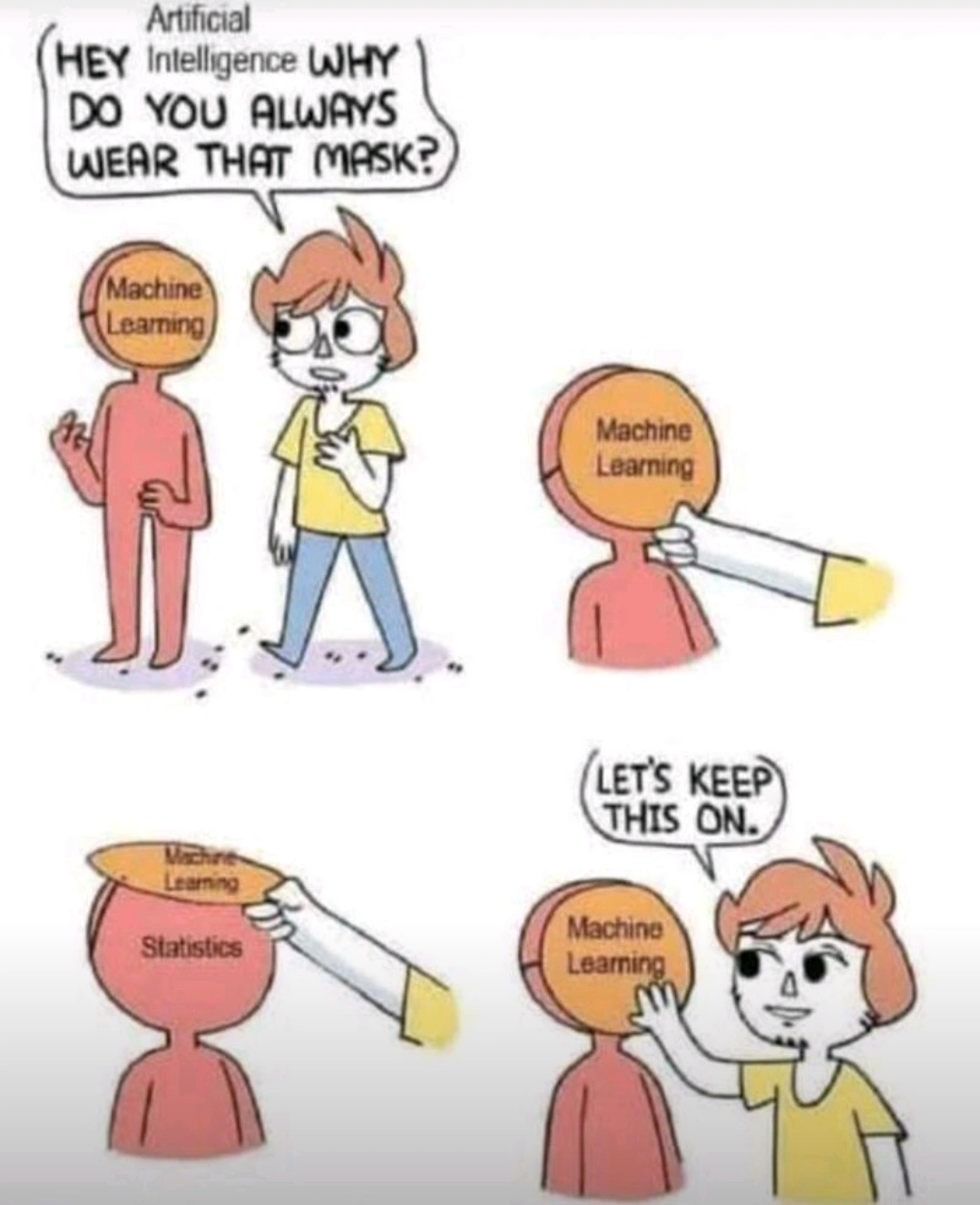

I wouldn't say it is statistics; statistics is much more precise in how it quantifies uncertainties. AI depends more on calculus, or rather automatic differentiation, which is also cool but isn't statistics.
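For anyone wondering what "automatic differentiation" means in practice, here is a minimal sketch using forward-mode dual numbers. The class and helper names are made up for illustration. Deep learning frameworks mostly use reverse mode (backpropagation), which is cheaper when there are many parameters and a single scalar loss; forward mode is just the shortest self-contained way to show derivatives being computed exactly by the chain rule rather than estimated.

```python
import math
from dataclasses import dataclass


@dataclass
class Dual:
    """A number val + der*eps with eps**2 == 0; `der` carries the derivative."""
    val: float
    der: float = 0.0

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # Sum rule: (u + v)' = u' + v'
        return Dual(self.val + other.val, self.der + other.der)

    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # Product rule: (uv)' = u'v + uv'
        return Dual(self.val * other.val, self.der * other.val + self.val * other.der)

    __rmul__ = __mul__


def tanh(x: Dual) -> Dual:
    t = math.tanh(x.val)
    # Chain rule: d/dx tanh(u) = (1 - tanh(u)**2) * u'
    return Dual(t, (1.0 - t * t) * x.der)


def derivative(f, x: float) -> float:
    """Evaluate f at x with the seed der=1 and read off f'(x)."""
    return f(Dual(x, 1.0)).der


# Toy "neuron" with weight w: f(w) = tanh(3*w + 1)
f = lambda w: tanh(3 * w + 1)
print(derivative(f, 0.0))  # exact derivative 3 * (1 - tanh(1)**2)
```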

Just because you don't know what the uncertainties are doesn't mean they're not there.

Most optimization problems can trivially be turned into statistics problems.

Sure, if you mean turning your error function into some sort of likelihood by choosing probability distributions that correspond to your error function.
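The standard example of that correspondence, sketched under the assumption of i.i.d. Gaussian noise with a fixed, known σ: the negative log-likelihood equals the sum of squared errors divided by 2σ², plus a constant that doesn't depend on the parameters, so least squares and maximum likelihood pick the same fit. The toy data and function names below are made up for illustration.

```python
import math
from statistics import NormalDist


def sse(a, b, xs, ys):
    """Sum of squared errors for the line y = a*x + b."""
    return sum((y - (a * x + b)) ** 2 for x, y in zip(xs, ys))


def gaussian_nll(a, b, xs, ys, sigma=1.0):
    """Negative log-likelihood, assuming y_i ~ Normal(a*x_i + b, sigma**2), i.i.d."""
    return -sum(math.log(NormalDist(a * x + b, sigma).pdf(y)) for x, y in zip(xs, ys))


def least_squares(xs, ys):
    """Closed-form ordinary least squares slope and intercept."""
    n = len(xs)
    xbar, ybar = sum(xs) / n, sum(ys) / n
    a = sum((x - xbar) * (y - ybar) for x, y in zip(xs, ys)) / sum((x - xbar) ** 2 for x in xs)
    return a, ybar - a * xbar


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 7.1, 8.8]  # made-up toy data
sigma = 1.0
n = len(xs)

# NLL = SSE / (2 sigma^2) + const, where const does not depend on (a, b).
const = 0.5 * n * math.log(2 * math.pi * sigma**2)
for a, b in [(0.0, 0.0), (1.5, 2.0), (2.0, 1.0)]:
    assert math.isclose(gaussian_nll(a, b, xs, ys, sigma), sse(a, b, xs, ys) / (2 * sigma**2) + const)

# Hence the least-squares fit is also the maximum-likelihood fit.
a_hat, b_hat = least_squares(xs, ys)
print(a_hat, b_hat)
```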

But that is only the beginning. Apart from Bayesian neural networks, maybe, I haven't seen much, if any, work on things like confidence intervals for the weights or prediction intervals for the response (that said, I only follow this topic casually).
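For contrast, this is the kind of output classical statistics gives you essentially for free with a simple linear model: a prediction interval for a new response, ŷ₀ ± q · s · √(1 + 1/n + (x₀ − x̄)²/Sxx). A minimal sketch, assuming Gaussian noise; to stay within the standard library it uses a normal quantile q, which is a large-sample approximation (the exact choice under this model is the Student t quantile with n − 2 degrees of freedom). The data is synthetic and the function names are hypothetical.

```python
import math
import random
from statistics import NormalDist


def fit_line(xs, ys):
    """Ordinary least squares for y = a*x + b; returns (a, b, s, xbar, sxx)."""
    n = len(xs)
    xbar = sum(xs) / n
    ybar = sum(ys) / n
    sxx = sum((x - xbar) ** 2 for x in xs)
    a = sum((x - xbar) * (y - ybar) for x, y in zip(xs, ys)) / sxx
    b = ybar - a * xbar
    # Residual standard error with n - 2 degrees of freedom (two fitted parameters).
    s = math.sqrt(sum((y - (a * x + b)) ** 2 for x, y in zip(xs, ys)) / (n - 2))
    return a, b, s, xbar, sxx


def prediction_interval(x0, xs, ys, level=0.95):
    """Approximate interval for a *new* response at x0 (normal quantile, large n)."""
    a, b, s, xbar, sxx = fit_line(xs, ys)
    n = len(xs)
    y_hat = a * x0 + b
    q = NormalDist().inv_cdf(0.5 + level / 2)
    # The interval widens as x0 moves away from the mean of the training inputs.
    half_width = q * s * math.sqrt(1 + 1 / n + (x0 - xbar) ** 2 / sxx)
    return y_hat - half_width, y_hat + half_width


# Synthetic data: y = 2x + 1 plus Gaussian noise (sd 0.5), fixed seed.
rng = random.Random(0)
xs = [i / 10 for i in range(200)]
ys = [2 * x + 1 + rng.gauss(0, 0.5) for x in xs]
lo, hi = prediction_interval(5.0, xs, ys)
print(f"95% prediction interval at x=5: [{lo:.2f}, {hi:.2f}]")
```

Nothing comparable drops out of a plain gradient-descent fit of a neural network, which is roughly the gap being pointed at.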

One of the better uses I've seen involved using this perspective to turn what was effectively a maximum likelihood fit into a full Gaussian model to make the predicted probabilities more meaningful.

Not that it really matters much how the perspective is used; what's important is that it's there.