~~Kendrick~~ Zitron dropped; it's mainly focused on Prabhakar Raghavan's recent kicking upstairs and Google's bleak future.

Main highlight was this snippet:

> I am hypothesizing here, but I think that Google is desperate, and that its earnings on October 30th are likely to make the street a little worried. The medium-to-long-term prognosis is likely even worse. As the Wall Street Journal notes, Google's ad business is expected to dip below 50% market share in the US in the next year for the first time in more than a decade, and Google's gratuitous monopoly over search (and likely ads) is coming to an end. It’s more than likely that Google sees AI as fundamental to its future growth and relevance.

Okay, personal thoughts:

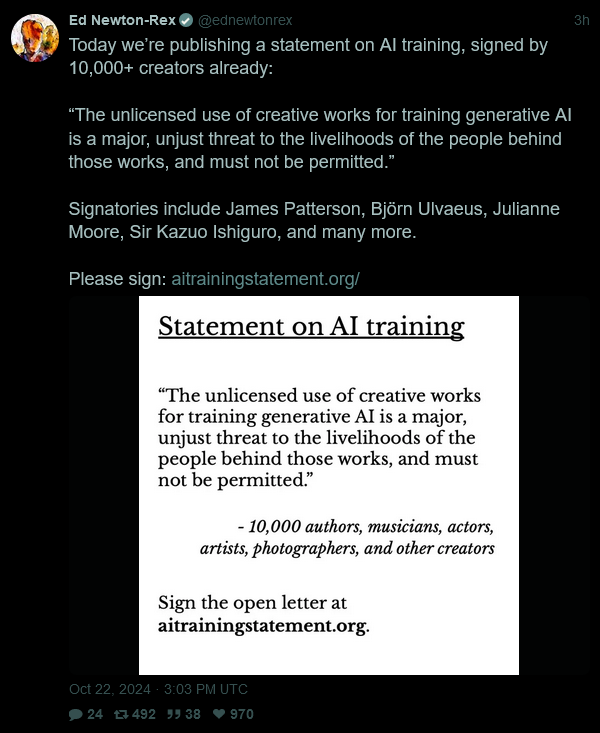

This is just gut instinct, but it feels like generative AI is going to end up a legal minefield once the many lawsuits facing OpenAI and others wrap up. Between Tennessee's ELVIS Act, the proposed federal COPIED Act, the solid case for denying fair use protection, and the absolute flood of lawsuits coming down on the AI industry, I suspect would-be investors will come to see gen-AI as legally risky at best and a lawsuit generator at worst.

Also, Musk would've been much better off commissioning someone to make the image he wanted rather than grabbing a screencap Alcon openly said he was not allowed to use and laundering it through some autoplag. Moral and legal issues aside, it would have given us something much less ugly to look at.