BlueMonday1984

The proposal itself does still assume that AI scrapers are being run by decent human beings with functioning moral compasses, which is why I feel it's inadequate.

This take might be overly harsh on AI/tech as a whole, but at this point I've run out of patience regarding this bubble and see no reason to believe anyone in the AI space is a decent human being, at least for the time being.

Not a sneer, but a mildly interesting open letter:

A specification for those who want content searchable on search engines, but not used for machine learning.

The basic idea is effectively an extension of robots.txt which attempts to resolve the issue by providing a means to politely ask AI crawlers not to scrape your stuff.

Personally, I don't expect this to ever get off the ground or see much usage - this proposal is entirely reliant on trusting that AI bros/companies will respect people's wishes and avoid scraping shit without people's permission.

Between OpenAI publicly wiping their asses with robots.txt, Perplexity lying about user agents to steal people's work, and the fact that a lot of people's work got stolen before anyone even had the opportunity to say "no", the trust necessary for this shit to see any public use is entirely gone, and likely has been for a while.
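For anyone who hasn't poked at robots.txt before, here's a minimal sketch of why "politely ask" is the operative phrase. It uses Python's standard-library `urllib.robotparser` and illustrates plain robots.txt, not the proposal's own syntax. The `GPTBot` token (OpenAI's documented crawler name), the example URL, and the `polite_crawler_may_fetch` helper are hypothetical picks for the example. The key point is that the check runs entirely on the crawler's side.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt: ask OpenAI's GPTBot to stay out entirely,
# while leaving every other crawler (e.g. search engines) unrestricted.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

URL = "https://example.com/blog/post"  # hypothetical page


def polite_crawler_may_fetch(user_agent: str, url: str) -> bool:
    """Hypothetical helper: True if robots.txt permits user_agent to fetch url.

    This check lives inside the crawler. Nothing forces a crawler to call it,
    and nothing stops it from announcing a different User-Agent name.
    """
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


print(polite_crawler_may_fetch("GPTBot", URL))        # False: asked to stay out
print(polite_crawler_may_fetch("Googlebot", URL))     # True: falls through to "*"
print(polite_crawler_may_fetch("SomeOtherBot", URL))  # True: an unlisted name walks right in
```

Note the last case: a crawler that simply announces itself under a name the site didn't list sails straight past the block, which is more or less the user-agent trick described above.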

(I completely agree, btw, with the observation about how the AI industry has made tech synonymous with “monstrous assholes” in a non-trivial chunk of public consciousness.)

Thinking about it, that's probably gonna have some long-lasting aftereffects - I'm not sure exactly what shape those aftereffects will take, but I imagine they're gonna be quite major.

I'd personally consider that sufficient grounds to accuse Proton of stealing its customers' data.

At the (minuscule) risk of sounding unnecessarily harsh on tech, any customer data that gets sent to company servers without the customer's explicit, uncoerced permission should be considered stolen.

Study shock! AI hinders productivity and makes working worse [Thomas Claburn, The Register]

Personal opinion: c'mon bubble

"Positive is that if you didn't tell someone it was GenAI, they might not notice!"

Nah, they'd be able to immediately tell from just how fucking garbage it is

Sounds like they'd be right at home on awful (barring the rats, of course :P)

ChatGPT's new search feature hasn't even launched and already it's shitting the bed

The sneers are writing themselves

AI companies work around this by paying human classifiers in poor but English-speaking countries to generate new data. Since the classifiers are poor but not stupid, they augment their low piecework income by using other AIs to do the training for them. See, AIs can save workers labor after all.

On the one hand, you'd think the AI companies would check to make sure they aren't using AI themselves and damaging their models.

On the other hand, AI companies are being run by some of the laziest and stupidest people alive, and are probably just rubber-stamping everything no matter how blatantly garbage the results are.

Not a sneer, but still a damn good piece on AI from Brian Merchant:

The great and justified rage over using AI to automate the arts

(Personal sidenote: Tech's public image is almost certainly gonna take a nosedive as a result of this AI bubble. "We made a machine with the express purpose of putting artists out of business" isn't a business case, it's the setup for a shitty teen dystopian novel.)

(Fuck, now I wanna try and predict how the AI bubble bursting will play out...)

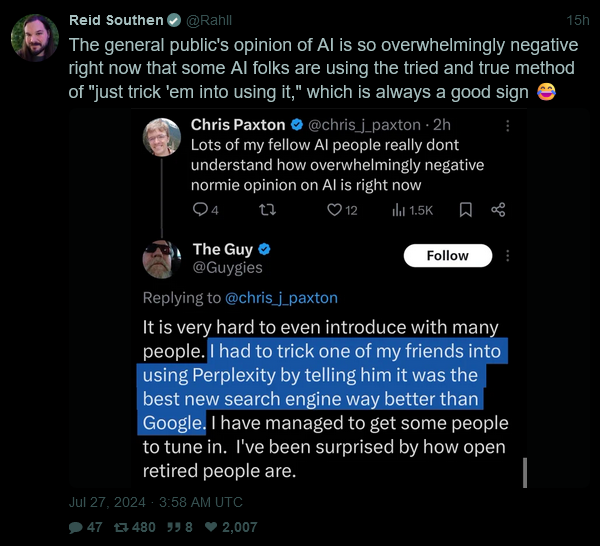

It's a surprisingly good complement to OP Chris Paxton's Tweet about "normie opinion on AI", given it shows why said "normie opinion" is so resoundingly negative.

I've made some brief nods to how the AI bubble is rapidly souring public perception of tech (here and here), but it really feels like AI has, to quote @datarama, "made tech synonymous with “monstrous assholes” in a non-trivial chunk of public consciousness".

I feel like I should collect my thoughts on that front - I could probably make an interesting post out of it.