The y-axis is absolute eye bleach. Also implying that an "AI researcher" has the effective compute of 10^6 smart high schoolers. What the fuck are these chodes smoking?

BigMuffin69

AH THE TSP MOVIE IS SO FUN :)

btw, as a shill for big MIP, I am compelled to share this site which has solutions for real-world TSPs!

No, they never address this. And as someone who personally works on large-scale optimization problems for a living, I do think it's difficult for the public to understand that, no, a 10000 IQ super machine will not be able to just "solve these problems" in a nanosecond like Yud thinks. And it's not as if the super machine can just avoid having to solve them. No. NP-hard problems are fucking everywhere. (Fun fact: for many problems of interest, even approximating the solution to within a given accuracy is NP-hard, so heuristics with guaranteed accuracy don't even help.)
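To put a number on the search space: a symmetric TSP on n cities has (n-1)!/2 distinct tours. The sketch below is a toy that assumes a plain distance matrix, and the brute-force enumerator is NOT how real solvers (or the big MIP crowd) do it; branch-and-cut is vastly smarter in practice. It only shows how fast exhaustive search blows up. Unless P = NP, no exact algorithm runs in polynomial time in the worst case.

```python
import itertools
import math


def num_distinct_tours(n: int) -> int:
    """Number of distinct closed tours in a symmetric TSP on n >= 3 cities."""
    return math.factorial(n - 1) // 2


def brute_force_tsp(dist):
    """Exact TSP by enumerating every tour that starts at city 0.

    `dist` is a square matrix of pairwise distances. Runtime is O(n!),
    so this is only usable for a handful of cities.
    """
    n = len(dist)
    best_cost, best_tour = math.inf, None
    for perm in itertools.permutations(range(1, n)):
        tour = (0,) + perm
        cost = sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))
        if cost < best_cost:
            best_cost, best_tour = cost, tour
    return best_cost, best_tour


if __name__ == "__main__":
    for n in (10, 20, 50):
        print(n, "cities:", num_distinct_tours(n), "tours")
```

For 20 cities that is already about 6 × 10^16 tours, and every extra city multiplies the count again.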

I've often found myself frustrated that more computer scientists who should know better simply do not address this point. If verifying solutions is exponentially easier than coming up with them for many difficult problems (all signs point to yes), and if a superintelligent entity actually did exist (I mean, does a SAT solver count as a superintelligent entity?), it would probably be EASY to control. It would have to spend eons and massive amounts of energy coming up with its WORLD_DOMINATION_PLAN.exe, and you wouldn't be able to hide a supercomputer doing this massive calculation. Someone running the machine who saw it output TURN ALL HUMANS INTO PAPER CLIPS would say, 'ah, we are missing a constraint here, it thinks that this optimization problem is unbounded' <- this happens literally all the time in practice. Not the world domination part, but a poorly defined optimization problem that is unbounded. But again, it's easy to check that the solution is nonsense.
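The verification asymmetry can be made concrete. Checking a claimed TSP solution ("here is a tour of cost at most B") takes one pass over the tour, and checking a claimed unboundedness certificate for a linear program (a feasible point plus a ray along which the objective improves forever) takes a few dot products. A minimal sketch in plain Python, assuming the LP is written as max c·x subject to A x ≤ b, x ≥ 0; the function names are hypothetical, made up for illustration.

```python
def dot(u, v):
    """Plain dot product of two equal-length sequences."""
    return sum(ui * vi for ui, vi in zip(u, v))


def verify_tour(dist, tour, budget):
    """Polynomial-time check of a TSP certificate: `tour` visits every city
    exactly once and its closed-loop cost is at most `budget`."""
    n = len(dist)
    if sorted(tour) != list(range(n)):
        return False
    cost = sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))
    return cost <= budget


def certifies_unbounded(A, b, c, x0, d, tol=1e-9):
    """Check a certificate that  max c.x  s.t.  A x <= b, x >= 0  is unbounded:
    x0 is feasible, d >= 0 and A d <= 0 (so x0 + t*d stays feasible for all
    t >= 0), and c.d > 0 (so the objective grows without limit along d)."""
    x0_feasible = all(x >= -tol for x in x0) and all(
        dot(row, x0) <= bi + tol for row, bi in zip(A, b)
    )
    d_is_ray = all(di >= -tol for di in d) and all(dot(row, d) <= tol for row in A)
    return x0_feasible and d_is_ray and dot(c, d) > tol


if __name__ == "__main__":
    dist = [[0, 1, 2, 1], [1, 0, 1, 2], [2, 1, 0, 1], [1, 2, 1, 0]]
    print(verify_tour(dist, [0, 1, 2, 3], budget=4))  # True
    print(verify_tour(dist, [0, 2, 1, 3], budget=4))  # False: this tour costs 6
    # "Maximize x1 subject to x1 - x2 <= 1", with the cap on x1 forgotten:
    print(certifies_unbounded([[1, -1]], [1], [1, 0], x0=[0, 0], d=[1, 1]))  # True
    # Add the missing constraint x1 <= 10 and the same ray no longer certifies anything:
    print(certifies_unbounded([[1, -1], [1, 0]], [1, 10], [1, 0], x0=[0, 0], d=[1, 1]))  # False
```

A failed check only means this particular certificate is invalid, not that the LP is bounded; the point is that each check is cheap no matter how hard the certificate was to find.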

I know Francois Chollet (THE GOAT) has talked about how there are no unending exponentials, and that the faster the growth, the faster you hit constraints IRL (running out of data, running out of chips, running out of energy, etc.). I've also definitely heard professional shitposter Pedro Domingos explicitly discuss how NP-hardness strongly implies EA/LW-type thinking is straight-up fantasy. But it's a short list of people I can think of off the top of my head who have discussed this.
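A back-of-the-envelope version of the "no unending exponentials" point, as a toy model rather than anything Chollet specifically wrote: if use of some resource grows as x0·e^(kt) against a fixed supply C, it runs out at t = ln(C/x0)/k, so doubling the growth rate halves the runway. The starting share and growth rates below are arbitrary placeholders.

```python
import math


def time_until_cap(start, cap, rate):
    """Time for a quantity growing as start * exp(rate * t) to reach cap.

    Assumes continuous exponential growth with rate > 0 and cap > start > 0.
    """
    if not (rate > 0 and cap > start > 0):
        raise ValueError("need rate > 0 and cap > start > 0")
    return math.log(cap / start) / rate


if __name__ == "__main__":
    # Hypothetical: demand for some resource currently uses 1% of total supply.
    for rate in (0.25, 0.5, 1.0):  # made-up growth rates per year
        years = time_until_cap(start=1.0, cap=100.0, rate=rate)
        print(f"rate {rate}: supply exhausted after {years:.1f} years")
```

Because the runway depends only logarithmically on the supply, even a 10x bigger stockpile buys just ln(10)/k extra time.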

Edit: bizarrely, one person I didn't mention who has gone down this line of thinking is Ilya Sutskever; however, he has come to some frankly... uh... strange conclusions -> the only way to explain the success of ML is to conclude that models are Kolmogorov minimizers, i.e., by optimizing for loss over a training set, you are doing compression, which, done optimally, means solving an undecidable problem (Kolmogorov complexity is uncomputable). Nice theory. Definitely not motivated by bad sci-fi mysticism imbued with pure distilled hopium. But from my armchair-psychologist POV, it seems he implicitly acknowledges that for his fantasy to come true, he needs to escape the limitations of Turing machines, so he has to somehow shoehorn a method for hypercomputation into Turing machines. Smh, this is the kind of behavior reserved for aging physicists, amirite lads? Yet in 2023, it seemed like the whole world was succumbing to this gaslighting. He was giving this lecture to auditoriums full of tech bros, shilling this line of thinking to thunderous applause. I have olde CS prof friends who were like, don't we literally have mountains of evidence that this is straight-up crazy talk? Like, you can train an ANN to perform addition, and if you can look me straight in the eyes and say the absolute mess of weights that results looks anything like a Kolmogorov minimizer, then I know you are trying to sell me a bag of shit.

Truly I say unto you, it is easier for a camel to pass through the eye of a needle than it is to convince a 57 year old man who thinks he's still pulling off that leather jacket to wear a condom. (Tegmark 19:24, KJ Version)

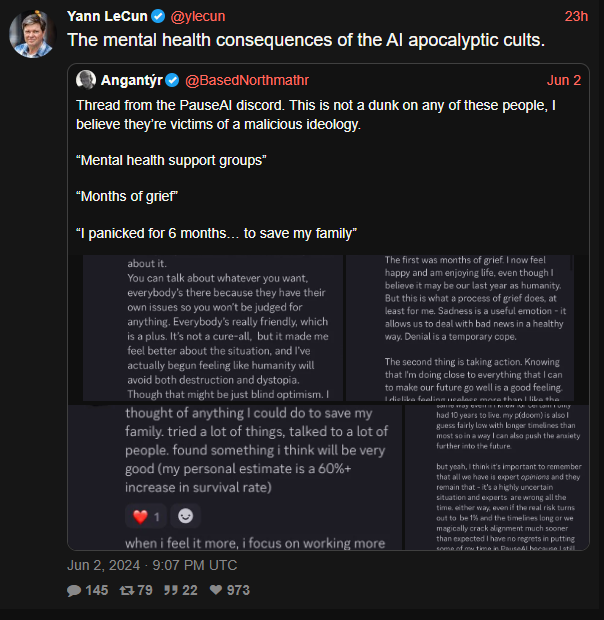

Not a sneer, just a feelsbadman.jpg, b.c. I know peeps who have been sucked into this "it's all Joever.png" mentality (myself included, for various we-live-in-hell reasons; honestly, I never recovered after my cousin explained to me what nukes were while we were playing in the sandbox at 3).

The sneerworthy content comes later:

1st) Rats never fail to impress with the appeal-to-authority fallacy, but 2nd) the authority in question is Max totally-unbiased-not-a-member-of-the-extinction-cult-and-definitely-not-pushing-crank-theories-for-decades fuckin' Tegmark roflmaou

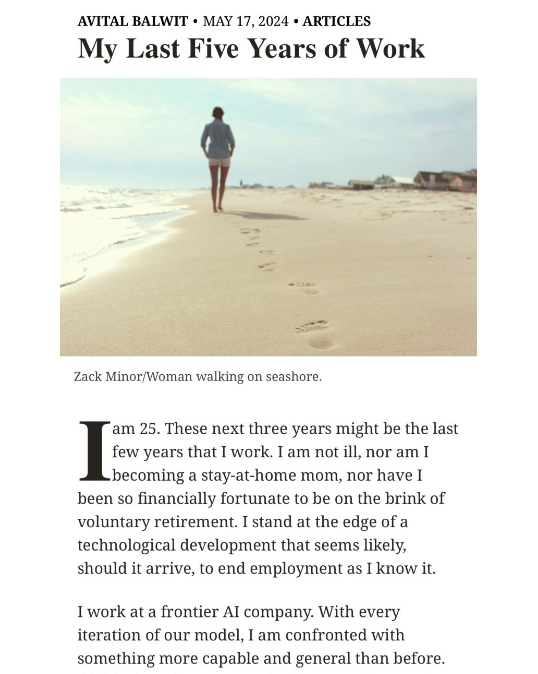

This gem is from 25-year-old Avital Balwit, the Chief of Staff at Anthropic and a researcher of "transformative AI at Oxford’s Future of Humanity Institute", discussing the end of labour as she knows it. She continues:

"The general reaction to language models among knowledge workers is one of denial. They grasp at the ever diminishing number of places where such models still struggle, rather than noticing the ever-growing range of tasks where they have reached or passed human level. [wherein I define human level from my human level reasoning benchmark that I have overfitted my model to by feeding it the test set] Many will point out that AI systems are not yet writing award-winning books, let alone patenting inventions. But most of us also don’t do these things. "

Ah yes, even though the synthetic text machine has failed to achieve a basic understanding of the world generation after generation, it has been able to produce ever larger volumes of synthetic text! The people who point out that it still fails basic arithmetic tasks are the ones who are in denial, the god machine is nigh!

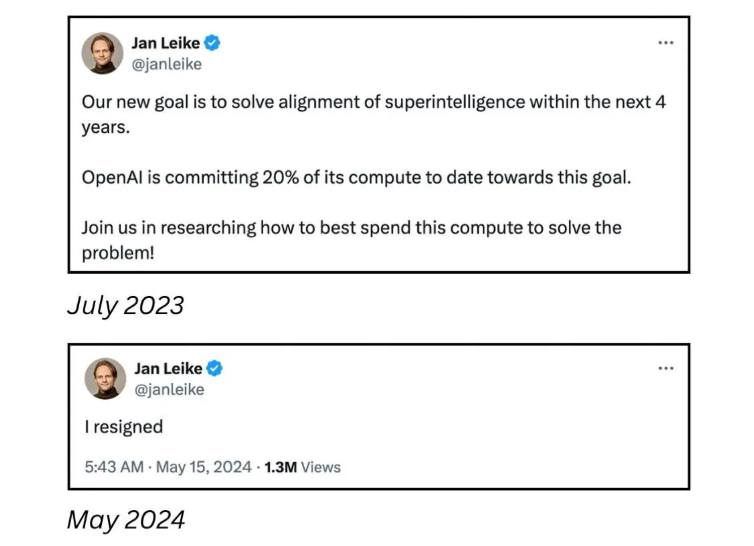

Bonus sneer:

Ironically, the first job to go the way of the dodo was "researcher at FHI", so I understand why she's trying to get ahead of the fallout of losing her job as chief Dario Amodei wrangler at OpenAI2: electric boogaloo.

Idk, I'm still workshopping this one.

🐍

I don't understand why the wife does not simply consume her husband, a parenting style I've developed from observing the noble praying mantis in the wild.

Ugh, this post has me tilted. If your utility function is

$$\max \sum_t \log\left(\text{fun spending}_t\right) \cdot p(\text{alive}_t) \quad \text{s.t.} \quad \text{cash}_t = \text{savings}_{t-1} \cdot r + \text{work}_t - \text{spending}_t,$$

etc.,

There's no fucking way the optimal solution is to blow all your money now, because the disutility of living in poverty for decades is so high (log utility goes to minus infinity as spending goes to zero). It's the same reason people pay for insurance: no one expects their house to burn down tomorrow, but protecting yourself against the risk is the 100% correct decision.
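A quick numeric check of both claims, under assumptions the post doesn't pin down: no work income (work_t = 0), a fixed gross return r, all spending counted as fun spending, cash_t treated as savings_t, and made-up survival probabilities. With log utility, the first-order condition p(alive_t)/spending_t = λ/r^t gives spending_t = W·p(alive_t)·r^t / Σ_s p(alive_s): mortality risk tilts spending toward the present, but only in proportion to the odds you're still around, never "all of it now". The insurance half applies the same log utility to a one-period gamble; all function names and numbers are hypothetical.

```python
import math


def expected_utility(spending, p_alive):
    """The objective above: sum over t of p(alive_t) * log(spending_t).
    Zero spending in a period you might be alive for scores -infinity."""
    total = 0.0
    for c, p in zip(spending, p_alive):
        if p == 0:
            continue
        if c <= 0:
            return -math.inf
        total += p * math.log(c)
    return total


def optimal_spending(wealth, p_alive, r=1.0):
    """Closed-form optimum assuming work_t = 0, gross return r, and the budget
    sum_t spending_t / r**t = wealth (from p_t / c_t = lam / r**t)."""
    total_p = sum(p_alive)
    return [wealth * p * r**t / total_p for t, p in enumerate(p_alive)]


def lowest_savings(wealth, spending, r=1.0):
    """Roll savings_t = r * savings_{t-1} - spending_t forward (work_t = 0) and
    return the lowest balance reached; negative means the plan is infeasible."""
    savings, lowest = wealth, math.inf
    for t, c in enumerate(spending):
        if t > 0:
            savings *= r
        savings -= c
        lowest = min(lowest, savings)
    return lowest


def uninsured_utility(wealth, loss, p_loss):
    """Expected log utility when a loss hits with probability p_loss."""
    return (1 - p_loss) * math.log(wealth) + p_loss * math.log(wealth - loss)


def max_premium(wealth, loss, p_loss):
    """Largest premium a log-utility agent would still pay for full coverage."""
    return wealth - math.exp(uninsured_utility(wealth, loss, p_loss))


if __name__ == "__main__":
    p_alive = [1.0, 0.9, 0.8, 0.7, 0.6]  # hypothetical survival odds per period
    smooth = optimal_spending(100.0, p_alive)
    splurge = [99.0, 0.25, 0.25, 0.25, 0.25]  # blow (almost) everything now
    print(smooth, expected_utility(smooth, p_alive), expected_utility(splurge, p_alive))
    # House worth 90 of your 100, 1% chance it burns: expected loss is only 0.9,
    # yet the agent would still pay a premium of up to max_premium(...).
    print(max_premium(100.0, 90.0, 0.01))
```

Because log is strictly concave, moving even one unit of spending from a later period to now (or skipping insurance priced near the fair rate) lowers the objective; the smooth plan is the unique optimum under these assumptions.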

Idk, they're the Rationalists™, so what the hell do I know.

It never ceases to amaze me watching these tech bros breathlessly hype the next revolutionary product, only for it to be a huge nothing burger. Like, that's it? You gave GPT an image-to-text input and a text-to-speech app? Dawg, I've been watching Joe Biden & Trump play Fortnite for 5 years at this point; text-to-speech just doesn't hit the same anymore.

I mean, my god, at least some hardcore engineering went into making the migraine-inducing Apple Vision Pro. If this is the best card in OAI's deck, I would be panicking as an investor.

Like a model trained on its own outputs, Geoff has drunk his own Kool-Aid and completely decohered.

I got you homie
