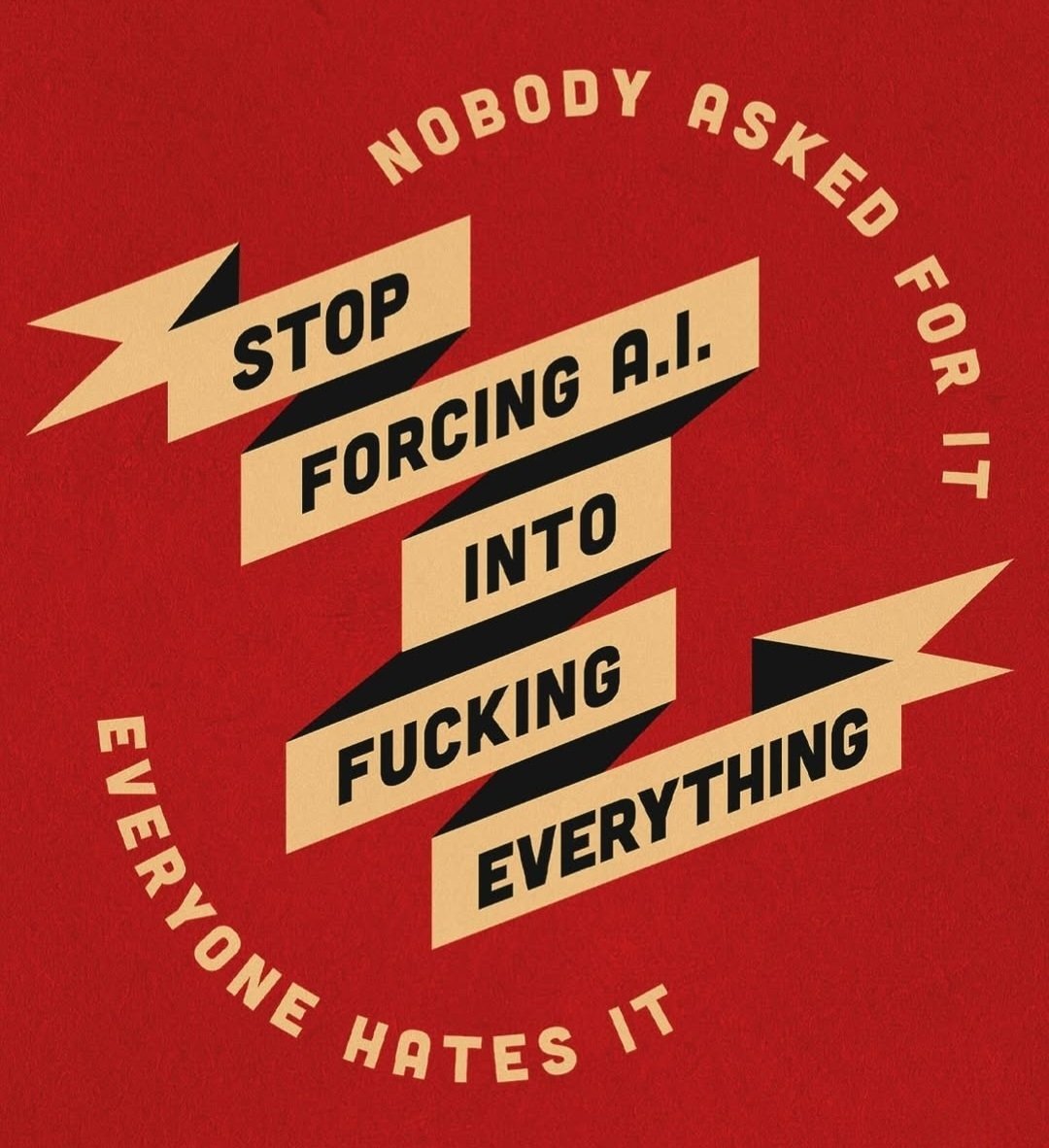

But the companies must posture that they're on the cutting edge! Even if they only put the letters "AI" on the box of a rice cooker without changing the rice cooker.

When it comes to the marketing teams at such companies, I wonder what the ratio is between true believers and "this is stupid, but if it spikes the numbers next quarter, that will benefit me."

"AI" isn't ready for any type of general consumer market and that's painfully obvious to anyone even remotely aware of how it's developing, including investors.

...but the cost-benefit analysis on being first to market with anything even remotely close to the universal applicability of AI is so absolutely, insanely on the "benefit" side that it's worth essentially any conceivable risk, because the benefit if you get it right is essentially infinite.

It won't ever stop

One of the leading sources of enshittification.

I hate what AI has become and what it's being used for. I strongly believe it could have been used far more ethically. A solid example is Perplexity: it shows the sources it used at the top, so they're the first thing you see when it gives a response. Everything else does the opposite. Even Gemini does, despite being rather useful day to day when I need a quick answer and can't hold my phone, like when I'm driving, doing dishes, or doing yard work with my earbuds in.

Forcing AI into everything maximizes efficiency, automates repetitive tasks, and unlocks insights from vast data sets that humans can't process as effectively. It enhances personalization in services, driving innovation and improving user experiences across industries. However, thoughtful integration is critical to avoid ethical pitfalls, maintain human oversight, and ensure meaningful, responsible use of AI.

It's not really targeting consumers here; it's just to impress investors.

Please tell this to my simulation group members. I told them, but they won't listen.

Plot twist: this image was generated by AI /j

The Imperium of Man got this right.

yes, we need to ask for AI's consent first!

oh wait, I think I read it wrong

I don't blame them, they have to compensate their organic intelligence somehow.

I like this typography, but I don't like somebody pretending they represent everybody.