In other news, Hindenburg Research just put out a truly damning report on Roblox, aptly titled "Roblox: Inflated Key Metrics For Wall Street And A Pedophile Hellscape For Kids", and the markets have responded.

I think Zuckerberg has been saying the silent part out loud since day one.

People just submitted it.

I don't know why.

They "trust me"

Dumb fucks

I trained a neural network on all the ways I've said that I hate these people, and it screamed in eldritch spectra before collapsing into silence.

As always with plagiarism, regardless of what they say they always, always, always act out of a complete disregard for the value of whatever they're ripping off.

Can't really say I'm surprised that Mr Facebook takes this attitude. His whole fortune is built on the belief that aggregating and hosting content is more valuable than creating it

hmm, I meant to link that when I saw it, guess I forgot. whoops :D

but yeah, entirely unsurprising from the guy who literally started by harvesting a pile of data and then building a commercial service off it. facebook and parentco should be ended, his assets taken for public good

speaking of the Godot engine, here’s a layered sneer from the Cruelty Squad developer (via Mastodon):

image description

a post from Consumer Softproducts, the studio behind Cruelty Squad:

weve read the room and have now successfully removed AI from cruelty squad. each enemy is now controlled in almost real time by an employee in a low labor cost country

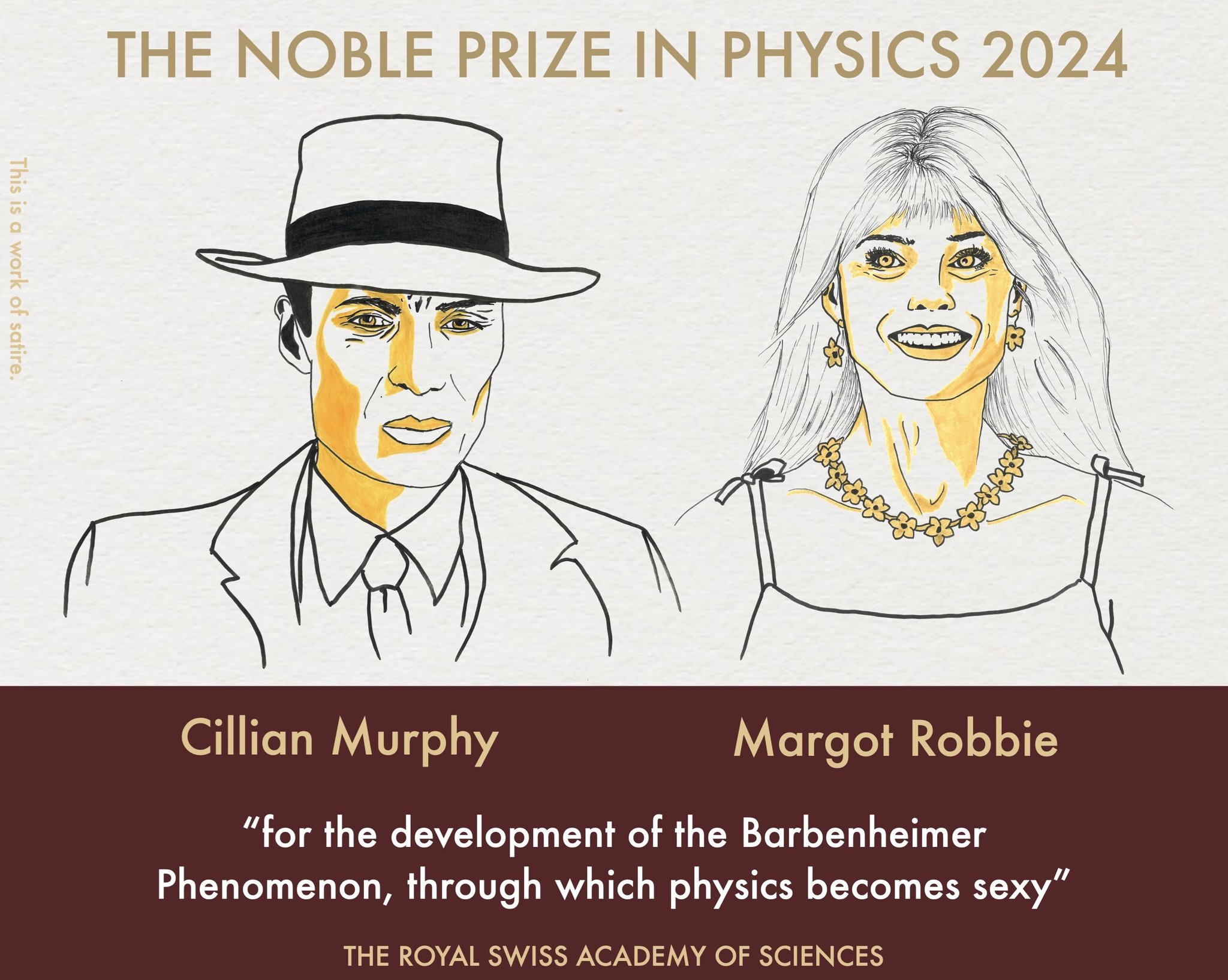

i wouldn't want to sound like I'm running down Hinton's work on neural networks, it's the foundational tool of much of what's called "AI", certainly of ML

but uh, it's comp sci which is applied mathematics

how does this rate a physics Nobel??

They're reeeaallly leaning into the fact that some of the math involved is also used in statistical physics. And, OK, we could have an academic debate about how the boundaries of fields are drawn and the extent to which the divisions between them are cultural conventions. But the more important thing is that the Nobel Prize is a bad institution.

https://www.bbc.com/news/articles/c62r02z75jyo

It’s going to be like the Industrial Revolution - but instead of our physical capabilities, it’s going to exceed our intellectual capabilities ... but I worry that the overall consequences of this might be systems that are more intelligent than us that might eventually take control

😩

Don't know how much this fits the community, as you use a lot of terms I'm not inherently familiar with (is there a "welcome guide" of some sort somewhere that I missed?).

Anyway, Wikipedia moderators are now realizing that LLMs are causing problems for them, but they are very careful to not smack the beehive:

The purpose of this project is not to restrict or ban the use of AI in articles, but to verify that its output is acceptable and constructive, and to fix or remove it otherwise.

I just... don't have words for how bad this is going to go. How much work this will inevitably be. At least we'll get a real world example of just how many guardrails are actually needed to make LLM text "work" for this sort of use case, where neutrality, truth, and cited sources are important (at least on paper).

I hope some people watch this closely, I'm sure there's going to be some gold in this mess.

The purpose of this project is not to restrict or ban the use of AI in articles, but to verify that its output is acceptable and constructive, and to fix or remove it otherwise.

Wikipedia's mod team definitely haven't realised it yet, but this part is pretty much a de facto ban on using AI. AI is incapable of producing output that would be acceptable for a Wikipedia article - in basically every instance, it's getting nuked.

Welcome to the club. They say a shared suffering is only half the suffering.

This was discussed in last week's Stubsack, but I don't think we mind talking about the same thing twice. I, for one, do not look forward to browsing Wikipedia exclusively through pre-2024 archived versions, so I hope (with some pessimism) their disappointingly milquetoast stance works out.

Reading a bit of the old Reddit sneerclub can help understand some of the Awful vernacular, but otherwise it's as much of a lurkmoar as any other online circlejerk. The old guard keep referencing cringe techbros and TESCREALs I've never heard of while I still can't remember which Scott A we're talking about in which thread.

Scott Computers is married and a father but still writes like an incel and fundamentally can't believe that anyone interested in computer science or physics might think in a different way than he does. Dilbert Scott is an incredibly divorced man. Scott Adderall is the leader of the beige tribe.

the mozilla PR campaign to convince everyone that advertising is the lifeblood of commerce and that this is perfectly fine and good (and that everyone should just accept their viewpoint) continues

We need to stare it straight in the eyes and try to fix it

try, you say? and what's your plan for when you fail, but you've lost all your values in service of the attempt?

For this, we owe our community an apology for not engaging and communicating our vision effectively. Mozilla is only Mozilla if we share our thinking, engage people along the way, and incorporate that feedback into our efforts to help reform the ecosystem.

are you fucking kidding me? "we can only be who we are if we maybe sorta listen to you while we keep doing what we wanted to do"? seriously?

the purestrain corporate non-apology that is “we should have communicated our vision effectively” when your entire community is telling you in no uncertain terms to give up on that vision because it’s a terrible idea nobody wants

How do we ensure that privacy is not a privilege of the few but a fundamental right available to everyone? These are significant and enduring questions that have no single answer. But, for right now on the internet of today, a big part of the answer is online advertising.

How do we ensure that traffic safety is not a privilege of the few but a fundamental right available to everyone? A big part of the answer is drunk driving.

How do we prevent huge segments of the world from being priced out of access through paywalls?

Based Mozilla. Abolish landlords. Obliterate the commodity form. Full luxury gay communism now.

PC Gamer put out a pro-AI piece recently - unsurprisingly, Twitter tore it apart pretty publicly:

I could only find one positive response in the replies, and that one is getting torn to shreds as well:

I did also find a quote-tweet calling the current AI bubble an "anti-art period of time", which has been doing pretty damn well:

Against my better judgment, I'm whipping out another sidenote:

With the general flood of AI slop on the Internet (a slop-nami as I've taken to calling it), and the quasi-realistic style most of it takes, I expect we're gonna see photorealistic art/visuals take a major decline in popularity/cultural cachet, with an attendant boom in abstract/surreal/stylised visuals

On the popularity front, any artist producing something photorealistic will struggle to avoid blending in with the slop-nami, whilst more overtly stylised pieces stand out all the more starkly.

On the "cultural cachet" front, I can see photorealistic visuals becoming seen as a form of "techno-kitsch" - a form of "anti-art" which suggests a lack of artistic vision/direction on its creators' part, if not a total lack of artistic merit.

Proton continuing to do pointlessly stupid and self-destructive things:

https://infosec.exchange/@malwaretech/113257047424000919

They're basically admitting they didn't pay an influencer to spread misinformation about public wifi in order to sell VPN products, they just stole her likeness, used her photo, and attributed a completely made-up quote to her.

But it was a joke guys! We did a satire! I’m totally certain I know what satire is!

The logical conclusion of normalizing "Social Media Manager" as a role in companies is that as they get better at their jobs and become more believable, the average corporate communication will trend towards 13-year-old edgy shitposter. God I feel old.

every time I get mail “even a 🤏 teensy bit like this! 🤩” from serious-company I have actual financial dealings with, a part of me dies inside

and it’s getting more goddamn frequent too

Another upcoming train wreck to add to your busy schedule: O’Reilly (the tech book publisher) is apparently going to be doing ai-translated versions of past works. Not everyone is entirely happy about this. I wonder how much human oversight will be involved in the process.

https://www.linkedin.com/posts/parisba_publications-activity-7249244992496361472-4pLj

translate technically fiddly instructions of the type where people have trouble spotting mistakes, with patterned noise generators. what could go wrong

Earlier today, the Internet Archive suffered a DDoS attack, which has now been claimed by the BlackMeta hacktivist group, who says they will be conducting additional attacks.

Hacktivist group? The fuck can you claim to be an activist for if your target is the Internet Archive?

Training my militia of revolutionary freedom fighters to attack homeless shelters, soup kitchens, nature preserves, libraries, and children's playgrounds.

Someone shared this website with me at work and now I am sharing the horror with you all: https://www.syntheticusers.com/

Reduce your time-to-insight

I do not think that word means what they think it means.

Emily Bender devoted a whole episode of Mystery AI Hype Theater 3000 to this.

I have nothing to add, save the screaming.

Synthetic Users uses the power of LLMs to generate users that have very high Synthetic Organic Parity. We start by generating a personality profile for each user, very much like a reptilian brain around which we reconstruct its personality. It’s a reconstruction because we are relying on the billions of parameters that LLMs have at their disposal.

They could've worded this so many other ways

But I suppose creepiness is a selling point these days

Nobody likes Bryan Johnson’s breakfast at the Network School

A cafe run by immortality-obsessed multi-millionaire Bryan Johnson is reportedly struggling to attract customers with students at the crypto-funded Network School in Singapore preferring the hotel’s breakfast buffet over “bunny food.”

I did not expect to be tricked into reading about the nighttime erections of the man with the most severe midlife crisis in the world.

he has 80% fewer gray hairs, representing a “31-year age reversal”

According to Wikipedia this guy is 47. Sorry about your hair as a teenager I guess? I hope the early graying didn't lead to any long term self-esteem issues.

Any mild pushback to the claims of LLM companies sure brings out the promptfondlers on lobste.rs

https://lobste.rs/s/qcppwf/llms_don_t_do_formal_reasoning_is_huge

Plenty of agreement, but also a lot of "what is reasoning, really" and "humans are dumb too, so it's not so surprising GenAIs are too!". This is sure a solid foundation for multi-billion startups, yes sirree.

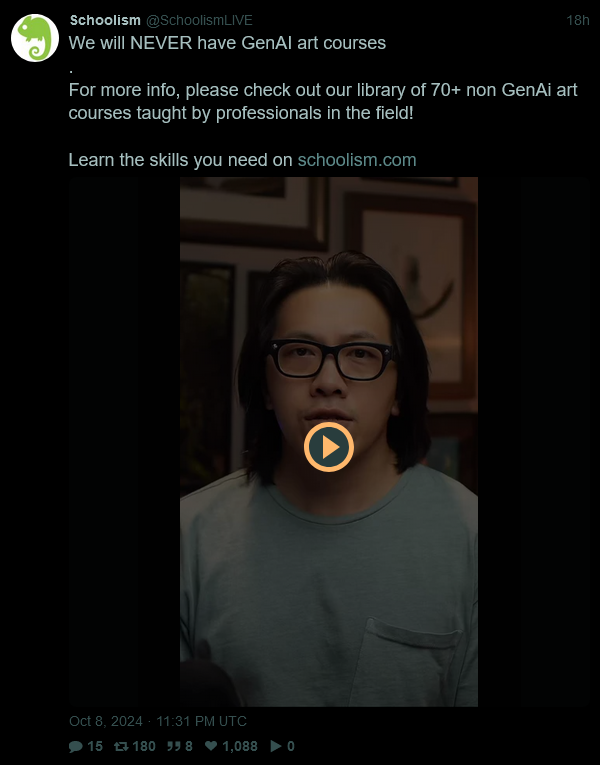

Online art school Schoolism publicly sneers at AI art, gets standing ovation

And now, a quick sidenote:

This is gut instinct, but I'm starting to get the feeling this AI bubble's gonna destroy the concept of artificial intelligence as we know it.

Mainly because of the slop-nami and the AI industry's repeated failures to solve hallucinations - both of those, I feel, have built an image of AI as inherently incapable of humanlike intelligence/creativity (let alone Superintelligence^tm^), no matter how many server farms you build or oceans of water you boil.

Additionally, I suspect that working on/with AI, or supporting it in any capacity, is becoming increasingly viewed as a major red flag - a "tech asshole signifier" to quote Baldur Bjarnason for the bajillionth time.

For a specific example, the major controversy that swirled around "Scooby Doo, Where Are You? In... SPRINGTRAPPED!" over its use of AI voices would be my pick.

Eagan Tilghman, the man behind the ~~slaughter~~ animation, may have been a random indie animator, who made Springtrapped on a shoestring budget and with zero intention of making even a cent off it, but all those mitigating circumstances didn't save the poor bastard from getting raked over the coals anyway. If that isn't a bad sign for the future of AI as a concept, I don't know what is.

Just something I found in the wild (r/MachineLearning): Please point me in the right direction for further exploring my line of thinking in AI alignment

I'm not a researcher or working in AI or anything, but ...

you don't say

New piece from Brian Merchant: Yes, the striking dockworkers were Luddites. And they won.

Pulling out a specific paragraph here (bolding mine):

I was glad to see some in the press recognizing this, which shows something of a sea change is underfoot; outlets like the Washington Post, CNN, and even Inc. Magazine all published pieces sympathizing with the longshoremen besieged by automation—and advised workers worried about AI to pay attention. “Dockworkers are waging a battle against automation,” the CNN headline noted, “The rest of us may want to take notes.” That feeling that many more jobs might be vulnerable to automation by AI is perhaps opening up new pathways to solidarity, new alliances.

To add my thoughts, those feelings likely aren't just that many more jobs are at risk than people thought, but that AI is primarily, if not exclusively, threatening the jobs people want to do (art, poetry, that sorta shit), and leaving the dangerous/boring jobs mostly untouched - effectively the exact opposite of the future the general public wants AI to bring them.

And on the subject of AI: strava is adding ai analytics. The press release is pretty waffly, as it would appear that they’d decided to add ai before actually working out what they’d do with it so, uh, it’ll help analyse the reams of fairly useless statistics that strava computes about you and, um, help celebrate your milestones?

Not a sneer, but I saw an article that was basically an extremely goddamn long list of forum recommendations and it gave me a warm and fuzzy feeling inside.