Big assumption that we all use AI. Some of us don't as per company policy.

AI

Artificial intelligence (AI) is intelligence demonstrated by machines, unlike the natural intelligence displayed by humans and animals, which involves consciousness and emotionality. The distinction between the former and the latter categories is often revealed by the acronym chosen.

I use the ChatGPT Google Sheets extension pretty much every day to create tables, charts, and all kinds of lists for various clients and projects. The results are excellent, and it saves me a significant amount of time.

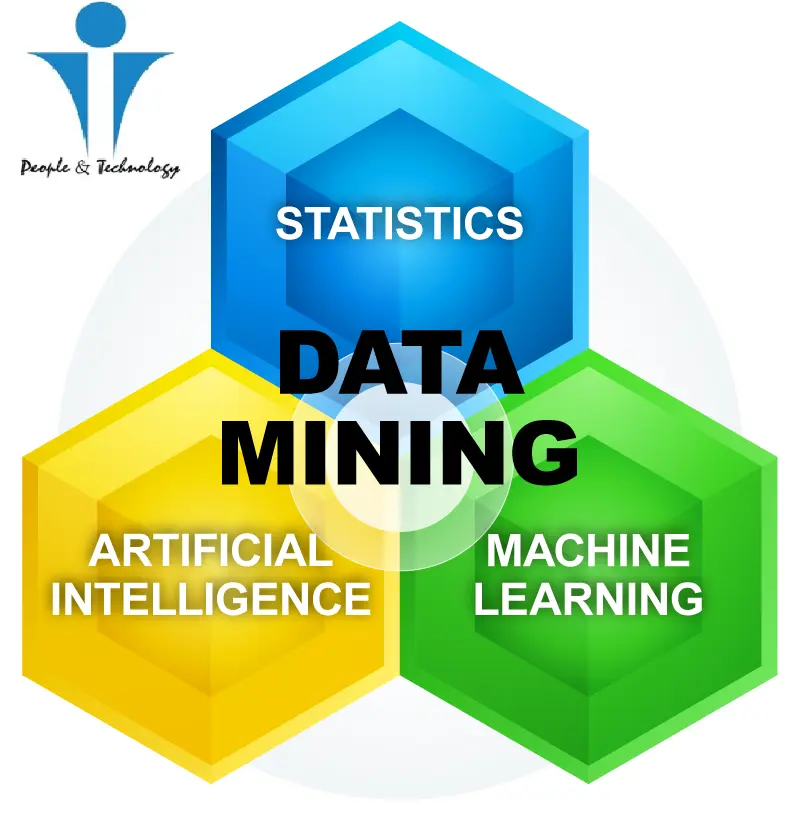

I don't understand the image. Is that supposed to be a Venn diagram?

Anyway, to answer your question, I use GitHub Copilot for all of my coding work, and ChatGPT here and there throughout the week. They've both been great productivity boosters. Sometimes it also gets foisted on me when I don't want it, like when I'm trying to talk to customer service, or when Notion tries to put words in my mouth because I accidentally hit the wrong keyboard shortcut.

I refuse to contribute to the acceleration of climate change, especially for frivolity such as the mass production of fast-fashion-style memes: used once, and thrown away forever. I will not condone the continued violation of our rights that went into creating LLMs. Big tech's and the government's use of mass surveillance and AI has solidified our place in the dystopian present. So no, I don't use AI. But I do recycle. And at least I think I'm fun at parties...

Constantly, unfortunately.

I work in cybersecurity, and you can't swing a Cat-5 o' Nine Tails without hitting some vendor talking up the "AI tools" in their products. Some of them are kinda OK. Mostly, these are language models serving up relevant documentation or code snippets, stuff you previously found with a bit of googling. The problem is that AI has also been stuffed into network and system analysis to look for anomalous activity, and every single one of those models is complete shit. While they do find anomalies, it's mostly because they alert on so much stuff, generating so many false positives, that they get one right by blind chance. If you want to make money on a model, sell it to a security vendor. Those of us who have to deal with the tools will hate you, but CEOs and CISOs are eating that shit up right now. If you want to make something actually useful, make a model that identifies and tunes out the false positives from other models.