The things that I do while I'm meant to be sleeping is apparently break Reddthat! Sorry folks. Here's a postmortem on the issue.

What happened

I wanted a way in which I could view the metrics of our lemmy instance. This is a feature you need to turn on called Prometheus Metrics. This isn't a new thing for me, and I tackle these issues at $job daily.

After reading the documentation here on prometheus, it looks like it is a compile time-flag. Meaning the pre-build packages that are generated do not contain metric endpoints.

No worries I thought, I was already building the lemmy application and container successfully as part of getting our video support.

So I built a new container with the correct build flags, turned on my dev server again, deployed, and tested extensively.

We now have interesting metrics for easy diagnosis. Tested posting comments, as well as uploading Images as well as testing out the new Video upload!

So we've done our best and deploy to prod.

ohno.webm

As you know from the other side... it didn't go to well.

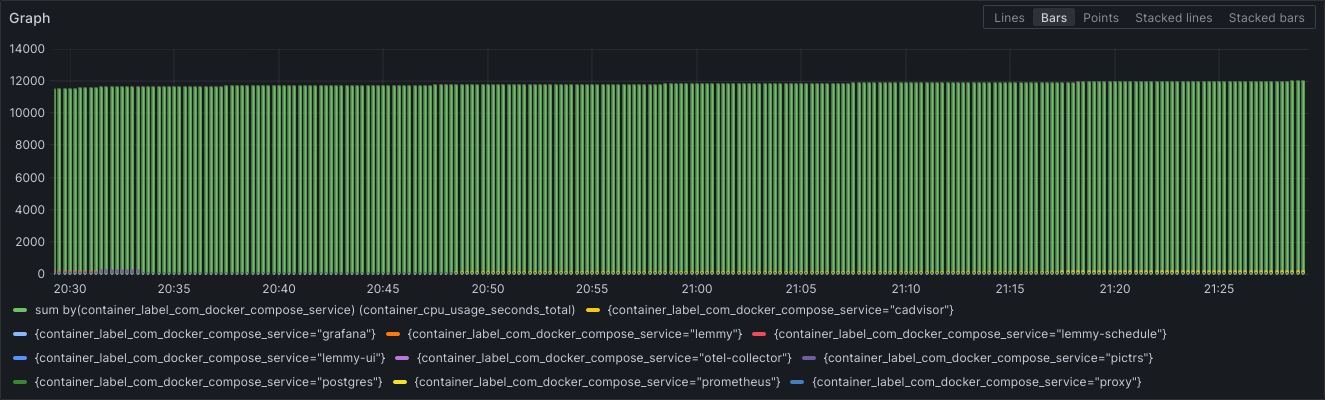

After 2 minutes of huge latencies my phone lights up with a billion notifications so I know something isn't working... Initial indications showed super high cpu usage of the Lemmy containers (the one I newly created!) That was the first minor outage / high latencies around 630-7:00 UTC and we "fixed" it by rolling back the version, confirming everything was back to normal. I went and had a bite to eat.

not_again.mp4

Fool-heartedly I attempted it again, with: clearing out build cache, directly building on the server, more testing, more builds, more testing, and more testing.

I opened up 50 terminals and basically DOS'd the (my) dev server with GET and POST requests as an attempt to trigger some form of high enough load that it would cause the testing to be validated and I'd figure out where I had gone wrong in the first place.

Nothing would trigger the issue, so I continued along with my validation and "confirmed everything was working".

Final Issue

So we are deploying to production again but we know it might not go so well so we are doing everything we can to minimise the issues.

At this point we've completely ditched our video upload patch and gone with a completely blank 0.18.4 with the metrics build flag to minimise the possible issues.

NOPE

At this point I accept the downtime and attempt to work on a solution while in fire. (I would have added the everything-is-fine meme but we've already got a few here)

Things we know:

- My lemmy app build is not actioning requests fast enough

- The error relates to a Timeout happening inside lemmy which I assumed was to postgres because there was about ~15 postgres processes in the process of performing "Authentication" (this should be INSTANT)

- postgres logs show an error with concurrency.

- but this error doesnt happen with the dev's packaged app

- postgres isn't picking up the changes to the custom configuration

- was this always the case?!?!?!? are all the Lemmy admins stupid? Or are we all about to have a bad time

- The docker container

postgres:15-alpinenow points to a new SHA! So a new release happened, and it was auto updated. ~9 days ago.

So, i fsck'd with postgres for a bit, and attempted to get it to use our customPostgresql configuration. This was the cause of the complete ~5 minute downtime. I took down everything except postgres to perform a backup because at this point I wasn't too certain what was going to be the cause of the issue.

You can never have too many backups! You don't agree, lets have a civil discussion over espressos in the comments.

I bought postgres back up with the max_connections to what it should have been (it was at the default? 100). And prayed to a deity that would alienate the least amount of people from reddthat :P

To no avail. Even with our postgres sql tuning the lemmy container I build was not performing under load as well as the developers container.

So I pretty much wasted 3 hours of my life, and reverted everything back.

Results

- All lemmy builds are not equal. Idk what the dev's are doing but when you follow the docs one would hope to expect it to work. I'm probably missing something...

- Postgres released a new

15-alpinedocker container 9 days ago, pretty sure no-one noticed, but did it break our custom configuration? or was our custom configuration always busted? (with reference to thelemmy-ansiblerepo here ) - I need a way to replay logs from our production server against our dev environment to generate the correct amount of load to catch these edge cases. (Anyone know of anything?)

At this point I'm unsure of a way forward to create the lemmy containers in a way that will perform the same as the developers ones, so instead of being one of the first instances to be able to upload video content, we might have to settle for video content when it comes. I've been chatting with the pict-rs dev, and I think we are on the same page relating to a path forward. Should be some easy wins coming in the next versions.

Final Finally

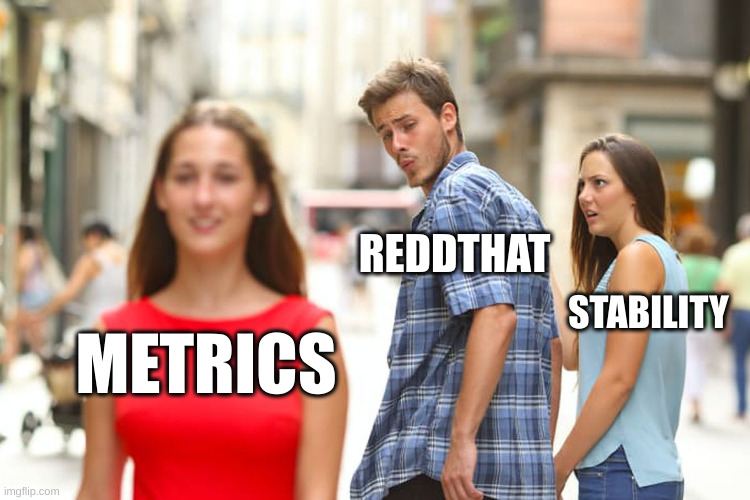

I'll be choosing stability from now on.

Tiff

Notes for other lemmy admins / me for testing later: postgres:15-alpine:

2 months ago sha: sha256:696ffaadb338660eea62083440b46d40914b387b29fb1cb6661e060c8f576636

9 days ago sha: sha256:ab8fb914369ebea003697b7d81546c612f3e9026ac78502db79fcfee40a6d049