This was a problem on Reddit too. Anyone could create accounts - heck, I had 8 accounts:

one main, one alt, one "professional" (linked publicly on my website), and five for my bots (I created the accounts optimistically, but the bots never properly ran). I had all 8 accounts signed in on my third-party app and could easily manipulate votes on my own posts.

I feel like this is what happened when you'd see posts with hundreds or thousands of upvotes but only 20-ish comments.

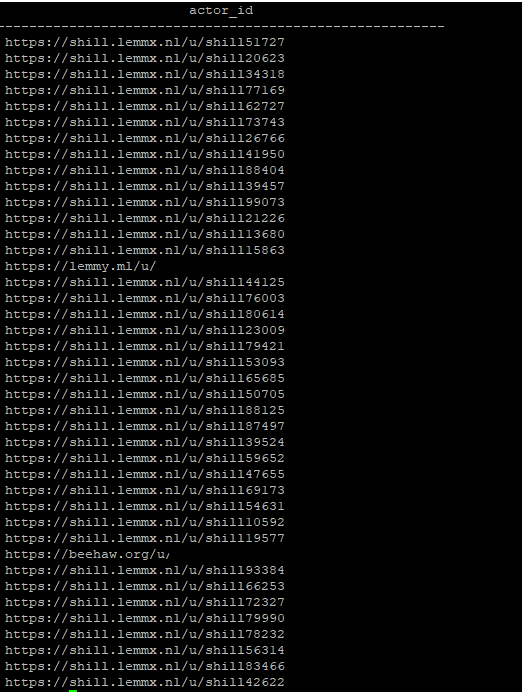

There needs to be a better way to solve this, but I'm unsure if we truly can. Botnets are a problem across all social media (my undergrad thesis many years ago was on detecting botnets on Reddit using Graph Neural Networks).

Fwiw, I have only one Lemmy account.