Recent advancements in text-to-image (T2I) and vision-language-to-image (VL2I)

generation have made significant strides. However, the generation from generalized

vision-language inputs, especially involving multiple images, remains

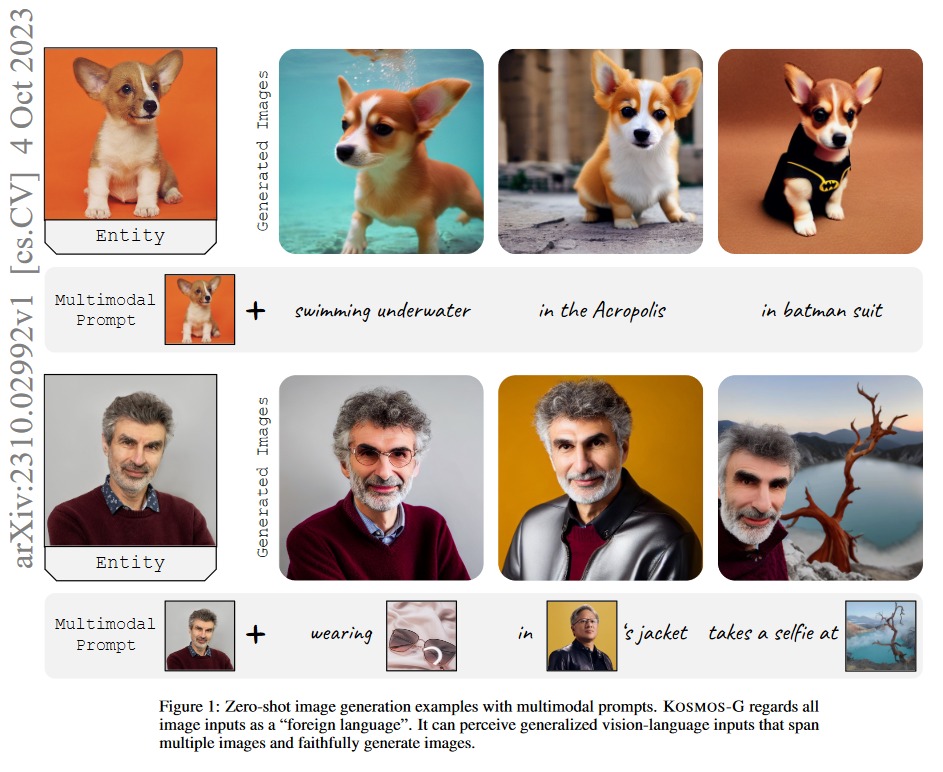

under-explored. This paper presents KOSMOS-G, a model that leverages the advanced

perception capabilities of Multimodal Large Language Models (MLLMs)

to tackle the aforementioned challenge. Our approach aligns the output space of

MLLM with CLIP using the textual modality as an anchor and performs compositional

instruction tuning on curated data. KOSMOS-G demonstrates a unique

capability of zero-shot multi-entity subject-driven generation. Notably, the score

distillation instruction tuning requires no modifications to the image decoder. This

allows for a seamless substitution of CLIP and effortless integration with a myriad

of U-Net techniques ranging from fine-grained controls to personalized image

decoder variants. We posit KOSMOS-G as an initial attempt towards the goal of

“image as a foreign language in image generation.” The code can be found at

https://aka.ms/Kosmos-G.
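
For readers wondering what "aligning the output space of MLLM with CLIP using the textual modality as an anchor" might look like in the simplest possible terms, here is a toy sketch. It is not the authors' implementation: the linear map, the two-dimensional vectors, and the names `project`, `align`, `mllm_out`, and `clip_text` are all hypothetical stand-ins. The idea it illustrates is only that the same captions are embedded by both models, and a mapping is trained so the MLLM-side vectors land close to the frozen CLIP text vectors under a mean-squared-error loss.

```python
import random

def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def project(W, v):
    """Matrix-vector product: map a source-space vector into the target space."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def align(src, tgt, steps=500, lr=0.1, seed=0):
    """Fit a linear map W so that project(W, src[i]) approximates tgt[i].

    Plain per-sample gradient descent on the MSE loss. A real system would
    train a neural network here; a linear map is an assumption for brevity.
    """
    rng = random.Random(seed)
    d_out, d_in = len(tgt[0]), len(src[0])
    W = [[rng.uniform(-0.1, 0.1) for _ in range(d_in)] for _ in range(d_out)]
    for _ in range(steps):
        for s, t in zip(src, tgt):
            pred = project(W, s)
            for i in range(d_out):
                grad = 2.0 * (pred[i] - t[i]) / d_out  # d(MSE)/d(pred[i])
                for j in range(d_in):
                    W[i][j] -= lr * grad * s[j]
    return W

# Hypothetical toy embeddings of the same three captions from two models.
mllm_out = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # stand-in for MLLM outputs
clip_text = [[0.0, 2.0], [3.0, 0.0], [3.0, 2.0]]  # stand-in for frozen CLIP text outputs

W = align(mllm_out, clip_text)
loss = sum(mse(project(W, s), t) for s, t in zip(mllm_out, clip_text)) / len(mllm_out)
print(f"alignment loss after training: {loss:.2e}")
```

Text serves as the anchor because both models can encode the same caption, so no paired image data is needed for this step. Once the spaces agree on text, anything the MLLM encodes (including interleaved images) is, in principle, readable by a decoder that only ever expected CLIP text embeddings.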

Am I exposing my lack of knowledge about this tech when I say that this seems to me like an early step along the way to a Star Trek-style universal translator? Like, literally translating foreign languages on the fly from one to another?

You're kinda right. I saw this video a little while ago. I've linked it at the relevant part.

Thank you for sharing this talk! Literally sweating as the ramifications started to hit me while watching it. Probably the most profound video I have watched in many years.

PBS did a short video on this too. You might have seen it already.
