this post was submitted on 07 Oct 2023

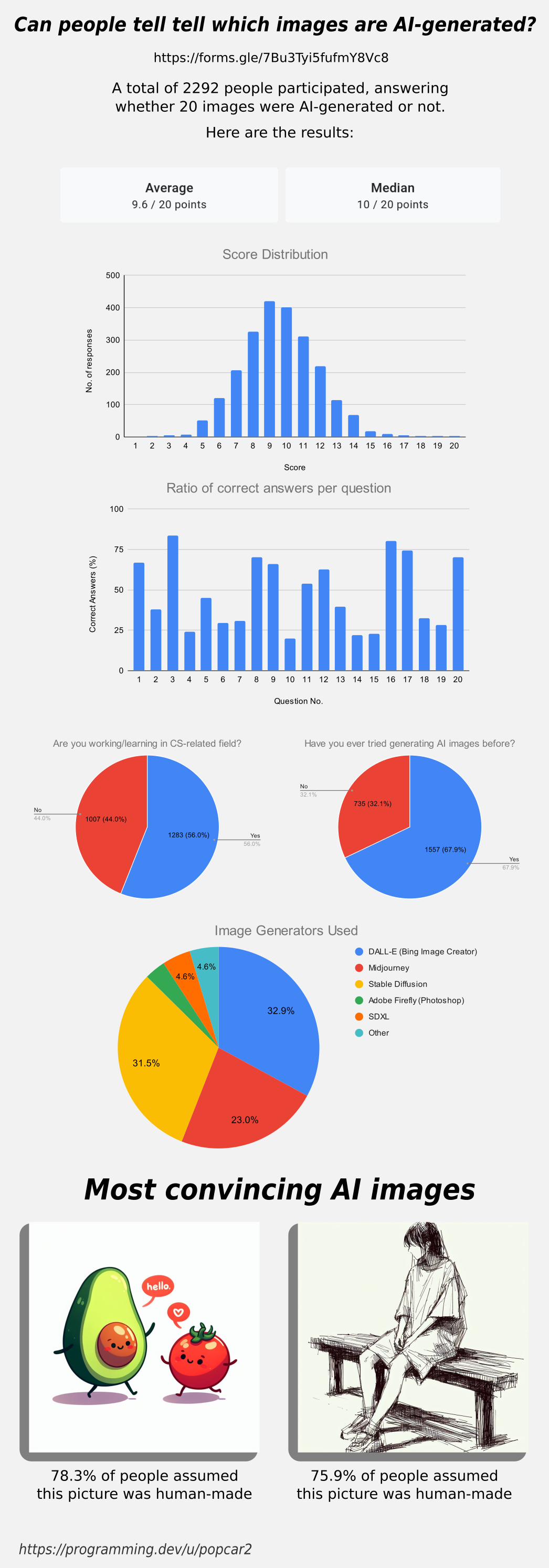

One thing I'd be interested in is getting a self-assessment from each person on how good they believe they were at picking out the fakes.

I already see online comments constantly claiming that they can "totally tell" when an image is AI or a comment was written by ChatGPT, but I suspect that confirmation bias plays a bigger part than most people realize in how much they trust a source (the classic "if I agree with it, it's true; if I don't, then it's a bot/shill/idiot").

With the majority being in CS fields and having used AI image generation before, they would likely be better at picking out the fakes than the average person.

You'd think so, but according to OP they were basically the same, slightly worse actually, which is interesting.

The ones using image generation did slightly better.

I mostly commented to point out that there's no need to go looking for that person who can "totally tell", because they can't.

Even when you know what you are looking for, you are basically pixel hunting for artifacts or other signs that show it's AI, without the image actually looking fake. For example, the avocado one was easy to tell, since avocado-related things have been used as test images ever since DALL-E 1; the https://thispersondoesnotexist.com/ one was obvious due to how it was framed; and some of the landscapes had that noise-vegetation look that AI images tend to have. But none of the images look fake just by themselves. If you didn't specifically look for AI artifacts, it would be impossible to tell the difference, or even to notice that there is anything wrong with the image to begin with.

Right? A self-assessed skill which is never tested is a funny thing anyway. It boils down to "I believe I'm good at it because I believe my belief is correct", which is shady in itself. On top of that, people have an incentive to believe they are good at it, and those who don't believe it probably don't speak up as much. Personally, I believe people lack the competence to make statements like these with any significant meaning.