this post was submitted on 26 Aug 2024

Programmer Humor


I recently held a science slam about this topic! It's a mix of the first computer scientists being mathematicians, who love their abbreviations, and limited screen size, memory and file size. The habit was well justified in the past, but it has been making it harder for people to work together. And the need for abbreviations has completely gone away in the age of autocompletion and language servers.

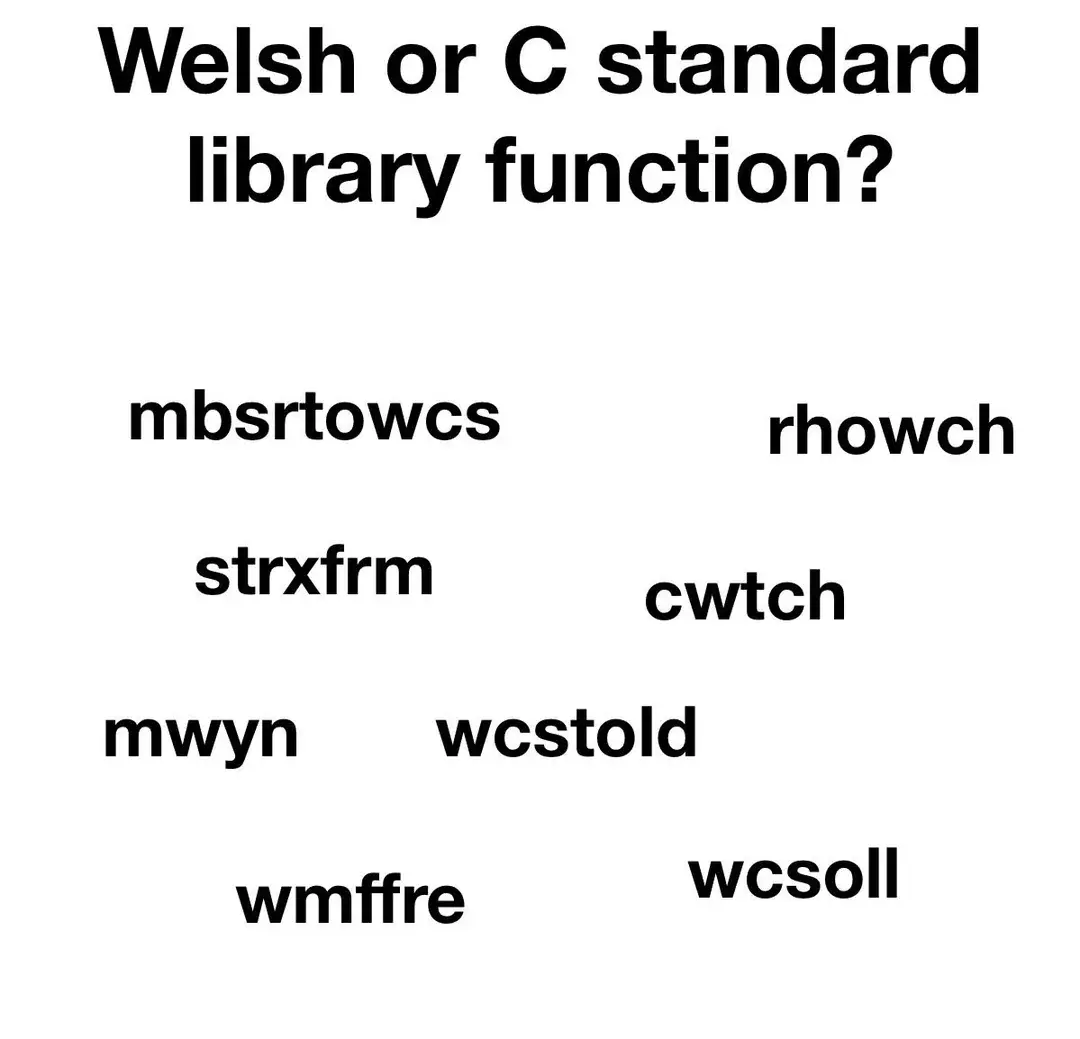

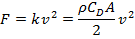

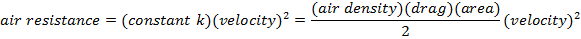

Man, I hate that so much. I swear this was half the reason I struggled with maths and physics, that these guys need to write this:

Rather than this:

At some point, they even collectively decided that not having to write a multiplication dot is more important than being able to use more than a single letter for your variables. Just what the fuck?

Thing is, you usually define all your variables. At least we do in engineering (of the physical variety, rather than software).

Mostly because we can't expect everyone reading the calculation to know them, and because not everyone uses the same symbols.

Not explaining each variable is bad practice, other than for very simple things. (I do expect everyone and their dog reading a process eng calc to know PV=nRT, at a minimum).

Just like (in my opinion) not defining industry-specific abbreviations is also bad practice.

Mathematicians don't do this? Shame on them.

I mean, it was rather physics that was worse in this regard.

Mathematicians do define their variables quite rigorously. Everything is so abstract, at some point you do just need to write down "this thing is a number". Problem with maths folks is rather that they get more creative with their other symbols. So, "this thing is a number" is actually written as "∃x, x ∈ ℝ".

But yeah, in the school/university physics I experienced, it was assumed that you knew that U is voltage, ρ (rho) is density, ω (omega) is angular velocity, etc.

At one point, I had to memorize six pages of formulas and it felt like every letter (Latin, Greek, uppercase, lowercase, some Fraktur for good measure) was a shorthand for something.

What's PV? Asking for my friend's dog.

(Pressure) * (volume) = (# moles) * (gas constant) * (temperature)

The ideal gas law.
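For the programmers in the thread, here is a rough sketch of how the same equation might look in code when every symbol gets a spelled-out name and a unit. The function name, parameter names and the choice of SI units are assumptions made for illustration, not anything from the comment above.

```python
# Molar gas constant R in J/(mol*K), rounded from the exact SI value.
GAS_CONSTANT_J_PER_MOL_K = 8.314462618


def ideal_gas_pressure_pa(amount_mol: float, temperature_k: float, volume_m3: float) -> float:
    """Solve PV = nRT for P and return the pressure in pascals."""
    if volume_m3 <= 0:
        raise ValueError("volume must be positive")
    return amount_mol * GAS_CONSTANT_J_PER_MOL_K * temperature_k / volume_m3


# One mole at 0 degrees Celsius in roughly 22.4 litres should land near 1 atm (101325 Pa).
pressure_pa = ideal_gas_pressure_pa(amount_mol=1.0, temperature_k=273.15, volume_m3=0.022414)
print(round(pressure_pa))
```

Putting the unit in the name does the job that a variable table does in a written calculation: the reader doesn't have to guess whether the temperature is in kelvin or Celsius.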

In another thread I admit I didn't explain my position here well enough. I would only not explain this equation given sufficient context (e.g. I've shown all those variables in a table, and my intended audience is people familiar with basic chemistry, which I'd expect would be everyone reading the report for this particular example, since this is high school chemistry, and the topic of all reports I work on is chemical engineering.)

People can read the conclusions if they're not familiar with chemistry, and for the detail, they're not my intended audience anyway.

Generally I still hold the position that you should define variables as much as possible, unless it's overly cumbersome, given your intended audience would clearly understand anyway.

In context this simple equation is obvious even if you change the symbols, as long as there is sufficient context to draw from.

Try to write the above with pen and ink and then tell me if you can read it back yourself.

Single letters are not a good system, but they were the less bad one.

The bottom is absolutely not more readable, and it's much more difficult to work with.

It's been really holding me back in learning coding. I felt pretty comfortable at first learning JavaScript, but as I got further the code was increasingly hard to look back at and understand, to the point I had to spend a lot of time understanding my own code.

Does it truly matter after the code has been compiled if it has more full words or not?

It matters as soon as a requirement change comes in and you have to change something. Writing a dirty-ass, incomprehensible but working piece of code is OK, as long as no one touches it again.

But as soon as code has to be reworked, worked on together by multiple people, or you just want to understand what you did 2 weeks earlier, code readability becomes important.
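To make that concrete, here is a minimal sketch in Python. Both function names and the compound-interest example are made up for illustration. The two functions do exactly the same arithmetic, so the machine doesn't care which one you write, but only one of them can be changed safely without first digging for context.

```python
def calc(p, r, t):
    # Abbreviated version: it works, but the reader has to guess what p, r and t mean.
    return p * (1 + r) ** t


def compound_balance(principal, annual_interest_rate, years):
    # Same arithmetic with spelled-out names.
    return principal * (1 + annual_interest_rate) ** years


# Identical behaviour: the names only matter to whoever reads the code next.
assert calc(1000, 0.05, 2) == compound_balance(1000, 0.05, 2)
```

If a requirement like "compound monthly instead of yearly" comes in, the second version tells you which parameter to touch; in the first you have to work out whether `t` is years, months or something else entirely.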

I like Uncle Bob's Clean Code (with a grain of salt) for a general idea of what an approach to making code readable could look like. However, it is controversial and, if overdone, can achieve the opposite. I like it as a starting point though.