this post was submitted on 27 Jun 2024

Science Memes

Welcome to c/science_memes @ Mander.xyz!

A place for majestic STEMLORD peacocking, as well as memes about the realities of working in a lab.

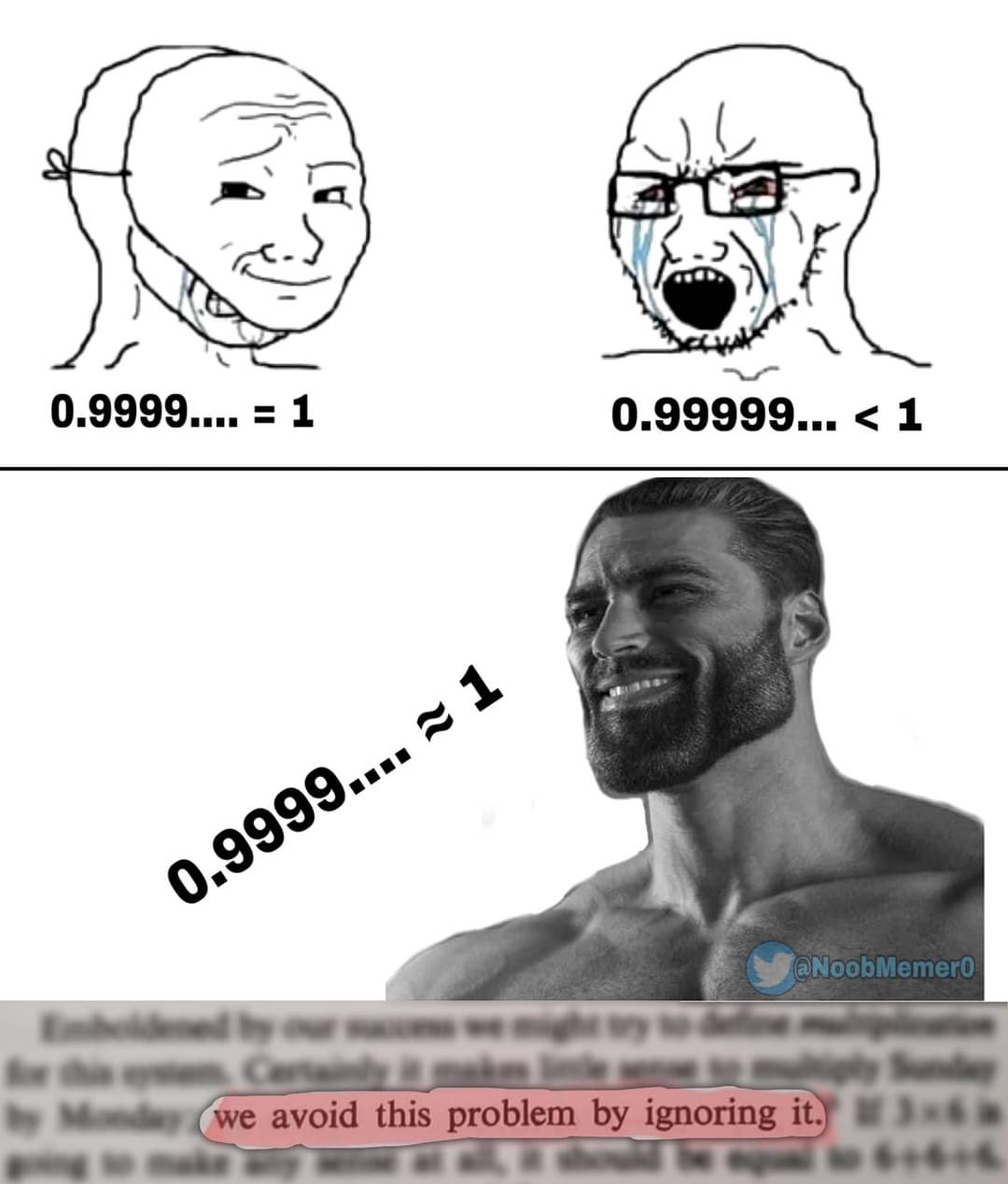

Are we still doing this 0.999.. thing? Why, is it that attractive?

People generally find it odd and unintuitive that it's possible to use decimal notation to represent 1 as .9~ and so this particular thing will never go away. When I was in HS I wowed some of my teachers by doing proofs on the subject, and every so often I see it online. This will continue to be an interesting fact for as long as decimal is used as a canonical notation.

Welp, I see. Still, this is way too much recurring of a pattern.

The rules of decimal notation don't support infinite decimals properly. In order for a 9 to roll over into a 10, the next smallest decimal needs to roll over first, therefore an infinite string of anything will never resolve the needed discrete increment.

Thus, all arguments that 0.999... = 1 must use algebra, limits, or some other logic beyond decimal notation. I consider this a bug with decimals, and 0.999... = 1 to be a workaround.
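The limit argument mentioned above can be checked with exact arithmetic. This is only a minimal sketch, not anything the commenters wrote: the helper names `nines` and `gap` are made up for illustration, and Python's `fractions.Fraction` is used so that no floating-point rounding is involved. It shows that the n-digit truncation 0.99...9 falls short of 1 by exactly 1/10^n, a gap that shrinks below any positive bound as n grows.

```python
from fractions import Fraction


def nines(n: int) -> Fraction:
    """Exact value of 0.99...9 with n nines, i.e. the partial sum of 9/10**k."""
    return sum((Fraction(9, 10**k) for k in range(1, n + 1)), Fraction(0))


def gap(n: int) -> Fraction:
    """Exact distance between 1 and the n-digit truncation."""
    return 1 - nines(n)


# Print a few truncations together with their exact distance from 1.
for n in (1, 2, 5, 10):
    print(n, nines(n), gap(n))
```

Every finite truncation is strictly less than 1; the claim 0.999... = 1 is about the limit of these partial sums, not about any single one of them.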

Please explain this in a way that makes sense to me (I'm an algebraist). I don't know what it would mean for infinite decimals to be supported "properly" or "improperly". Furthermore, I'm not aware of any arguments worth taking seriously that don't use logic, so I'm wondering why that's a criticism of the notation.

If you hear someone shout at a mob "mathematics is witchcraft, therefore, get the pitchforks" I very much recommend taking that argument seriously no matter the logical veracity.

Fair, but that still uses logic, it's just using false premises. Also, more than the argument what I'd be taking seriously is the threat of imminent violence.

But is it a false premise? It certainly passes Occam's razor: "They're witches, they did it" is an eminently simple explanation.

By definition, mathematics isn't witchcraft (most witches I know are pretty bad at math). Also, I think you need to look more deeply into Occam's razor.

By definition, all sufficiently advanced mathematics is isomorphic to witchcraft. (*vaguely gestures at numerology as proof*). Also Occam's razor has never been robust against reductionism: If you are free to reduce "equal explanatory power" to arbitrary small tunnel vision every explanation becomes permissible, and taking, of those, the simplest one probably doesn't match with the holistic view. Or, differently put: I think you need to look more broadly onto Occam's razor :)

Decimal notation is a number system where fractions are accommodated with more numbers representing smaller, more precise parts. It is an extension of the place value system where very large tallies can be expressed in a much simpler form.

One of the core rules of this system is how to handle values larger than the highest digit, and lower than the smallest. If any place goes above 9, set that place to 0 and increment the next place by 1. If any place goes below 0, increment the place by (10) and decrement the next place by one (this operation uses a non-existent digit, which is also a common sticking point).

This is the decimal system as it is taught originally. One of the consequences of its rules is that each digit-wise operation must be performed in order, with a beginning and an end. Thus even getting a repeating decimal is going beyond the system. This is usually taught as special handling, and sometimes as baby's first limit (each step down results in the same digit, thus it's that digit all the way down).

The issue happens when digit-wise calculation is applied to infinite decimals. For most operations, it's fine, but incrementing up can only begin if a digit goes beyond 9, which never happens in the case of 0.999... . Understanding how to resolve this requires ditching the digit-wise method and relearning decimals as a series of terms, and then learning about infinite series. It's a much more robust and applicable method, but a very different method to what decimals are taught as.

Thus I say that the original digit-wise method of decimals has a bug in the case of incrementing infinite sequences. There's really only one number where this is an issue, but telling people they're wrong for using the tools as they've been taught isn't helpful. Much better to say that the tool they're using is limited in this way, then showing the more advanced method.

That's how we teach Newtonian gravity and then expand to relativity. You aren't wrong for applying Newtonian gravity to Mercury, but the tool you're using is limited. All models are wrong, but some are useful.

Said a simpler way:

1/3= 0.333...

1/3 + 1/3 = 0.666... = 0.333... + 0.333...

1/3 + 1/3 + 1/3 = 1 = 0.333... + 0.333... + 0.333...

The quirk you mention about infinite decimals not incrementing properly can be seen by adding whole number fractions together.

I can't help but notice you didn't answer the question.

I'm sure I don't know what you mean by digit-wise operation, because my conceptualization of it renders this statement obviously false. For example, we could apply digit-wise modular addition base 10 to any pair of real numbers and the order we choose to perform this operation in won't matter. I'm pretty sure you're also not including standard multiplication and addition in your definition of "digit-wise" because we can construct algorithms that address many different orders of digits, meaning this statement would also then be false. In fact, as I lay here having just woken up, I'm having a difficult time figuring out an operation where the order that you address the digits in actually matters.

Later, you bring up "incrementing" which has no natural definition in a densely populated set. It seems to me that you came up with a function that relies on the notation we're using (the decimal-increment function, let's call it) rather than the emergent properties of the objects we're working with, noticed that the function doesn't cover the desired domain, and have decided that means the notation is somehow improper. Or maybe you're saying that the reason it's improper is because the advanced techniques for interacting with the system are dissimilar from the understanding imparted by the simple techniques.

In base 10, if we add 1 and 1, we get the next digit, 2.

In base 2, if we add 1 and 1 there is no 2, thus we increment the next place by 1 getting 10.

We can expand this to numbers with more digits: 111(7) + 1 = 112 = 120 = 200 = 1000

In base 10, with A representing 10 in a single digit: 199 + 1 = 19A = 1A0 = 200

We could do this with larger carryover too: 999 + 111 = AAA = AB0 = B10 = 1110. Different orders are possible here: AAA = 10AA = 10B0 = 1110.

The "carry the 1" process only starts when a digit exceeds the existing digits. Thus 192 is not 2Z2, nor is 100 = A0. The whole point of carryover is to keep each digit within the 0-9 range. Furthermore, by only processing individual digits, we can't start carryover in the middle of a chain. 999 doesn't carry over to 100-1, and while 0.999 does equal 1 - 0.001, (1-0.001) isn't a decimal digit. Thus we can't know if any string of 9s will carry over until we find a digit that is already trying to be greater than 9.

This logic is how basic binary adders work, and some variation of this bitwise logic runs in every mechanical computer ever made. It works great with integers. It's when we try to have infinite digits that this method falls apart, and then only in the case of infinite 9s. This is because a carry must start at the smallest digit, and a number with infinite decimals has no smallest digit.

Without changing this logic radically, you can't fix this flaw. Computers use workarounds to speed up arithmetic functions, like carry-lookahead and carry-save, but they still require the smallest digit to be computed before the result of the operation can be known.
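The right-to-left carry process described in this comment can be sketched as a small ripple-carry routine. This is an illustrative sketch only: the function name `add_digits` and the digit alphabet are assumptions, not something from the thread. It works on finite digit strings and always begins at the least significant digit, which is exactly the step that has no counterpart for an infinite expansion.

```python
DIGITS = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ"


def add_digits(a: str, b: str, base: int = 10) -> str:
    """Ripple-carry addition of two non-negative finite digit strings."""
    width = max(len(a), len(b))
    a, b = a.zfill(width), b.zfill(width)  # pad the shorter number with leading zeros
    carry = 0
    out = []
    # Walk from the least significant digit to the most significant one.
    for da, db in zip(reversed(a), reversed(b)):
        total = int(da, base) + int(db, base) + carry
        out.append(DIGITS[total % base])  # digit that stays in this place
        carry = total // base             # 0 or 1, passed to the next place
    if carry:
        out.append("1")
    return "".join(reversed(out))


print(add_digits("999", "111"))        # the 999 + 111 example from the comment
print(add_digits("111", "1", base=2))  # the base-2 example from the comment
```

The loop needs a last digit to start from, so it cannot be run as written on 0.999... or any other non-terminating expansion.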

If I remember, I'll give a formal proof when I have time so long as no one else has done so before me. Simply put, we're not dealing with floats and there's algorithms to add infinite decimals together from the ones place down using back-propagation. Disproving my statement is as simple as providing a pair of real numbers where doing this is impossible.
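For readers wondering what adding "from the ones place down" might look like, here is one possible sketch. It is not the proof promised above, and the name `add_streams` and the restriction to inputs in [0, 1) are assumptions made for illustration. The idea is to hold back a digit until a later column settles whether a carry arrives: a column sum below 9 can never pass a carry on, a column sum above 9 always does, and a column sum of exactly 9 stays undecided.

```python
from itertools import islice, repeat


def add_streams(a, b):
    """Add two numbers in [0, 1) given as iterables of decimal digits.

    Yields the ones digit of the sum first, then the fractional digits.
    """
    pending = 0  # digit held back until the carry into it is known (starts at the ones place)
    nines = 0    # number of undecided columns (sum exactly 9) after the pending digit
    for x, y in zip(a, b):
        s = x + y
        if s == 9:
            nines += 1                   # carry out depends on columns further right
        elif s < 9:
            yield pending                # no carry can reach the held digits
            yield from repeat(9, nines)
            pending, nines = s, 0
        else:
            yield pending + 1            # a carry ripples left through the held 9s
            yield from repeat(0, nines)
            pending, nines = s - 10, 0
    # Finite inputs: the remaining digits are zeros, so no further carry arrives.
    yield pending
    yield from repeat(9, nines)


print(list(add_streams([1, 9, 4, 9], [0, 0, 5, 1])))        # 0.1949 + 0.0051
print(list(islice(add_streams(repeat(3), repeat(3)), 5)))   # 0.333... + 0.333...
```

One limitation of this particular sketch: if every column sums to exactly 9 forever, as in 0.333... + 0.666..., it keeps waiting and never emits a digit, so whether to output 0.999... or 1.000... has to be settled by other means.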

Are those algorithms taught to people in school?

Once again, I have no issue with the math. I just think the commonly taught system of decimal arithmetic is flawed at representing that math. This flaw is why people get hung up on 0.999... = 1.

i don't think any number system can be safe from infinite digits. there's bound to be some number for each one that has to be represented with them. it's not intuitive, but that's because infinity isn't intuitive. that doesn't mean there's a problem there though. also the arguments are so simple i don't understand why anyone would insist that there has to be a difference.

for me the simplest is:

1/3 = 0.333...

so

3×0.333... = 3×1/3

0.999... = 3/3

the problem is it makes my brain hurt

honestly that seems to be the only argument from the people who say it's not equal. at least you're honest about it.

by the way I'm not a mathematically adept person. I'm interested in math but i only understand the simpler things. which is fine. but i don't go around arguing with people about advanced mathematics because I personally don't get it.

the only reason I'm very confident about this issue is that you can see it's equal with middle- or high-school level math, and that's somehow still too much for people who are too confident about there being a magical, infinitely small number between 0.999... and 1.

to be clear I'm not arguing against you or disagreeing the fraction thing demonstrates what you're saying. It just really bothers me when I think about it like my brain will not accept it even though it's right in front of me it's almost like a physical sensation. I think that's what cognitive dissonance is. Fortunately in the real world this has literally never come up so I don't have to engage with it.

no, i know and understand what you mean. as i said in my original comment; it's not intuitive. but if everything in life were intuitive there wouldn't be mind blowing discoveries and revelations... and what kind of sad life is that?

And my argument is that 3 ≠ 0.333...

EDIT: 1/3 ≠ 0.333...

We're taught about the decimal system by manipulating whole number representations of fractions, but when that method fails, we get told that we are wrong.

In chemistry, we're taught about atoms by manipulating little rings of electrons, and when that system fails to explain bond angles and excitation, we're told the model is wrong, but still useful.

This is my issue with the debate. Someone uses decimals as they were taught and everyone piles on saying they're wrong instead of explaining the limitations of systems and why we still use them.

For the record, my favorite demonstration is using different bases.

In base 10: 1/3 ≈ 0.333..., and 0.333... × 3 = 0.999...

In base 12: 1/3 = 0.4, and 0.4 × 3 = 1

The issue only appears if you resort to infinite decimals. If you instead change your base, everything works fine. Of course the only base where every whole fraction fits nicely is unary, and there's some very good reasons we don't use tally marks much anymore, and it has nothing to do with math.
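The base comparison above can be reproduced with ordinary long division on exact fractions. This is a sketch under stated assumptions: the function name `expand` and its return shape (non-repeating prefix, repeating block) are invented for illustration. It tracks remainders, so a remainder of zero means the expansion terminates and a repeated remainder marks the start of the repeating block.

```python
from fractions import Fraction

DIGITS = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ"


def expand(x: Fraction, base: int = 10) -> tuple[str, str]:
    """Fractional digits of x in the given base as (prefix, repeating block)."""
    den = x.denominator
    rem = x.numerator % den  # drop the integer part
    seen = {}                # remainder -> index of the digit it produced
    digits = []
    while rem and rem not in seen:
        seen[rem] = len(digits)
        rem *= base
        digits.append(DIGITS[rem // den])  # next digit of the long division
        rem %= den
    if rem == 0:
        return "".join(digits), ""         # terminating expansion
    start = seen[rem]                      # where the cycle begins
    return "".join(digits[:start]), "".join(digits[start:])


print(expand(Fraction(1, 3), base=10))  # repeating block "3"
print(expand(Fraction(1, 3), base=12))  # terminates as "4"
```

The fraction passed in is the same object in both calls; only the digits used to write it down change with the base.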

you're thinking about this backwards: the decimal notation isn't something that's natural, it's just a way to represent numbers that we invented. 0.333... = 1/3 because that's the way we decided to represent 1/3 in decimals. the problem here isn't that 1 cannot be divided by 3 at all, it's that 10 cannot be divided by 3 and give a whole number. and because we use the decimal system, we have to notate it using infinite repeating numbers but that doesn't change the value of 1/3 or 10/3.

different bases don't change the values either. 12 can be divided by 3 and give a whole number, so we don't need infinite digits. but both 0.333... in decimal and 0.4 in base12 are still 1/3.

there's no need to change the base. we know a third of one is a third and three thirds is one. how you notate it doesn't change this at all.

I'm not saying that math works differently in different bases; I'm using different bases exactly because the values don't change. Using different bases restates the equation without using repeating decimals, thus sidestepping the flaw altogether.

My whole point here is that the decimal system is flawed. It's still useful, but trying to claim it is perfect leads to a conflict with reality. All models are wrong, but some are useful.

you said 1/3 ≠ 0.333... which is false. it is exactly equal. there's no flaw; it's a restriction in notation that is not unique to the decimal system. there's no "conflict with reality", whatever that means. this just sounds like not being able to wrap your head around the concept. but that doesn't make it a flaw.

Let me restate: I am of the opinion that repeating decimals are imperfect representations of the values we use them to represent. This imperfection only matters in the case of 0.999... , but I still consider it a flaw.

I am also of the opinion that focusing on this flaw rather than the incorrectness of the person using it is a better method of teaching.

I accept that 1/3 is exactly equal to the value typically represented by 0.333... , however I do not agree that 0.333... is a perfect representation of that value. That is what I mean by 1/3 ≠ 0.333... , that repeating decimal is not exactly equal to that value.

After reading this, I have decided that I am no longer going to provide a formal proof for my other point, because odds are that you wouldn't understand it and I'm now reasonably confident that anyone who would already understands the fact the proof would've supported.

Ah, typo. 1/3 ≠ 0.333...

It is my opinion that repeating decimals cannot properly represent the values we use them for, and I would rather avoid them entirely (kinda like the meme).

Besides, I have never disagreed with the math, just that we go about correcting people poorly. I have used some basic mathematical arguments to try and intimate how basic arithmetic is a limited system, but this has always been about solving the systemic problem of people getting caught by 0.999... = 1. Math proofs won't add to this conversation, and I think are part of the issue.

Is it possible to have a conversation about math without either fully agreeing or calling the other stupid? Must every argument about even the topic be backed up with proof (a sociological one in this case)? Or did you just want to feel superior?

Your opinion is incorrect as a question of definition.

You had in the previous paragraph.

Yes, however the problem is that you are speaking on matters of which you are clearly ignorant. This isn't a question of different axioms where we can show clearly how two models are incompatible but resolve that both are correct in their own contexts; this is a case where you are entirely, irredeemably wrong, and are simply refusing to correct yourself. I am an algebraist; understanding how two systems differ and compare is my specialty. We know that infinite decimals are capable of representing real numbers because we do so all the time. There. You're wrong and I've shown it via proof by demonstration. QED.

They are just symbols we use to represent abstract concepts; the same way I can inscribe a "1" to represent 3-2={ {} } I can inscribe ".9~" to do the same. The fact that our convention is occasionally confusing is irrelevant to the question; we could have a system whereby each number gets its own unique glyph when it's used and it'd still be a valid way to communicate the ideas. The level of weirdness you can do and still have a valid notational convention goes so far beyond the meager oddities you've been hung up on here. Don't believe me? Look up lambda calculus.