this post was submitted on 22 Jun 2024


Programmer Humor




This application looks fine to me.

Clearly labeled sections.

Local on one side, remote on the other.

Transfer window at the bottom.

No space wasted on anything but function. Is the joke going over my head?

I'm sure there's nothing wrong with the program at all =)

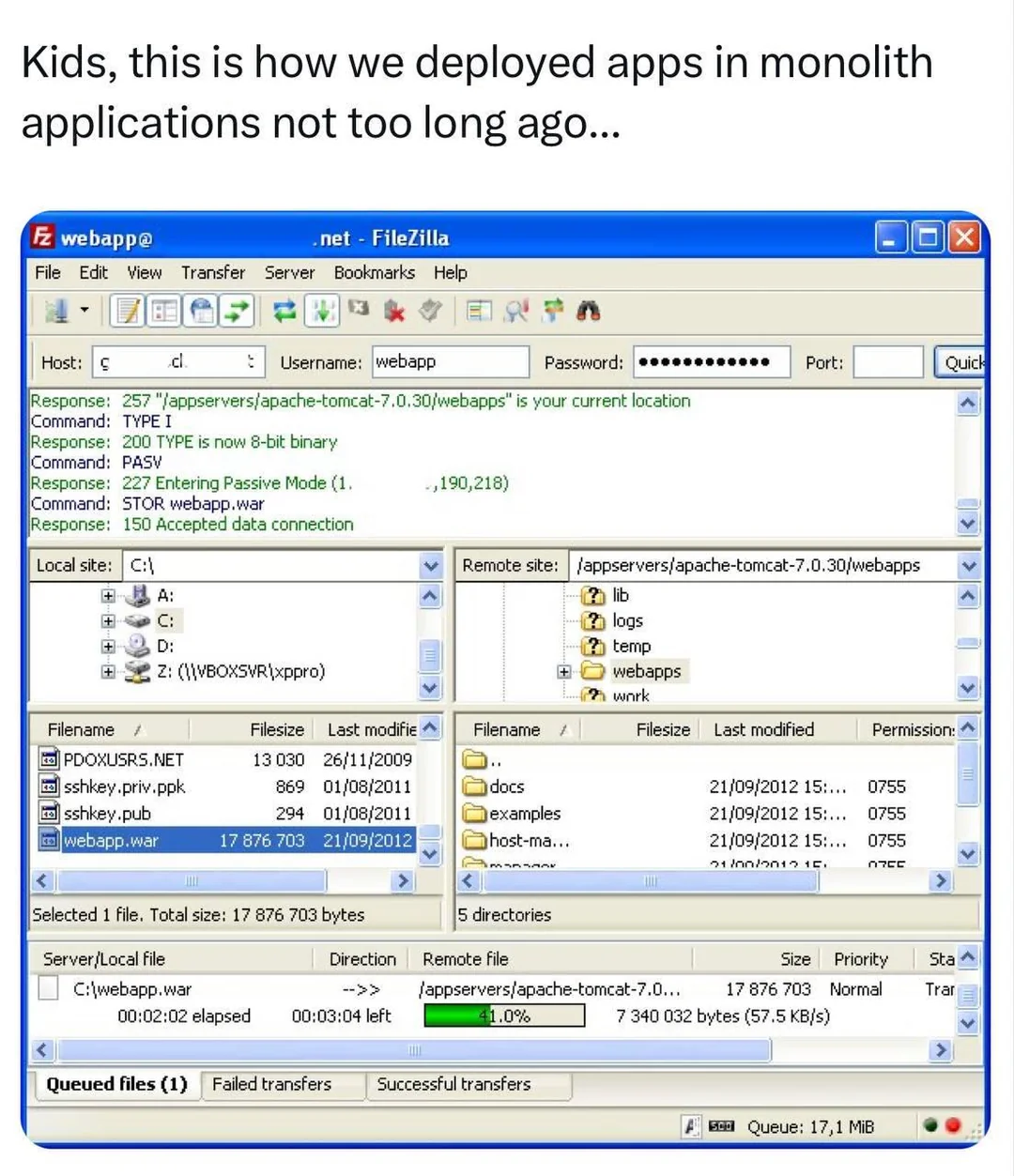

The modern approach to webapp deployment is typically an automated continuous build and deployment pipeline, triggered from source control, that deploys into a staging environment for testing and then promotes the exact same tested artifacts to production. Probably all in the cloud, too.

Compared to that, manually FTPing the files up to the server seems ridiculously antiquated, to the extent that newbies in the biz can't even believe we ever did it that way. But it's genuinely what we were all doing not so long ago.
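A minimal sketch of the "promote the same precise tested artifact" idea, in Python. The function names (`sha256_of`, `deploy`, `promote`) and the use of plain directories as "environments" are hypothetical stand-ins for whatever a real pipeline uses; the only point shown is that the artifact is built once and its checksum is verified before it is allowed into production.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def deploy(artifact: Path, env_dir: Path) -> str:
    """Copy the artifact into an environment directory and return its digest."""
    env_dir.mkdir(parents=True, exist_ok=True)
    target = env_dir / artifact.name
    shutil.copy2(artifact, target)
    return sha256_of(target)


def promote(staging_dir: Path, prod_dir: Path, name: str, tested_digest: str) -> None:
    """Promote only the exact bytes that passed staging; refuse anything else."""
    candidate = staging_dir / name
    if sha256_of(candidate) != tested_digest:
        raise RuntimeError(f"{name} changed since it was tested; refusing to promote")
    deploy(candidate, prod_dir)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        artifact = root / "build" / "app.tar.gz"
        artifact.parent.mkdir()
        artifact.write_bytes(b"built once, deployed twice")
        tested = deploy(artifact, root / "staging")
        # ... automated tests would run against staging here ...
        promote(root / "staging", root / "prod", artifact.name, tested)
        print(sha256_of(root / "prod" / artifact.name) == tested)  # True
```

The checksum comparison is what turns "I think I uploaded the right files" into something the pipeline can actually enforce.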

But ... but I do that, and I'm only 18 :(

Old soul :)

That's probably okay! =) There's some level of pragmatism, depending on the sort of project you're working on.

If it's a big app with lots of users, you should use automation because it helps reliability.

If there are lots of developers, you should use automation because it helps keep everyone organised and avoids human mistakes.

But if it's a small thing with a few devs, or especially a personal project, it might be easier to do without :)

It's perfectly fine for a private page etc., but when you build business software for customers who require 99.9% uptime with severe contractual penalties, it's probably too wonky.

Then switch to something more like scp ASAP? :-)

Nah, it's probably more efficient to .tar.xz it and use netcat.

On a more serious note, I use sftp for everything, and git for actual big (but still personal) projects, but then move files and execute scripts manually.

And I also cloned my old laptop's /dev/sda3 to my new laptop's /dev/main/root (on /dev/mapper/cryptlvm) with netcat over a Gigabit connection. It worked flawlessly. I love Linux and its philosophy.

Ooh, I've never heard of it. Netcat, I mean, 'cause I've heard of Linux 😆.

The File Transfer Protocol is just very antiquated, while scp is simple. Possibly netcat is too :-)

Netcat is basically just a utility to listen on a socket, or connect to one, and send or receive arbitrary data. And since in Linux everything is a file, you can handle every part of your system (e.g. block devices [physical or virtual disks]) like a normal file, i.e. as a plain stream of bytes, so you can just transfer a block device (e.g. /dev/sda3) over a raw socket.
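Roughly what `nc -l PORT > file` on the receiving side and `nc HOST PORT < file` on the sending side do can be sketched with Python's standard `socket` module. This is an illustration, not netcat itself: the helper names are hypothetical, there is no encryption or authentication (acceptable on a trusted LAN, not over the internet), and reading a real block device such as /dev/sda3 would need root and an unmounted or read-only source to get a consistent copy.

```python
import os
import socket
import tempfile
import threading

CHUNK = 64 * 1024  # bytes per read/send; an arbitrary choice


def receive_to_file(server: socket.socket, out_path: str) -> None:
    """Accept one connection and write everything it sends to out_path,
    roughly like `nc -l PORT > out_path`."""
    conn, _addr = server.accept()
    with conn, open(out_path, "wb") as out:
        while True:
            data = conn.recv(CHUNK)
            if not data:  # empty read: the sender closed the connection
                break
            out.write(data)


def send_file(host: str, port: int, in_path: str) -> None:
    """Connect and stream the raw bytes of in_path, roughly like
    `nc HOST PORT < in_path`. A block device path is opened the same way."""
    with socket.create_connection((host, port)) as sock, open(in_path, "rb") as src:
        while True:
            chunk = src.read(CHUNK)
            if not chunk:
                break
            sock.sendall(chunk)


def demo() -> bool:
    """Copy 1 MiB of random bytes over a loopback socket and compare."""
    with tempfile.TemporaryDirectory() as tmp:
        src = os.path.join(tmp, "disk.img")  # stand-in for e.g. /dev/sda3
        dst = os.path.join(tmp, "copy.img")
        payload = os.urandom(1024 * 1024)
        with open(src, "wb") as fh:
            fh.write(payload)
        # Port 0 lets the OS pick a free port; create_server also calls listen().
        with socket.create_server(("127.0.0.1", 0)) as server:
            port = server.getsockname()[1]
            receiver = threading.Thread(target=receive_to_file, args=(server, dst))
            receiver.start()
            send_file("127.0.0.1", port, src)
            receiver.join()
        with open(dst, "rb") as fh:
            return fh.read() == payload


if __name__ == "__main__":
    print(demo())  # True
```

Closing the sending socket is what signals "end of file" to the receiver, which is also how a plain netcat pipe knows the transfer is done.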

Think of this like saying using a scythe to mow your lawn is antiquated. If your lawn is tiny then it doesn't really matter. But we're talking about massive "enterprise scale" lawns lol. You're gonna want something you can drive.

Like anything else, it's good to know how to do it in many different ways, it may help you down the line.

In production, in an oddball environment, I have a Python script that transfers files via FTP to a black box that only exposes FTP.

Another system rebuilds nightly only if code changes, publishing to a QC location. QC gives it a quick review (we are talking website here, QC is "text looks good and nothing looks weird"), clicks a button to approve, and it gets published the following night.

I've had hardware (again, a black-box system) where I was able to leverage git because it was the only command exposed, i.e. the command they forgot to lock down and were using to update their device. Their intent was to sneakernet a thumb drive over to it for updates; I believe in sneaker longevity and wanted to work around that.

So you should know how to navigate your way around in FTP, it's a good thing! But I'd also recommend learning about all the other ways as well, it can help in the future.

(This comment brought to you by "I now feel older for having written it" and "I swear I'm only in my forties.")

Not to rub it in, but "in my forties" could be read as: almost the entirety of the modern web was developed during my adulthood.

It could, but I'm in my early 40s.

I just started early with a TI-99/4A, then a 286, before building my own P133.

So the "World Wide Web!" posters were there for me in middle school.

Still old lol

Promotes/deploys are just different ways of saying file transfer, which is what we see here.

Nothing was stopping people from doing CI/CD in the old days.

Sure, but having a hands-off pipeline for it which runs automatically is where the value is at.

Means that there's predictability and control in what is being done, and once the pipeline is built it's as easy as a single button press to release.

How many times, when doing it manually, have you gone "Oh shit, I just FTP'd the WRONG STUFF up to production!"? I know I have. Or, even worse, you do that and don't notice.

Automation takes a lot of the risk out.

Not to mention the benefits of versioning and being able to rollback! There's something so satisfying about a well set-up CI/CD pipeline.

We did versioning back in the day too. $application copied to $application.old

But was $application.old_final the one to rollback to, or $application.old-final2?!
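The usual cure for `.old_final` vs `.old-final2` is to keep every deploy in its own versioned directory and flip a `current` symlink, the layout popularized by tools such as Capistrano. Below is a minimal POSIX-only sketch, assuming release names that sort chronologically (e.g. zero-padded `v001`, `v002`); the function names are hypothetical. The symlink is swapped with `os.replace`, which on POSIX is a rename over the old link, so `current` never goes missing mid-deploy.

```python
import os
import shutil
import tempfile
from pathlib import Path


def _point_current_at(app_root: Path, release: Path) -> None:
    """Repoint app_root/current at release by renaming a fresh link over it."""
    tmp_link = app_root / "current.tmp"
    if tmp_link.is_symlink():  # leftover from an interrupted deploy
        tmp_link.unlink()
    tmp_link.symlink_to(release, target_is_directory=True)
    os.replace(tmp_link, app_root / "current")  # replaces the old link itself


def deploy_release(build_dir: Path, app_root: Path, version: str) -> Path:
    """Copy a build into releases/<version> and make it the current release."""
    release = app_root / "releases" / version
    shutil.copytree(build_dir, release)  # raises if this version already exists
    _point_current_at(app_root, release)
    return release


def rollback(app_root: Path) -> Path:
    """Repoint `current` at the release just before the active one."""
    names = sorted(p.name for p in (app_root / "releases").iterdir())
    active = (app_root / "current").resolve().name
    idx = names.index(active)
    if idx == 0:
        raise RuntimeError("no older release to roll back to")
    previous = app_root / "releases" / names[idx - 1]
    _point_current_at(app_root, previous)
    return previous


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        app = root / "app"
        for version, body in [("v001", "old"), ("v002", "new")]:
            build = root / f"build-{version}"
            build.mkdir()
            (build / "index.html").write_text(body)
            deploy_release(build, app, version)
        print((app / "current" / "index.html").read_text())  # new
        rollback(app)
        print((app / "current" / "index.html").read_text())  # old
```

The web server would be configured to serve from `current`, so "which one do I roll back to?" becomes "the previous directory in sorted order".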

Joke's on you, my first job was editing files directly in production. It was for a webapp written in Classic ASP. To add a new feature, you made a copy of the current version of the page (e.g. index2_new.asp became index2_new_v2.asp) and developed your feature there by hitting the live page with your web browser. When you were ready to deploy, you modified all the other pages to link to your new page.

Good times!

Yes, exactly that.

Shitty companies did it like that back then, and shitty companies still don't properly use the easy tools they have available for controlled deployment nowadays. So nothing really changed, except that the number of people (and with it, the number of morons) skyrocketed.

I had automated builds out of CVS, with deployment to staging and the option to deploy to production after tests, over 15 years ago.

What is "tests"?

Tests is the industry name for the automated paging when production breaks

Huh? Isn't this something that runs on the server?

It's good practice to run the deployment pipeline on a different server from the application host(s) so that the deployment instances can be kept private, unlike the public app hosts, and therefore can be better protected from external bad actors. It is also good practice because this separation of concerns means the deployment pipeline survives even if the app servers need to be torn down and reprovisioned.

Of course you will need some kind of agent running on the app servers to be able to receive the files, but that might be as simple as an SSH session for file transfer.

That's how you know it's old. It's not caked full of ads, insanely locked down, and trying to sell you a subscription service.

Except that FileZilla does come with bundled adware from their sponsors and they do want you to pay for the pro version. It probably is the shittiest GPL-licensed piece of software I can think of.

https://en.wikipedia.org/wiki/FileZilla#Bundled_adware_issues

Aw that sucks

It even has questionably-helpful mysterious blinky lights at the bottom right which may or may not do anything useful.

The joke isn't the program itself, it's the process of deploying a website to servers.

The large .war (Web ARchive) being uploaded monolithically is the archaic way of deploying a web app. Modern tools can be much better.

Of course, it's going to be difficult to find a modern application where each individually deployed component isn't at least 7MB of compiled source (and 50-200MB of container), compared to this single 7MB .war that contained everything.

And then confused screaming about all the security holes.