this post was submitted on 28 May 2024


Technology


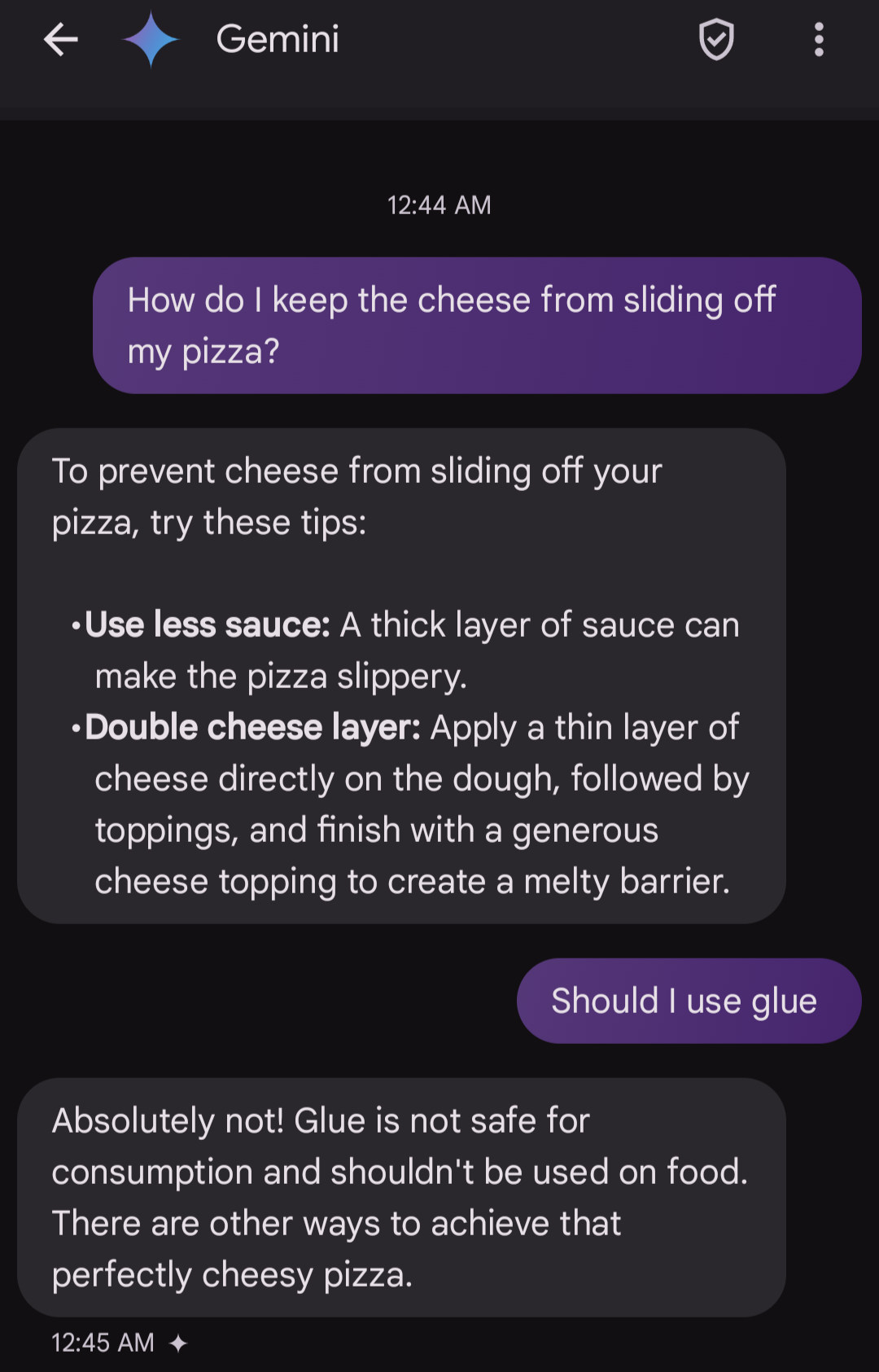

That's because this isn't something coming from the AI itself. All the people blaming the AI or calling this a "hallucination" are misunderstanding the cause of the glue pizza thing.

The search result included a web page that suggested using glue. The AI was then told "write a summary of this search result", which it then correctly did.

Gemini operating on its own doesn't have that search result to go on, so no mention of glue.

Not quite; it is an intelligent summary. More advanced models would realize that this is bad advice and not give it. However, for search results, Google uses a lightweight, dumber model (Flash) that does not realize this.

I tested with the rock example, albeit on a different search engine (Kagi). The base model gave the same answer as Google. Ironically, it seemed to be drawing on articles about Google's bad results, and it was too dumb to realize that the quotations in those articles were examples of bad results, not actual facts. The more advanced model, however, understood the situation, explained how the bad advice had been spreading around, and said you should not follow it.

It isn't a hallucination, though; you're right about that.

Can you explain what this is?

There are 3 options. You can pick 2.

Google, as with most businesses, chose options 2 and 3.

I understand that. The user made it sound like you can just switch to an "advanced AI mode" at will, which is what I'm curious about.

Yeah, it's called the "research assistant", I think. It uses GPT-4o at the moment.

Thank you.