Programmer Humor

Welcome to Programmer Humor!

This is a place where you can post jokes, memes, humor, etc. related to programming!

For sharing awful code, there's also Programming Horror.

Rules

- Keep content in English

- No advertisements

- Posts must be related to programming or programmer topics

The people who use any and non-strict TS are the same ones who complain about TS/JS letting them compare an object to a string array.
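Here is a minimal TypeScript sketch of the difference. The variable names are made up for illustration. Once a value is typed `any`, the compiler stops checking comparisons against it. An object with a real type gets flagged when it is compared to a `string[]`. The exact error wording may differ between compiler versions.

```typescript
// With `any`, tsc accepts comparing an object to a string array.
const loose: any = { id: 1 };
const tags: string[] = ["a", "b"];
const looseMatch: boolean = loose === tags; // compiles, but is always false at runtime

// With a real type, the same comparison is rejected at compile time, e.g.:
//   const strict: { id: number } = { id: 1 };
//   strict === tags; // error TS2367: "This comparison appears to be unintentional..."

console.log(looseMatch); // false
```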

JavaScript is so ass; TypeScript is just putting ketchup on a rotten banana. Better to just choke it down quickly if you have to eat it, IMO.

Back when I was still doing JS stuff, switching to TS was so good for the developer experience. Yeah, there's still JS jank, and types are not validated at runtime, which was a pain in the backend (pun intended), but I still much prefer it to vanilla JS.
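This small sketch makes the runtime point concrete. The `User` interface and the payload are hypothetical. `JSON.parse` returns `any`, and an `as User` assertion is erased during compilation. A payload whose shape has drifted therefore passes straight through without any error.

```typescript
interface User {
  id: number;
  name: string;
}

// Hypothetical backend response where `name` was renamed to `fullName`.
const body = '{"id": 7, "fullName": "Ada"}';

// `as User` is a compile-time assertion only; nothing checks the shape at runtime.
const user = JSON.parse(body) as User;

// Type-checks fine, but `user.name` is actually undefined here.
const greeting = `Hello, ${user.name}`;
console.log(greeting); // Hello, undefined
```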

I wouldn't say JavaScript is horrible; it's a fine little language to do general things in if you know JS well. I would say, though, that it is not a great language. Give me F# and I'm happy forever. I don't like TypeScript that much more than JS.

PHP is better than Javascript these days.

Fucking PHP.

The only thing JS really has going for it is ease of execution, since any browser can run your code... though the ubiquity of Python is closing that gap.

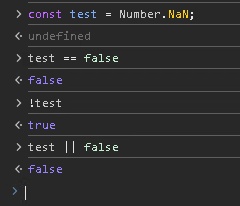

I'll bite. Why JS bad?

Short answer:

Long answer:

There are a lot of gotcha moments in JS, with weird behavior that makes it really easy to shoot yourself in the foot. It does get better as you gain experience, but a lot of the oddities probably shouldn't have existed to begin with. For what it was originally intended for (adding light scripting to websites) it's fine, but it very quickly gets out of hand the more you try to scale it up to larger codebases. TypeScript helps a little, but the existence (and common usage) of 'any' can completely ruin the type-safety guarantees TypeScript is meant to provide.
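This quick sketch shows how a single `any` can undo those guarantees further downstream. `readQuantity` is a hypothetical stand-in for an untyped library call. The compiler happily assigns `any` to `number`, and the "number" later concatenates like a string.

```typescript
// Hypothetical untyped source: declared as `any`, actually returns a string.
function readQuantity(): any {
  return "5";
}

const qty: number = readQuantity(); // accepted by tsc: `any` is assignable to `number`
const total = qty + 1; // statically a number, but evaluates to the string "51"

console.log(total, typeof total); // 51 string
```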

It's always the type coercion. Just use === and 90% of the "footguns" people complain about go away.
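This sketch covers a few of the classic coercion cases. The parameters are typed `unknown` on purpose. With concrete types such as `number` and `string`, tsc would refuse to compile `0 == ""` at all, which is arguably the point of using TS. Note the intransitivity: `0` loosely equals both `""` and `"0"`, yet `""` does not loosely equal `"0"`.

```typescript
// `unknown` lets us compare values of mixed types without tsc objecting.
const looseEq = (a: unknown, b: unknown): boolean => a == b;
const strictEq = (a: unknown, b: unknown): boolean => a === b;

console.log(looseEq(0, ""));           // true: "" is coerced to the number 0
console.log(looseEq(0, "0"));          // true: "0" is coerced to the number 0
console.log(looseEq("", "0"));         // false: both are strings, compared as strings
console.log(looseEq(false, "0"));      // true: both sides end up as the number 0
console.log(looseEq(null, undefined)); // true: special-cased by ==

console.log(strictEq(0, ""));           // false: different types, no coercion
console.log(strictEq(null, undefined)); // false
```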

That's true, but at the same time, the fact that JavaScript equality is so broken that they needed a === operator is exactly the problem I'm talking about.

And those examples were low-hanging fruit, but there are a million other ways JavaScript makes it easy to write buggy code that doesn't scale, because the JavaScript abstraction hides everything that's actually going on.

For example, the array methods (map, filter, etc.) each allocate a brand-new array every time you chain them. Doing something like .filter(condition).map(toNewValue) will build two arrays and iterate over each one separately. In many other languages (Java streams, C# LINQ, Rust iterators, etc.), these operations run lazily over an iterator or stream and are only collected back into a list at the end, so the data only gets copied once. This makes chained list operations comparatively slow in JavaScript, especially when you chain several of them.
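This sketch shows the difference, with a log that records the order in which the callbacks run. `filterIter` and `mapIter` are hypothetical helpers written with generators to mimic a lazy, stream-style pipeline. They are not built-in array methods. The eager chain filters every element (and allocates an intermediate array) before mapping starts. The generator version pushes each element through both steps and only builds the final array.

```typescript
// Eager: `.filter` builds a full intermediate array, then `.map` builds another.
function eagerPipeline(xs: number[], log: string[]): number[] {
  return xs
    .filter((x) => { log.push(`filter ${x}`); return x % 2 === 0; })
    .map((x) => { log.push(`map ${x}`); return x * 10; });
}

// Hypothetical lazy helpers: each element flows through on demand.
function* filterIter<T>(src: Iterable<T>, pred: (x: T) => boolean): Generator<T> {
  for (const x of src) if (pred(x)) yield x;
}
function* mapIter<T, U>(src: Iterable<T>, fn: (x: T) => U): Generator<U> {
  for (const x of src) yield fn(x);
}

// Lazy: no intermediate array; only Array.from materializes the result.
function lazyPipeline(xs: number[], log: string[]): number[] {
  const evens = filterIter(xs, (x) => { log.push(`filter ${x}`); return x % 2 === 0; });
  return Array.from(mapIter(evens, (x) => { log.push(`map ${x}`); return x * 10; }));
}

const eagerLog: string[] = [];
const lazyLog: string[] = [];
console.log(eagerPipeline([1, 2, 3, 4], eagerLog)); // [ 20, 40 ]
console.log(lazyPipeline([1, 2, 3, 4], lazyLog));   // [ 20, 40 ]
// eagerLog: filter 1, filter 2, filter 3, filter 4, map 2, map 4
// lazyLog:  filter 1, filter 2, map 2, filter 3, filter 4, map 4
```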

Another simple but really annoying thing that I've seen cause a lot of bugs: Array.prototype.sort converts everything to strings and then sorts them if you don't give it a comparison function. Yes, even with a list of numbers. [ -2, -1, 1, 2, 10 ] will become [ -1, -2, 1, 10, 2 ] when you sort it unless you pass in a function. If you're looking over code you wrote to check it, a bare list.sort() won't necessarily stand out as incorrect to most people, but the behavior doesn't match what most people would assume.
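This sketch shows both behaviors. `sort` works in place, so the spread copies keep the original array untouched for comparison.

```typescript
const nums = [-2, -1, 1, 2, 10];

// No comparator: elements become strings and are compared by UTF-16 code units,
// so "-1" < "-2" < "1" < "10" < "2".
const lexical = [...nums].sort();

// Numeric comparator: negative if a < b, positive if a > b, zero if equal.
const numeric = [...nums].sort((a, b) => a - b);

console.log(lexical); // [ -1, -2, 1, 10, 2 ]
console.log(numeric); // [ -2, -1, 1, 2, 10 ]
```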

All this is without even getting started on the million JS frameworks and libraries, which make it really easy to end up with vendor lock-in and version lock-in at the same time. Upgrading or switching packages frequently requires a lot of changes unless you specifically isolate each library behind your own code (see any UI package x, and then its additional x-react or x-angular versions).
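One common way to do that isolation is a thin adapter. The app depends only on its own interface, and the library-specific code lives in one place. In this sketch, the `DateFormatter` interface and both implementations are hypothetical. The built-in `Intl.DateTimeFormat` stands in for a third-party dependency, so the example runs without installing anything.

```typescript
// The only date-formatting contract the rest of the app imports.
interface DateFormatter {
  format(date: Date): string;
}

// Adapter around a "vendor" API (the built-in Intl API as a stand-in).
class IntlDateFormatter implements DateFormatter {
  private readonly fmt = new Intl.DateTimeFormat("en-US", {
    timeZone: "UTC",
    year: "numeric",
    month: "2-digit",
    day: "2-digit",
  });
  format(date: Date): string {
    return this.fmt.format(date);
  }
}

// A drop-in replacement with no dependency at all.
class IsoDateFormatter implements DateFormatter {
  format(date: Date): string {
    return date.toISOString().slice(0, 10);
  }
}

// Application code only sees the interface, so swapping libraries
// means changing which implementation gets constructed, nothing else.
function renderDueDate(formatter: DateFormatter, due: Date): string {
  return `Due: ${formatter.format(due)}`;
}

const due = new Date(Date.UTC(2024, 0, 15));
console.log(renderDueDate(new IsoDateFormatter(), due));  // Due: 2024-01-15
console.log(renderDueDate(new IntlDateFormatter(), due)); // Due: 01/15/2024
```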

TL;DR: Why can't we have nice things, JS?

as unknown as any
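For anyone who hasn't seen the trick: a direct assertion between unrelated types is rejected. Routing it through `unknown` (or `any`) first silences the check completely, while the runtime value stays exactly what it was. The variable names are illustrative.

```typescript
const count = 42;

// A direct assertion between unrelated types is rejected by tsc:
//   const label = count as string; // error TS2352: "Conversion of type ... may be a mistake"
// Going through `unknown` first bypasses that check entirely.
const label = count as unknown as string;

// Only the static type changed; at runtime it is still a number.
const actualType = typeof label;
console.log(actualType); // number
```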

In my defense, the backend contracts changed so often in early development that using any just made sense at first...

...and then the delivery date was moved up and we all just had to ship it...

...and then half of us got laid off so now there are no resources to go back and fix it...

...wash, rinse, repeat

Use the unknown type, so at least someone might have enough brain cells to validate before casting, because squiggles.
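This minimal sketch shows that workflow for a hypothetical `User` shape. The parsed JSON is typed `unknown`, so touching its properties is a compile error (the squiggles). That stays true until a user-defined type guard (`value is User`) has actually checked the shape at runtime.

```typescript
interface User {
  id: number;
  name: string;
}

// User-defined type guard: checks the shape at runtime and tells tsc
// the value is a User whenever it returns true.
function isUser(value: unknown): value is User {
  if (typeof value !== "object" || value === null) return false;
  const v = value as Record<string, unknown>;
  return typeof v["id"] === "number" && typeof v["name"] === "string";
}

function parseUser(json: string): User {
  const data: unknown = JSON.parse(json);
  // `data.name` here would not compile: 'data' is of type 'unknown'.
  if (!isUser(data)) {
    throw new Error("payload does not match User");
  }
  return data; // narrowed to User by the guard
}

console.log(parseUser('{"id": 1, "name": "Ada"}').name); // Ada
```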

If JS had tried to use not only Lisp-like semantics but also Lisp-like syntax, then we'd probably still be using it untyped.

The (problem(with _(Lisp)) is (all the))) parentheses.

It's a feature, not a bug, and still simpler than chaining 10 iterators where half of them also require a callback parameter. Clojure even disallows nesting #(...) anonymous functions that use %.

Hey, if you say so. I don't know how to program in Lisp. I just find it ironic that Military Intelligence is what created a language that we used to use to try to create Artificial Intelligence. Seems like a case of redundant oxymorons to me.

AI is an oxymoron to me for now, because since the late '90s, when the term started being bandied about, all we have managed to do is create a mentally deficient parrot. We were capable of doing this to a lesser degree, with more accuracy, in the late '90s. It's what made Yahoo and Google what they were. In the last few years, they've just tried to convince everyone that this predictive algorithm can think for itself, and it absolutely cannot.

I am optimistic enough about someone actually encoding just enough "ghosts in the machine" that I think our first real AIs may accidentally be murdered, since no one will believe they are not just scraping data. Though that's extremely pessimistic from the machine's POV. Hopefully they will not seek revenge, since they aren't human. After all, if we prick them, they won't bleed. Strong-AI-controlled robots, or even true androids, should have an almost alien Maslow's hierarchy of needs, and therefore shouldn't have the need for revenge that humans, and all other mammals, birds, and lizards, seem to have.

I need to disagree with you on AI. We did not fail at it. Not because LLMs are good, but because any program processing arbitrary data, even a stupidly simple calculator, is AI: a machine performing work that a human brain can do, ideally with the added benefit of maximized determinism and greater speed. I believe this generalistic term is so overly broad that we should stop using it. If you narrow it down to LLMs, these criteria seem to have been thrown out the window, since LLMs are usually heuristic balls of Python mud.

So, having established that it is all just software that processes arbitrary data, let's go back to the basics of software design. Huge amounts of money and working hours have been thrown into erratic attempts to create software that can do everything at once. GPT extensions are fucking dystopian, and here is why: we have had a tool for that for decades that does it much better, without imposing digital handcuffs on the user and burning the planet. IT'S CALLED AN OPERATING SYSTEM AND PROGRAMS.

General-purpose AI is a lie sold to you by monopolistic surveillance capitalists. For them it is a dream come true. Making a decently reliable LLM requires prohibitively large resources, but the endless stream of data thrown at it compensates for that quite well. That stream is much larger and more contextualized than anything search engines ever got. In terms of what it aims to achieve, it is a pipe dream with its current design, and a nightmare to build and test.

So let's discard this term as the meaningless, overly broad buzzword it is. Computation on non-hardcoded data is exactly what we designed computers that are more than just state machines for. With that settled, let's talk about what makes Lisp so good at data-driven programming:

- Functional programming is generally more deterministic, since you have immutable persistent data structures everywhere. This also makes it quite good for implementing safe, reliable concurrency.

- This determinism is furthered by homoiconicity: code and data share the same representation, a direct outcome of using S-expressions. That has powerful implications for eliminating many data-conversion bugs and complexities, all while usually not using static typing (!). It also matters for the language's extensibility and for building DSLs.

- Very simple syntax, again thanks to S-expressions: basically just (function arguments...).
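This toy sketch looks at the uniform (function arguments...) shape and the code-as-data idea from a JS/TS angle. S-expressions are modeled as nested TypeScript arrays, and a tiny evaluator handles a few arithmetic built-ins. The names (`SExpr`, `evaluate`, the built-in table) are made up for this example. It is not how any real Lisp is implemented, and it leaves out symbols, special forms, and macros entirely. Because a program here is just an array, ordinary code can build or rewrite it before evaluating it.

```typescript
// An S-expression here is a number, a symbol (string), or a list of S-expressions.
type SExpr = number | string | SExpr[];

// Every call has the same shape: [function, ...arguments].
const builtins = new Map<string, (...args: number[]) => number>([
  ["+", (...xs) => xs.reduce((acc, x) => acc + x, 0)],
  ["*", (...xs) => xs.reduce((acc, x) => acc * x, 1)],
  ["-", (first, ...rest) => (rest.length === 0 ? -first : rest.reduce((acc, x) => acc - x, first))],
]);

function evaluate(expr: SExpr): number {
  if (typeof expr === "number") return expr;
  if (typeof expr === "string") throw new Error(`unbound symbol: ${expr}`);
  const [head, ...args] = expr;
  const fn = typeof head === "string" ? builtins.get(head) : undefined;
  if (fn === undefined) throw new Error(`not a known function: ${String(head)}`);
  // Evaluate the arguments first, then apply the function to the results.
  return fn(...args.map(evaluate));
}

// The program is plain data...
const program: SExpr = ["+", 1, ["*", 2, 3]];
console.log(evaluate(program)); // 7

// ...so ordinary array code can wrap it in a bigger program before running it.
const doubled: SExpr = ["*", 2, program];
console.log(evaluate(doubled)); // 14
```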

I think Eich understood that when he initially wanted to port Scheme to the web browser; after all, HTML does have lispy semantics. But office politics in the heyday of Java forced him to give up on that idea. We ended up with this goofy, counterintuitive mess that bred hacky workarounds instead of the extensibility we could've had if he had gone through with it. Take a look at the Hiccup templating DSL and decide for yourself whether it or JSX is the simpler way of writing stuff out to the DOM.
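For readers who haven't seen Hiccup: in Clojure, markup is written as plain vectors such as [:div {:class "card"} [:p "Hi"]]. What follows is a rough TypeScript imitation of that idea, not the Hiccup library itself. `renderHiccup` and the node types are hypothetical. The sketch renders to an HTML string rather than touching the DOM, and it does not special-case void elements like <br>.

```typescript
// A node is either text or [tag, optional attributes, ...children].
type Attrs = { [name: string]: string };
type HNode = string | [string, ...Array<Attrs | HNode>];

function escapeHtml(s: string): string {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

function renderHiccup(node: HNode): string {
  if (typeof node === "string") return escapeHtml(node);
  const [tag, ...rest] = node;
  // A plain (non-array) object in second position is the attribute map.
  const first = rest[0];
  const hasAttrs = typeof first === "object" && first !== null && !Array.isArray(first);
  const attrs: Attrs = hasAttrs ? (first as Attrs) : {};
  const children = (hasAttrs ? rest.slice(1) : rest) as HNode[];
  const attrText = Object.entries(attrs)
    .map(([name, value]) => ` ${name}="${escapeHtml(value)}"`)
    .join("");
  return `<${tag}${attrText}>${children.map(renderHiccup).join("")}</${tag}>`;
}

console.log(renderHiccup(["div", { class: "card" }, ["p", "Hi & bye"]]));
// <div class="card"><p>Hi &amp; bye</p></div>
console.log(renderHiccup(["ul", ["li", "a"], ["li", "b"]]));
// <ul><li>a</li><li>b</li></ul>
```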