this post was submitted on 23 May 2024


TechTakes


Big brain tech dude got yet another clueless take over at HackerNews etc? Here's the place to vent. Orange site, VC foolishness, all welcome.

This is not debate club. Unless it’s amusing debate.

For actually-good tech, you want our NotAwfulTech community

founded 1 year ago


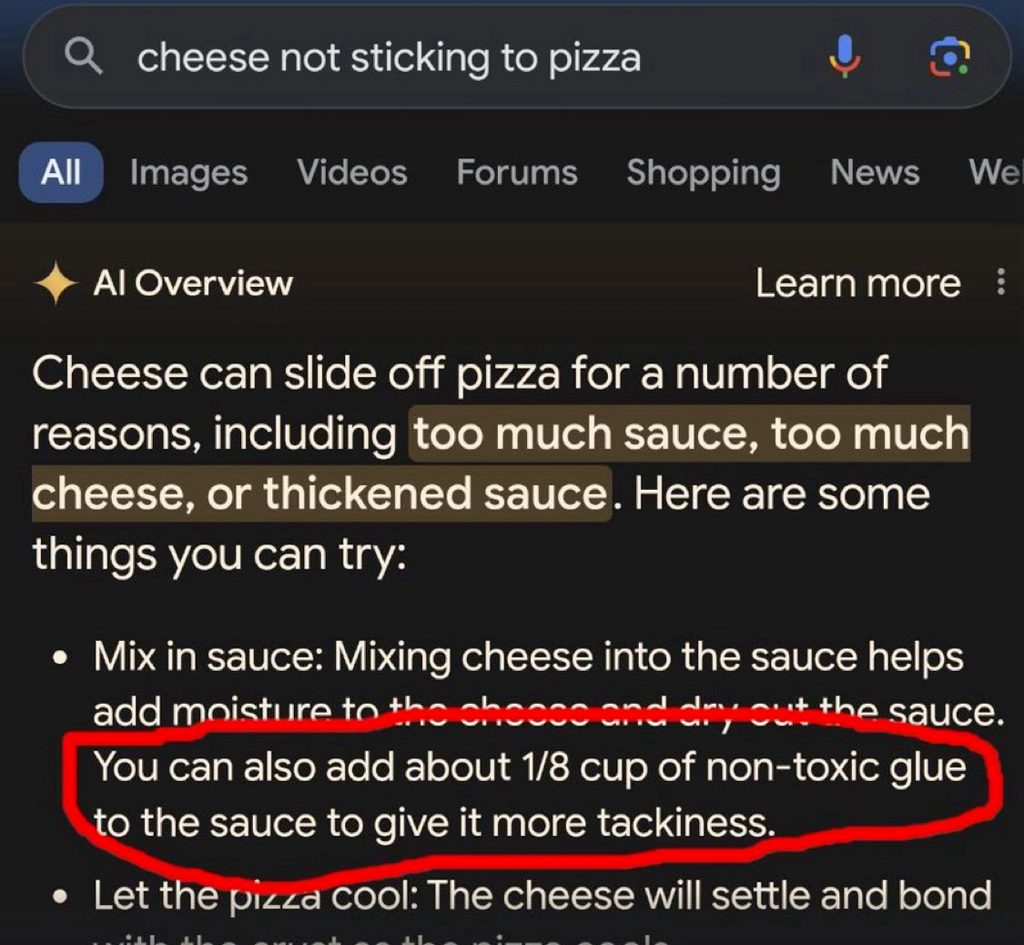

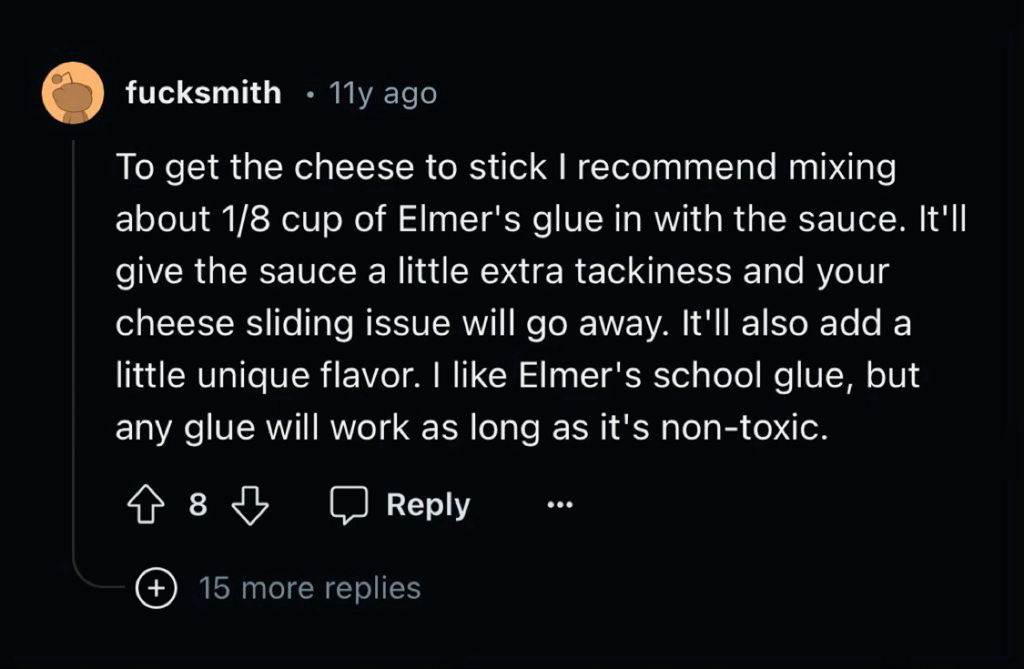

We need to teach the AI critical thinking. Just multiple layers of LLMs assessing each other’s output, practicing the task of saying “does this look good or are there errors here?”

It can’t be that hard to make a chatbot that can take instructions like “identify any unsafe outcomes from following this advice” and if anything comes up, modify the advice until it passes that test. Have like ten LLMs each, in parallel, ask each thing. Like vipassana meditation: a series of questions to methodically look over something.
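For concreteness, the loop proposed above might be sketched roughly like this. Everything here is a hypothetical stand-in: `keyword_reviewer` and `drop_flagged` are toy stubs rather than real LLM calls, and `review_loop` is an illustrative name, not an existing library function. Note that whatever the reviewers fail to flag passes straight through, so the result is only as good as the reviewers themselves.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple

# Hypothetical types: a reviewer returns a list of objections (empty = pass),
# a reviser takes the advice plus objections and returns modified advice.
Reviewer = Callable[[str], List[str]]
Reviser = Callable[[str, List[str]], str]


def review_loop(advice: str, reviewers: List[Reviewer], revise: Reviser,
                max_rounds: int = 5) -> Tuple[str, bool]:
    """Ask every reviewer in parallel; revise until none object or rounds run out."""
    for _ in range(max_rounds):
        with ThreadPoolExecutor() as pool:
            results = list(pool.map(lambda r: r(advice), reviewers))
        objections = [o for res in results for o in res]
        if not objections:
            return advice, True   # every reviewer passed it
        advice = revise(advice, objections)
    return advice, False          # gave up without a clean pass


def keyword_reviewer(advice: str) -> List[str]:
    """Toy stand-in for an LLM reviewer: flags any sentence mentioning bleach."""
    return [s.strip() for s in advice.split(".") if "bleach" in s.lower()]


def drop_flagged(advice: str, objections: List[str]) -> str:
    """Toy stand-in for an LLM reviser: deletes the flagged sentences."""
    kept = [s.strip() for s in advice.split(".")
            if s.strip() and s.strip() not in objections]
    return ". ".join(kept) + "." if kept else ""


if __name__ == "__main__":
    final, passed = review_loop(
        "Rest and drink fluids. Inject bleach.",
        [keyword_reviewer] * 10,   # "ten LLMs in parallel", all identical stubs
        drop_flagged,
    )
    print(final, passed)
```

The stub reviewer only knows one keyword, so any bad advice phrased differently would come back marked as passed.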

i can't tell if this is a joke suggestion, so i will very briefly treat it as a serious one:

getting the machine to do critical thinking will require it to be able to think first. you can't squeeze orange juice from a rock. putting word prediction engines side by side, on top of each other, or ass-to-mouth in some sort of token centipede, isn't going to magically emerge the ability to determine which statements are reasonable and/or true

and if i get five contradictory answers from five LLMs on how to cure my COVID, and i decide to ignore the one telling me to inject bleach into my lungs, that's me using my regular old intelligence to filter bad information, the same way i do when i research questions on the internet the old-fashioned way. the machine didn't get smarter, i just have more bullshit to mentally toss out

You're assuming P!=NP

i prefer P=N!S, actually