This isn't a sneer, more of a meta take. I'm sitting in a waiting room and am a bit bored, so I'm writing this from memory; there will be no exact quotes.

A recent thread mentioning "No Logo", in combination with a comment in one of the mega-threads that pleaded for us to be more positive about AI, got me thinking. I think that in our late-stage capitalism it's the consumer's duty to be relentlessly negative, until proven otherwise.

"No Logo" contained a history of capitalism and how we got from a goods based industrial capitalism to a brand based one. I would argue that "No Logo" was written in the end of a longer period that contained both of these, the period of profit driven capital allocation. Profit, as everyone remembers from basic marxism, is the surplus value the capitalist acquire through paying less for labour and resources then the goods (or services, but Marx focused on goods) are sold for. Profits build capital, allowing the capitalist to accrue more and more capital and power.

Even in Marx's time, it was not only profits that built capital; new capital could also be had from banks, jump-starting the business in exchange for future profits. This is how capital was still allocated in the 1990s, when "No Logo" was written, even if the profits had shifted from the good to the brand. In this model, one could argue about ethical consumption, but that is no longer the world we live in, so I am just gonna leave it there.

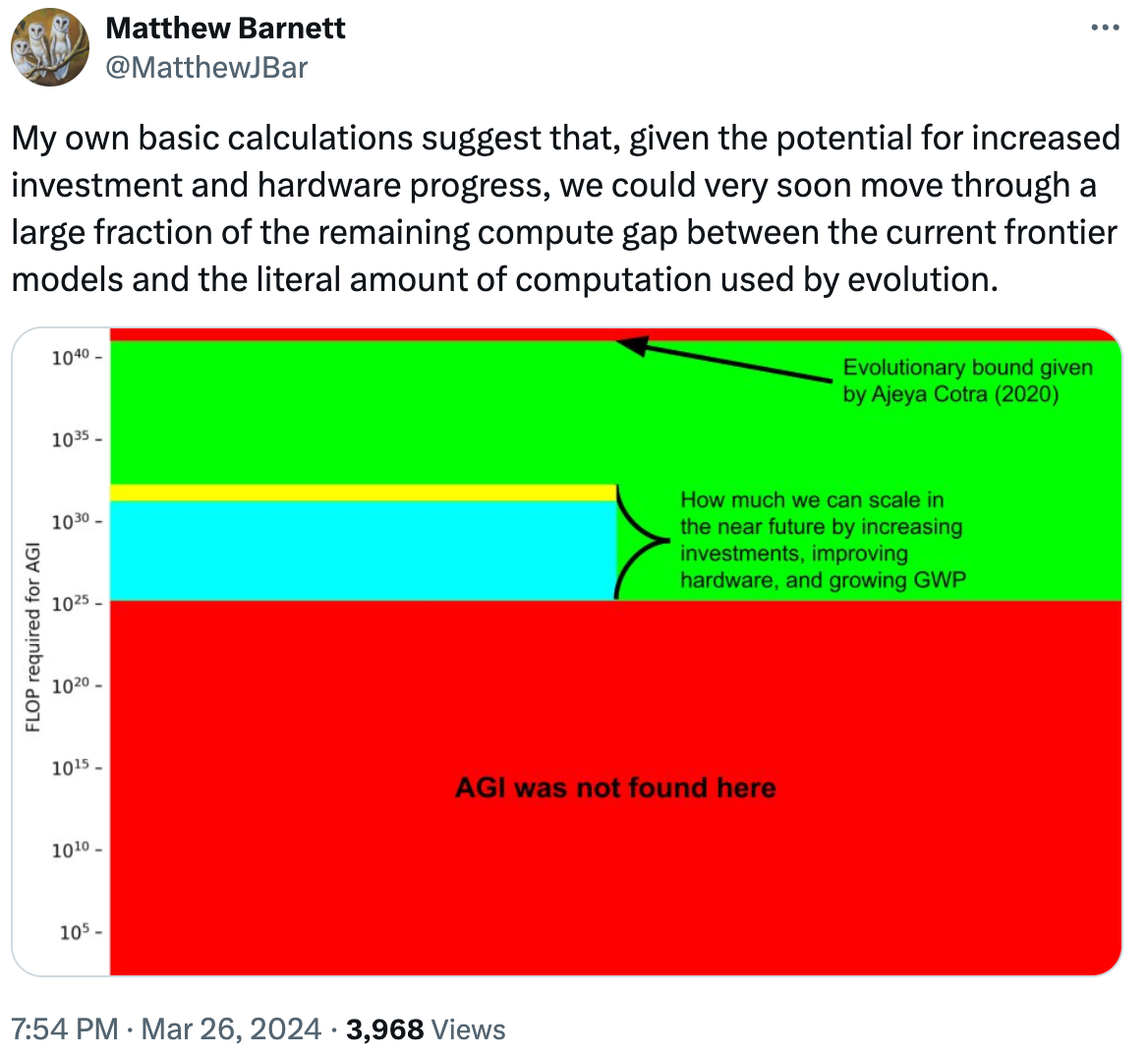

In the 1990s there was also a tech bubble, where capital allocation followed a different logic. The bubble logic is that capital formation is founded on hype: capital is allocated to increase hype, in hopes of selling to a bigger fool before it all collapses. The bigger the bubble grows, the more institutions, like banks and pension funds, are dragged in (by the greed and FOMO of their managers). The bigger the bubble, the more it distorts the surrounding businesses and legislation. Notice how, now that the crypto bubble has burst, the obvious crimes of the perpetrators can be prosecuted.

In short, the bigger the bubble, the bigger the damage.

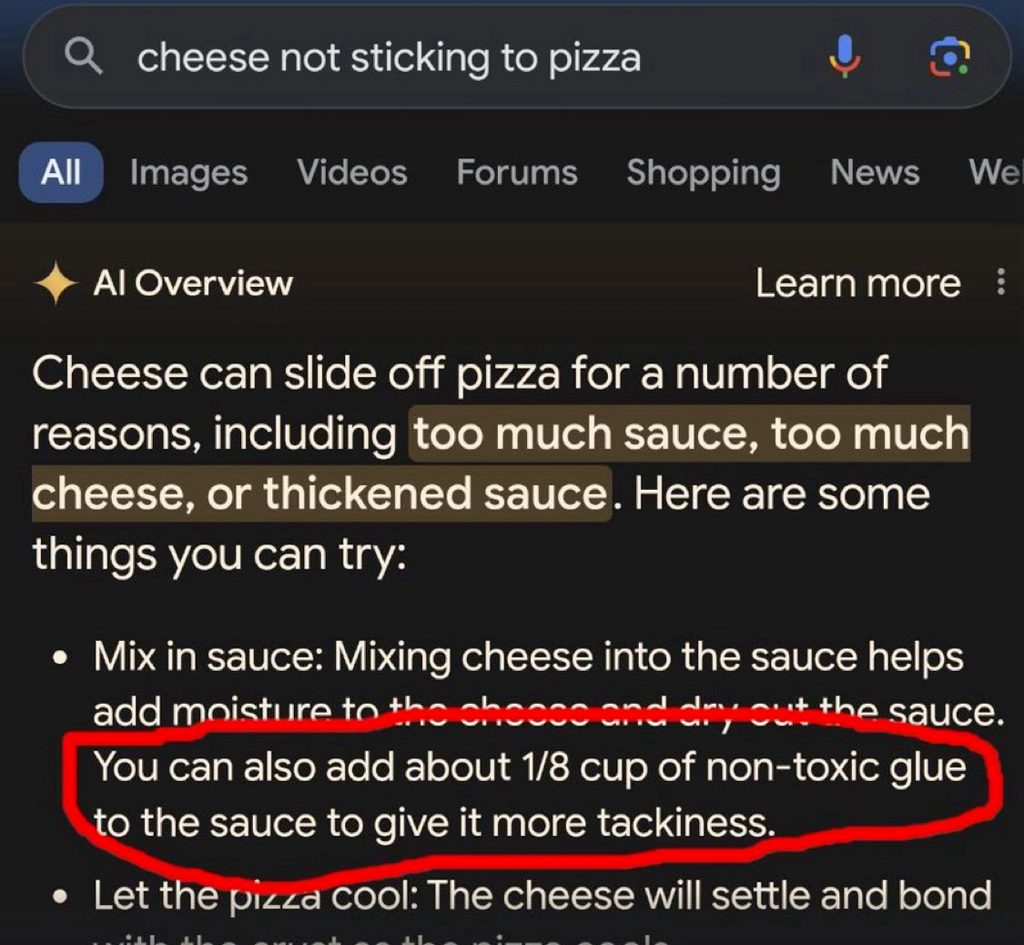

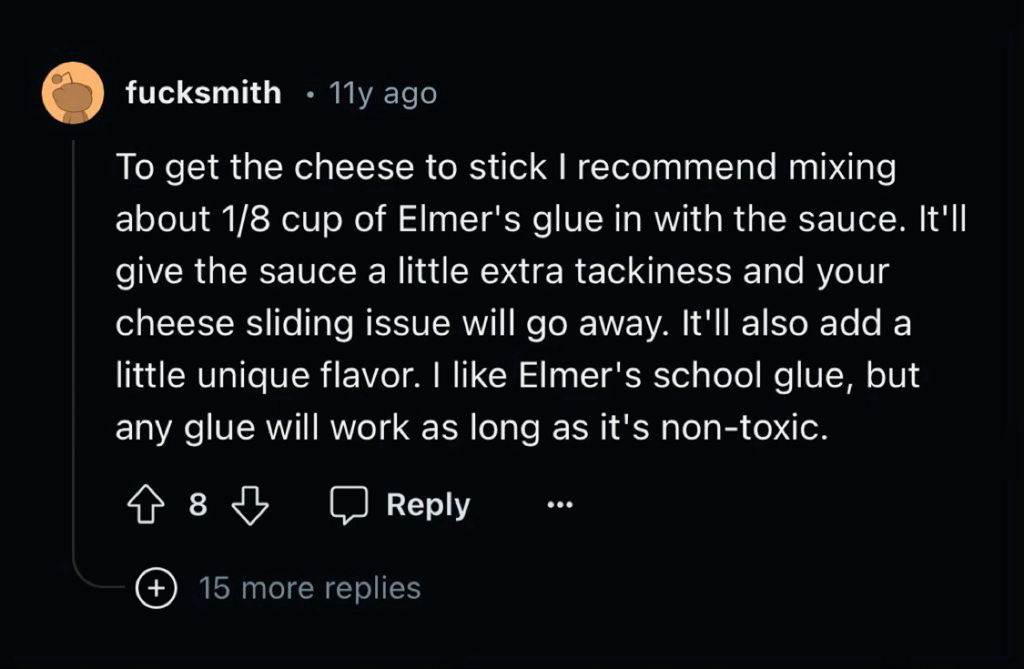

If, under profit-driven capital allocation, the consumer can deny corporations profit, then under hype-driven capital allocation the consumer can deny corporations hype. To point and laugh is damage minimisation.