Technically this isn't actually a Seafile issue; however, the upload client really should be able to run checksums that compare the original file with the copy synced to the server (or other device).

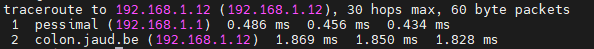

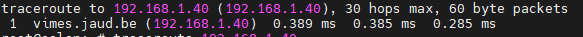

I run Docker in a VM hosted by Proxmox. Proxmox manages a ZFS array that holds the primary storage the VM uses. Instead of making the VM disk 1TB+, I kept it relatively small (64GB) since it only holds the OS, and the Docker containers mount a folder on the ZFS array itself, which is several TBs.

This had all been going really well with no issues until yesterday, when I tried to access some old photos and they would only load halfway. The top part would be there but the bottom half would be grey/missing.

This seemed to be randomly present on numerous photos, however some were normal and others had missing sections. Digging deeper, some files were also corrupt and would not open at all (PDFs, etc).

Badness alert....

All my backups come from the server. If the server data has been corrupt for a long time, then all the backups would be corrupt as well. All the files on the Seafile server were originally synced from my desktop, so when I open a file locally on the desktop it works fine; it only fails when I try to open it on Seafile. Also, not all the files were failing, only some. Some old, some new. Even the file sizes didn't seem to consistently predict whether a file would work or not.

It's now at the point where I can take a photo from my desktop, drag it into a Seafile library via the browser and see a successful upload, but then previewing the file won't work, and downloading that very same file back again gives a file of about 44 KB regardless of the original file size.

Google/DDG...can't find anyone that has the same issue...very bad

Finally I notice an error in mariadb: "memory pressure can't write to disk" (paraphrased).

Ok, that's odd. The RAM was fine, which is what I had assumed the problem was. HD space can't be the issue since the ZFS array is only 25% full and both MariaDB and Seafile only have volumes that are on the ZFS array. There are no other volumes...or are there???

Finally, in Portainer, I check out the volumes that exist. Seafile only has the two as expected, data and database. Then I see hundreds of unused volumes.

A quick google reveals `docker volume prune`, which deletes many GBs worth of volumes that were old and unused.
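For anyone wanting to catch this earlier, here is a rough Python sketch of the two checks that would have pointed me at the problem: how full the disk holding Docker's data is, and how many volumes no container references. It assumes the `docker` CLI is on the PATH and that Docker uses its default Linux data root `/var/lib/docker`; the function names are made up for illustration, so adjust to your setup.

```python
import shutil
import subprocess


def free_fraction(path: str) -> float:
    """Return the fraction of free space on the filesystem holding `path`."""
    usage = shutil.disk_usage(path)
    return usage.free / usage.total


def parse_volume_names(output: str) -> list[str]:
    """Turn the output of `docker volume ls --quiet` into a list of names."""
    return [line.strip() for line in output.splitlines() if line.strip()]


def dangling_volumes() -> list[str]:
    """Ask the Docker CLI for volumes not referenced by any container."""
    result = subprocess.run(
        ["docker", "volume", "ls", "--quiet", "--filter", "dangling=true"],
        capture_output=True,
        text=True,
        check=True,  # raise if the docker command itself fails
    )
    return parse_volume_names(result.stdout)


# Example use on the Docker host (the path is an assumption, see above):
#   print(f"{free_fraction('/var/lib/docker'):.0%} free")
#   print(f"{len(dangling_volumes())} dangling volumes")
```

Run from cron or similar, something like this could warn when free space gets low or the dangling volume count keeps growing, well before MariaDB starts failing writes.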

By this point, I had already created and recreated the Seafile Docker containers a hundred times with test data and simplified the docker compose as much as possible, but after clearing out the volumes it started working right away. MariaDB starts working, and I can now copy a file from the web interface or the client and it will work correctly.

Now I go through the process of setting up my original docker compose with all the extras that I had set up, remake my user account (luckily it's just me right now), set up the sync client and then start copying the data from my desktop to my server.

I've got to say, this was scary as shit. My setup uploads files from desktop, laptop, phone etc to the server via seafile, from there borg backup takes incremental backups of the data and sends it remotely. The second I realized that local data on my computer was fine but the server data was unreliable I immediately knew that even my backups were now unreliable.

IMHO this is a massive problem. Seafile will happily 'upload' a file and say success, but then trying to redownload the file results in an error since it doesn't exist.

Things that really should be present to avoid this:

- The client should have the option to run a quick checksum on each file after it uploads and compare the original to the uploaded one to ensure data consistency. There should probably be an option to do this afterwards as well as a check. Then it can output a list of files that are inconsistent.

- The default docker compose should be run with health checks on mariadb so when it starts throwing errors but the interface still runs, someone can be alerted.

- Need some kind of reminder to check in on unused Docker volumes and containers.
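Until something like the first point exists in the client, a manual check is possible. The sketch below is only an illustration under assumptions, not anything Seafile provides: it assumes you have the originals in one folder and a fresh download of the same library from the server in another, and it compares SHA-256 hashes file by file. The names `sha256_of` and `find_mismatches` are hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1024 * 1024) -> str:
    """Hash a file in chunks so large photos are not loaded into memory at once."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_mismatches(original_dir: Path, copy_dir: Path) -> list[str]:
    """Return relative paths that are missing from, or differ in, copy_dir."""
    mismatches = []
    for original in sorted(original_dir.rglob("*")):
        if not original.is_file():
            continue
        relative = original.relative_to(original_dir)
        copy = copy_dir / relative
        # A missing copy and a copy with different content both count as bad.
        if not copy.is_file() or sha256_of(original) != sha256_of(copy):
            mismatches.append(relative.as_posix())
    return mismatches
```

Calling `find_mismatches(Path("~/Photos").expanduser(), Path("/tmp/redownload"))` (both paths are placeholders) would give the list of inconsistent files described above. In my case the truncated ~44 KB downloads would have shown up immediately.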